Paper:

Teleoperating Assistive Robots: A Novel User Interface Relying on Semi-Autonomy and 3D Environment Mapping

Zdeněk Materna*, Michal Španěl*, Marcus Mast**, Vítězslav Beran*, Florian Weisshardt***, Michael Burmester**, and Pavel Smrž*

*Centre of Excellence IT4Innovations, Faculty of Information Technology, Brno University of Technology

Bozetechova 1/2, 612 66 Brno, Czech Republic

**Information Experience and Design Research Group, Stuttgart Media University

Nobelstr. 10, 70569 Stuttgart, Germany

***Fraunhofer Institute for Manufacturing Engineering and Automation IPA

Postfach 800469, 70504 Stuttgart, Germany

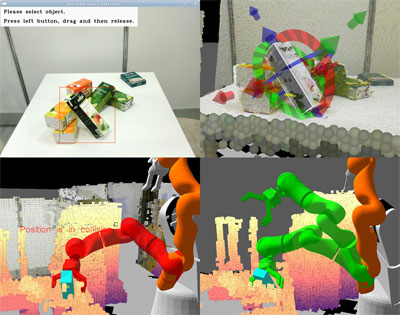

User-assisted pick and place task

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
