PMC full text. Published online 2020 May 27. doi: 10.1148/ryai.2020190043


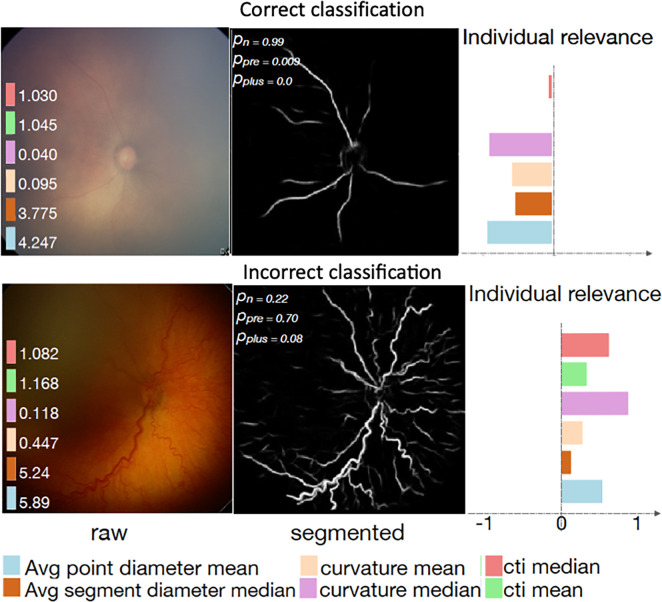

Figure 1b:

Examples of interpretability methods used on medical images. (a) Guided backpropagation and gradient-weighted class activation mapping (Grad-CAM) applied to MRI to highlight the areas of a brain image used by a deep learning model that classified the input image as a high-grade glioma. (Adapted and reprinted, with permission, from reference 7.) Pixel importance is color-coded: red = high importance, blue = low importance. (b) Regression concept vectors used to assess the relevance of selected features describing curvature, tortuosity, and dilatation of retinal arteries and veins in retinal images analyzed by a deep convolutional neural network. Examples of a correctly and an incorrectly classified image are shown; they suggest that the network is more sensitive to the curvature and dilatation concepts when classifying normal images and more sensitive to tortuosity when classifying disease images. (Adapted and reprinted, with permission, from reference 6.) Avg = average, cti = cumulative tortuosity index, Pn, Ppre, Pplus = network probabilities for normal, pre, and pre-plus classes.