Detecting COVID-19 infection hotspots in England using large-scale self-reported data from a mobile application: a prospective, observational study

Summary

Background

As many countries seek to slow the spread of COVID-19 without reimposing national restrictions, it has become important to track the disease at a local level to identify areas in need of targeted intervention.

Methods

In this prospective, observational study, we did modelling using longitudinal, self-reported data from users of the COVID Symptom Study app in England between March 24, and Sept 29, 2020. Beginning on April 28, in England, the Department of Health and Social Care allocated RT-PCR tests for COVID-19 to app users who logged themselves as healthy at least once in 9 days and then reported any symptom. We calculated incidence of COVID-19 using the invited swab (RT-PCR) tests reported in the app, and we estimated prevalence using a symptom-based method (using logistic regression) and a method based on both symptoms and swab test results. We used incidence rates to estimate the effective reproduction number, R(t), modelling the system as a Poisson process and using Markov Chain Monte-Carlo. We used three datasets to validate our models: the Office for National Statistics (ONS) Community Infection Survey, the Real-time Assessment of Community Transmission (REACT-1) study, and UK Government testing data. We used geographically granular estimates to highlight regions with rapidly increasing case numbers, or hotspots.

Findings

From March 24 to Sept 29, 2020, a total of 2 873 726 users living in England signed up to use the app, of whom 2 842 732 (98·9%) provided valid age information and daily assessments. These users provided a total of 120 192 306 daily reports of their symptoms, and recorded the results of 169 682 invited swab tests. On a national level, our estimates of incidence and prevalence showed a similar sensitivity to changes to those reported in the ONS and REACT-1 studies. On Sept 28, 2020, we estimated an incidence of 15 841 (95% CI 14 023–17 885) daily cases, a prevalence of 0·53% (0·45–0·60), and R(t) of 1·17 (1·15–1·19) in England. On a geographically granular level, on Sept 28, 2020, we detected 15 (75%) of the 20 regions with highest incidence according to government test data.

Interpretation

Our method could help to detect rapid case increases in regions where government testing provision is lower. Self-reported data from mobile applications can provide an agile resource to inform policy makers during a quickly moving pandemic, serving as a complementary resource to more traditional instruments for disease surveillance.

Funding

Zoe Global, UK Government Department of Health and Social Care, Wellcome Trust, UK Engineering and Physical Sciences Research Council, UK National Institute for Health Research, UK Medical Research Council and British Heart Foundation, Alzheimer's Society, Chronic Disease Research Foundation.

Introduction

The COVID-19 pandemic caused many countries to impose strict restrictions on their citizens' mobility and behaviour, often termed lockdowns, to curb the rapid spread of disease. Since relaxing these restrictions, many countries have sought to avoid reimposing them through combinations of non-pharmaceutical interventions1 and test-and-trace systems. Despite these efforts, many countries have had increases in infections since reopening and have often reimposed either regional2 or national lockdowns. Regional lockdowns aim to contain the disease while minimising the severe economic effect of national lockdowns.

The effectiveness of regional interventions depends on the early detection of so-called infection hotspots.3 Large-scale, population-based testing can indicate regional hotspots, but at the cost of a delay between testing and actionable results. Moreover, accurately identifying changes in the infection rate requires sufficient testing coverage of a given population,4 which can be costly and requires substantial testing capacity. Regional variation in testing access can hamper the ability of public health organisations to detect rapid changes in infection rate. There is a large unmet need for tools and methods that can facilitate the timely and cost-effective identification of infection hotspots so that policy makers can act with minimal delay.5

In this study, we used self-reported, population-wide data, obtained from a mobile application (the COVID Symptom Study app), combined with targeted PCR testing to provide geographical estimates of disease prevalence and incidence. We further show how these estimates can be used to provide timely identification of infection hotspots.

Methods

Study design and participants

For this prospective observational study, data were collected using the COVID Symptom Study app, developed by Zoe Global with input from King's College London (London, UK), the Massachusetts General Hospital (Boston, MA, USA), and Lund and Uppsala Universities (Sweden). The app guides users through a set of enrolment questions, establishing baseline demographic information. Users are asked to record each day whether they feel physically normal, and if not, to log any symptoms and keep a record of any COVID-19 tests and their results (appendix pp 3–7). More details about the app can be found in a study by Drew and colleagues,6 which also contains a preliminary demonstration of how symptom data can be used to estimate prevalence.

For the current study, eligible participants were required to have valid age information and to have logged at least one daily assessment. This study included only app users living in England, because some of the methods make use of England-specific testing capacity. In England, the Department of Health and Social Care (DHSC) allocated RT-PCR (swab) tests for severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) to users of the COVID Symptom Study app, beginning on April 28, 2020. Users who logged themselves as healthy at least once in a 9-day period and then reported any symptom (which we term newly sick) were sent invitations to book a test through the DHSC national testing programme, and they were then asked to record the result of the test in the app (note that individuals could be invited multiple times; appendix p 11). We included responses from all users in England (whether invited for a test or not) logged between March 24 and Sept 29, 2020. Our study was approved by King's College London ethics committee (REMAS ID 18210; LRS-19/20-18210), and all participants provided consent through the app.
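The invitation rule can be expressed as a small function over one user's daily log. The sketch below is hypothetical: the `DailyLog` mapping, the function name, and the exact reading of the 9-day window (the previous logged report was healthy and no more than 9 days earlier) are assumptions for illustration, not the app's implementation.

```python
from datetime import date
from typing import Dict, List

# Hypothetical log format (not the app's schema): maps each logged day to
# True if the user reported feeling physically normal, False if they logged
# any symptom.
DailyLog = Dict[date, bool]


def newly_sick_days(log: DailyLog, lookback_days: int = 9) -> List[date]:
    """Return the days on which a user would count as newly sick.

    Assumed reading of the rule: a symptom report counts when the user's
    previous logged report was healthy and at most `lookback_days` days
    earlier. Consecutive symptomatic reports are therefore flagged once.
    """
    flagged: List[date] = []
    last_healthy = None  # date of the most recent healthy report
    previous_was_sick = False
    for day in sorted(log):
        if log[day]:
            last_healthy = day
            previous_was_sick = False
            continue
        if (
            not previous_was_sick
            and last_healthy is not None
            and (day - last_healthy).days <= lookback_days
        ):
            flagged.append(day)
        previous_was_sick = True
    return flagged


example_log = {
    date(2020, 5, 1): True,
    date(2020, 5, 2): False,   # first symptom after a healthy day: flagged
    date(2020, 5, 3): False,   # still sick: not flagged again
    date(2020, 5, 10): True,
    date(2020, 5, 25): False,  # last healthy report 15 days earlier: not flagged
}
print(newly_sick_days(example_log))
```

With this reading, a user who recovers and later falls ill again can be flagged, and so invited, more than once.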

Procedures

We used three datasets to validate our models: the Office for National Statistics (ONS) Community Infection Survey, the Real-time Assessment of Community Transmission (REACT-1) study, and UK Government testing data. The ONS survey,7 which began on April 26, 2020, is a longitudinal survey of individuals selected to be a representative sample of private households (excluding, eg, care homes and student accommodation). Individuals in the ONS survey are supervised while they self-administer nose and throat swabs. The results give estimates of prevalence and incidence over time. Data are released weekly, with each release covering the 7–14 days before the release date; the first release was on May 10, 2020. The ONS survey swabs 150 000 participants per fortnight. The REACT-1 study began on May 1, 2020, and is a cross-sectional community survey relying on self-administered swab tests from a sample of the population in England.8, 9 The sample is randomly selected in each round of data collection. Data releases are intermittent and cover periods of several weeks. The UK Government swab test data are made up of two so-called pillars of testing: pillar 1 covers those with clinical need and health-care workers, and pillar 2 covers the wider population who meet government guidelines for testing.10 We used the ONS and REACT-1 surveys to compare our national estimates of incidence and prevalence, and we used the UK Government testing data to validate our geographically granular list of hotspots.

We calculated incidence using the invited swab tests reported in the app. We took 14-day averages, starting on May 12, 2020, to calculate the percentage of positive tests among newly sick users according to National Health Service (NHS) regions in England. We then combined this percentage with the proportion of users who report as newly sick in that region to produce the probability that a randomly selected person in that region is infected with COVID-19 on a given day (appendix p 2). We multiplied this probability by the population of each region to produce our swab-based incidence estimates, which we term IS. Incidence values are released daily, with a 4-day reporting lag.
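The swab-based incidence calculation combines a test positivity rate, a newly sick rate, and a population count. A minimal sketch for one NHS region and one 14-day window follows; the field names are hypothetical, and pooling positivity over the window while averaging the newly sick share over days is an assumption rather than the exact procedure in the appendix.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class RegionWindow:
    """Hypothetical 14-day summary for one NHS region (field names are illustrative)."""

    positive_tests: Sequence[int]    # positive invited swab tests, per day
    reported_tests: Sequence[int]    # invited swab tests with a reported result, per day
    newly_sick_users: Sequence[int]  # users flagged as newly sick, per day
    active_users: Sequence[int]      # users who logged a report, per day
    population: int                  # regional population


def swab_based_incidence(w: RegionWindow) -> float:
    """Estimated new cases per day in the region (a sketch of I_S)."""
    # Share of newly sick users who test positive, pooled over the window
    # (pooling rather than averaging daily percentages is an assumption).
    positivity = sum(w.positive_tests) / sum(w.reported_tests)
    # Average daily share of active users who become newly sick.
    newly_sick_share = sum(
        n / a for n, a in zip(w.newly_sick_users, w.active_users)
    ) / len(w.active_users)
    # Probability that a random resident is a new case today, scaled to the population.
    return positivity * newly_sick_share * w.population


window = RegionWindow([1] * 14, [10] * 14, [20] * 14, [10_000] * 14, 1_000_000)
print(round(swab_based_incidence(window)))
```

Here 10% positivity among newly sick users and a 0·2% daily newly sick rate in a region of one million people give about 200 new cases per day.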

We describe two methods for estimating prevalence. The first is symptom-based, primarily making use of self-reported symptoms and a predictive, symptom-based model for COVID-19. The second is both symptom-based and swab-based, and seeks to further integrate the information from swab test results collected in the app.

The symptom-based approach uses a previously validated logistic regression model11 to predict whether a user is SARS-CoV-2 positive on the basis of their reported symptoms (appendix p 2). For a given day, each user's most recent symptom report from the previous 7 days is used for prediction. If a user reports a positive COVID-19 test in that 7-day period, the test result overrides the user's symptom-based estimate. The proportion of positive users is used to estimate prevalence. A user who is predicted to be COVID-19-positive for more than 30 days is considered long-term sick and no longer infectious, and is then removed from the calculation. We sought to extrapolate these prevalence estimates to the general population. As noted in a previous study,12 there is a disparity in COVID-19 prevalence between regions of higher index of multiple deprivation (IMD), a measure of the relative deprivation of geographical regions,13 and those of lower IMD. We stratified users by Upper Tier Local Authority (UTLA), IMD tertile, and age band (in decades), and we predicted the percentage prevalence per stratum. We then multiplied the predicted percentage of positive cases in each stratum by that stratum's population size, according to census data, to estimate cases per stratum. These estimates were then summed to produce our population prevalence estimate, which we term PA. We examined the sensitivity of PA to health-seeking bias by removing all users reporting sick at sign-up from the analysis.
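The post-stratification step that produces PA can be sketched as follows. The stratum key, the function name, and the choice to skip census strata that contain no app users are assumptions; per-user predictions are taken as already computed by the symptom model.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Stratum key: (UTLA code, IMD tertile 1-3, age band in decades).
Stratum = Tuple[str, int, int]


def post_stratified_cases(
    user_predictions: Iterable[Tuple[Stratum, bool]],
    census_population: Dict[Stratum, int],
) -> Tuple[float, float]:
    """Return (estimated symptomatic cases, prevalence as a fraction).

    user_predictions holds one (stratum, predicted_positive) pair per user,
    where predicted_positive already reflects the symptom model and any
    positive test reported in the 7-day window.
    """
    positives: Dict[Stratum, int] = defaultdict(int)
    totals: Dict[Stratum, int] = defaultdict(int)
    for stratum, is_positive in user_predictions:
        totals[stratum] += 1
        positives[stratum] += int(is_positive)

    cases = 0.0
    covered = 0
    for stratum, population in census_population.items():
        n_users = totals.get(stratum, 0)
        if n_users == 0:
            continue  # assumption: strata without app users are left out
        # Predicted share positive in the stratum times its census population.
        cases += positives[stratum] * population / n_users
        covered += population
    return cases, cases / covered if covered else 0.0


preds = [(("E1", 1, 3), True)] + [(("E1", 1, 3), False)] * 9 + [(("E2", 3, 5), False)] * 5
census = {("E1", 1, 3): 1000, ("E2", 3, 5): 3000, ("E3", 2, 4): 500}
print(post_stratified_cases(preds, census))
```

Weighting by census population rather than by app users is what corrects for the app's skew towards particular age groups and less deprived areas.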

To estimate prevalence using the swab test data, we used our incidence estimates and the relationship $P_{t+1} = P_t + I_t - M_t$, where $P_t$ is the prevalence at time $t$, $I_t$ is the number of new COVID-19 cases at time $t$, and $M_t$ is the number of patients who recover at time $t$. We estimated $M_t$ from our data (appendix p 3).
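One way to run the prevalence recurrence $P_{t+1} = P_t + I_t - M_t$ is to derive $M_t$ from earlier incidence and a recovery-time distribution. The appendix model is not reproduced here; this sketch assumes that $M_t$ is the convolution of past incidence with a discrete recovery-time probability mass function, and the function name is hypothetical.

```python
from typing import List, Sequence


def prevalence_from_incidence(
    incidence: Sequence[float],
    recovery_pmf: Sequence[float],
    initial_prevalence: float = 0.0,
) -> List[float]:
    """Iterate P[t+1] = P[t] + I[t] - M[t] over a daily incidence series.

    Assumption: M[t] is obtained by convolving earlier incidence with
    recovery_pmf, where recovery_pmf[k] is the probability that an infection
    ends k days after onset. Recoveries among cases in initial_prevalence
    are ignored, so the series should start when prevalence is low.
    """
    prevalence = [initial_prevalence]
    for t in range(len(incidence)):
        # Expected recoveries on day t from cases that began k days earlier.
        recovered = sum(
            incidence[t - k] * recovery_pmf[k]
            for k in range(min(t + 1, len(recovery_pmf)))
        )
        prevalence.append(prevalence[-1] + incidence[t] - recovered)
    return prevalence


# Constant incidence of 10 per day; infections last 1 or 2 days with equal probability.
print(prevalence_from_incidence([10, 10, 10], [0.0, 0.5, 0.5]))
```

With constant incidence, prevalence settles at incidence multiplied by the mean infection duration (here 10 × 1·5 = 15), which is a quick check on any recovery model plugged into the recurrence.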

These prevalence estimates make use of the swab test results but lack geographical granularity, being calculated per NHS region. We can increase the granularity by taking the symptom-based estimates, which are calculated per UTLA, and rescaling the estimates for all UTLAs within an NHS region so that their total prevalence matches the estimated prevalence for that NHS region. We term this hybrid method, which uses both symptom reports and swab tests, PH. Granular incidence estimates can be produced by applying the model of recovery to these granular prevalence estimates; we term these estimates IH.
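The rescaling behind PH amounts to one multiplicative factor per NHS region. A minimal sketch, with hypothetical argument names and case counts rather than percentages as inputs:

```python
from typing import Dict


def rescale_to_regions(
    utla_cases: Dict[str, float],
    utla_to_region: Dict[str, str],
    region_cases: Dict[str, float],
) -> Dict[str, float]:
    """Rescale symptom-based UTLA case estimates to swab-based NHS region totals.

    utla_cases: symptom-based prevalent cases per UTLA.
    utla_to_region: NHS region containing each UTLA.
    region_cases: swab-based prevalent cases per NHS region.
    """
    # Sum the symptom-based estimates within each NHS region.
    symptom_totals: Dict[str, float] = {}
    for utla, cases in utla_cases.items():
        region = utla_to_region[utla]
        symptom_totals[region] = symptom_totals.get(region, 0.0) + cases

    rescaled: Dict[str, float] = {}
    for utla, cases in utla_cases.items():
        region = utla_to_region[utla]
        total = symptom_totals[region]
        # One scale factor per region keeps the relative pattern between UTLAs.
        rescaled[utla] = cases * region_cases[region] / total if total > 0 else 0.0
    return rescaled


print(rescale_to_regions(
    {"A": 100.0, "B": 300.0, "C": 50.0},
    {"A": "R1", "B": "R1", "C": "R2"},
    {"R1": 800.0, "R2": 25.0},
))
```

Because every UTLA in a region shares the same factor, the swab data set the regional level while the symptom data set the distribution within the region.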

It is possible to retrieve the effective reproduction number, known as R(t), from incidence rates by combining them with known values of the serial interval.14 Briefly, we used the relationship $I_{t+1} = I_t \exp(\mu (R(t) - 1))$, where $1/\mu$ is the serial interval. We modelled the system as a Poisson process and used Markov Chain Monte-Carlo to estimate R(t). In our probabilistic modelling, we assumed that the serial interval was drawn from a gamma distribution with α=6·0 and β=1·5, as in the study by Nishiura and colleagues.15 By sampling successive chains from the system, we obtained a distribution over R(t), which allowed us to report a median and 95% credible intervals. These estimates of uncertainty do not account for the uncertainty in the estimate of incidence, which we found to be mostly systematic and smaller than the other forms of uncertainty modelled.
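A minimal random-walk Metropolis sampler for this Poisson model is sketched below. It simplifies the analysis described here: it estimates a single constant R over the window, fixes the serial interval at one value instead of drawing it from the gamma distribution, and uses a uniform prior on R between 0 and 5. These choices and all names are assumptions.

```python
import math
import random
from typing import List, Sequence, Tuple


def log_likelihood(r: float, incidence: Sequence[int], mu: float) -> float:
    """Poisson log-likelihood of I[t+1] given mean I[t] * exp(mu * (R - 1))."""
    total = 0.0
    for current, following in zip(incidence[:-1], incidence[1:]):
        lam = current * math.exp(mu * (r - 1.0))  # requires current > 0
        total += following * math.log(lam) - lam - math.lgamma(following + 1)
    return total


def sample_r(
    incidence: Sequence[int],
    serial_interval: float,
    n_samples: int = 5000,
    burn_in: int = 1000,
    step: float = 0.05,
    r_max: float = 5.0,
    seed: int = 0,
) -> List[float]:
    """Random-walk Metropolis samples of a constant R over the window."""
    rng = random.Random(seed)
    mu = 1.0 / serial_interval
    r = 1.0
    current_ll = log_likelihood(r, incidence, mu)
    samples: List[float] = []
    for i in range(burn_in + n_samples):
        proposal = r + rng.gauss(0.0, step)
        # Uniform prior on (0, r_max): proposals outside it are rejected.
        if 0.0 < proposal < r_max:
            proposal_ll = log_likelihood(proposal, incidence, mu)
            accept = proposal_ll >= current_ll or rng.random() < math.exp(
                proposal_ll - current_ll
            )
            if accept:
                r, current_ll = proposal, proposal_ll
        if i >= burn_in:
            samples.append(r)
    return samples


def summarise(samples: Sequence[float]) -> Tuple[float, float, float]:
    """Median and approximate central 95% credible interval from samples."""
    ordered = sorted(samples)
    n = len(ordered)
    return ordered[n // 2], ordered[int(0.025 * n)], ordered[int(0.975 * n) - 1]


# Synthetic series growing 5% per day; with a serial interval of 4 days this implies R = 1.2.
synthetic = [round(1000 * math.exp(0.05 * t)) for t in range(30)]
print(summarise(sample_r(synthetic, serial_interval=4.0)))
```

The serial interval of 4 days and the synthetic series are illustrative only. A fuller version would sample the serial interval inside the chain and let R vary over time.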

A hotspot is defined as a sudden increase in the number of cases in a specific geographical region. We produced two rankings of UTLAs in England. The first ranks each UTLA by its estimated prevalence, PH. This ranking has the advantage of being preregistered; a list of the top ten UTLAs according to PH has been published online since July 23, 2020. However, it does not allow the direct identification of areas of concern (ie, areas with a large number of new cases), so we also report a second ranking using IH.

We compared our rankings to those obtained by ranking according to government testing data. England contains 149 UTLAs, each containing a mean of 370 000 people. We used the government data to produce daily reference rankings of each UTLA, based on 7-day moving averages of daily cases per UTLA. To produce the most accurate gold-standard ranking we could, we included all tests done on a given day in the ranking for that day, even if a test took several days to return its result. We used 7-day moving averages of PH and IH to produce our predicted rankings of each UTLA. We then evaluated these predictions against the historical reference using two metrics. The first, recall at 20, is the proportion of UTLAs in our top 20 that also appear in the reference top 20. The second, the normalised mean reciprocal rank at 20, measures the agreement between the ranks in our top 20 list and those in the reference list. We estimated uncertainty by drawing 100 samples of PH, IH, and the government testing data for each UTLA and day, using errors calculated with the Wilson interval approximation for the binomial distribution. These samples were ranked and the metrics recomputed to produce 95% CIs for each metric.

Statistical analysis

Power analysis found that 320 000 weekly active users are needed to detect an increase from 5000 to 7500 daily cases at a significance level of 0·05. All analyses were done with Python 3.7. We used ExeTera 0.2.7 for dataset processing and analysis.16 The COVID Symptom Study app is registered with ClinicalTrials.gov, NCT04331509.

Role of the funding source

Zoe Global developed the app for data collection. The funders had no role in the study design, data collection, data analysis, data interpretation, or writing of the report. All authors had full access to all the data and the corresponding author had final responsibility for the decision to submit for publication.

Results

From March 24 to Sept 29, 2020, a total of 4 644 227 participants signed up to use the app, of whom 2 873 726 (61·9%) reported living in England. After excluding a further 4508 (0·1%) participants with invalid age information and 26 486 (0·6%) without any daily assessments logged, 2 842 732 users were included in this study (table). In total, these participants completed 120 192 306 daily assessments, with a median of 16 logs (IQR 3–68) per user, corresponding to logged data for 22·4% of the total possible person-logs across the study period. Compared with the population of England, we had fewer participants aged 18 years or younger (196 143 [6·9%] vs 22·5%) and fewer aged 65 years or older (349 975 [12·3%] vs 18·4%), more female than male participants (1 739 929 [61·2%] female vs 50·6%), and fewer participants living in deprived areas (1 322 394 [46·5%] lived in the least deprived tercile vs 33·3% of the population; table). Between April 28 and Sept 29, a total of 851 250 invitations for swab tests were sent out. 169 682 (19·9%) of these invitations were followed by the user reporting a swab test result in the app, of which 1912 (1·1%) were positive. In addition, users reported the results of 689 426 non-invited tests in the app, of which 25 663 (3·7%) were positive. 621 031 (0·5%) of 120 192 306 symptom reports were classified as COVID-19 positive by the symptom model.

Table

Characteristics of all app users in England who signed up between March 24, and Sept 29, 2020

| Characteristic | Participants (n=2 842 732) |
|---|---|
| Age, years | 43·3 (17·3) |
| ≤18 | 196 143 (6·9%) |
| 19–64 | 2 296 614 (80·8%) |
| ≥65 | 349 975 (12·3%) |
| Sex | .. |
| Female | 1 739 929 (61·2%) |
| Male | 1 101 145 (38·7%) |
| Intersex | 150 (<0·1%) |
| Prefer not to say | 1508 (0·1%) |
| Preconditions* | .. |
| Kidney disease | 18 674 (0·7%); n=2 842 028 |
| Lung disease | 302 657 (12·1%); n=2 506 549 |
| Heart disease | 66 445 (2·3%); n=2 842 028 |
| Diabetes (type 1 and type 2) | 79 143 (2·8%); n=2 842 028 |
| Cancer† | 19 543 (1·3%); n=1 508 505 |
| Index of multiple deprivation | .. |
| Bottom tercile (most deprived) | 530 216 (18·7%) |
| Middle tercile | 990 122 (34·8%) |
| Upper tercile (least deprived) | 1 322 394 (46·5%) |
| Smokers | 276 044 (9·7%) |
| Race or ethnicity‡ | .. |
| Asian or Asian British (Indian, Pakistani, Bangladeshi, or other) | 49 237 (2·3%); n=2 144 799 |
| Black or Black British (Caribbean, African, or other) | 13 556 (0·6%); n=2 144 799 |
| Mixed race (White and Black or Black British) | 13 556 (0·6%); n=2 144 799 |
| Mixed race (other) | 31 627 (1·5%); n=2 144 799 |
| White (British, Irish, or other) | 2 001 553 (93·3%); n=2 144 799 |
| Chinese or Chinese British | 7823 (0·4%); n=2 144 799 |
| Middle Eastern or Middle Eastern British (Arab, Turkish, or other) | 8880 (0·4%); n=2 144 799 |
| Other ethnic group | 10 464 (0·5%); n=2 144 799 |
| Prefer not to say | 8103 (0·4%); n=2 144 799 |

Data are mean (SD) or n (%).

In our comparison of England-wide incidence estimates (IS) with government testing data and the ONS survey17 (figure 1A), we included two estimates from the ONS: the official reports, released every week, and the results from time-series modelling. The reports represent the best estimate of the ONS at the time of release, whereas the time-series model can evolve, leading to revision of previous estimates in response to new data (appendix p 7). The government values are consistently lower than the other estimates because they include only people who were tested and are therefore not representative of the population. To account for this, we looked at users who reported classic symptoms (fever, loss of smell, and persistent cough) for the first time between July 7 and Aug 5, 2020, and found that 50 499 (62%) of them did not get tested. Because only about 40% of these symptomatic users were tested, we scaled the government data by a factor of 2·5 (approximately 1/0·4), our best estimate of the systematic undercounting of new cases.

Daily incidence in the UK since May 12, 2020, compared with daily laboratory-confirmed cases and the ONS study (A) and daily prevalence in the UK compared with the ONS and REACT-1 studies (B)

ONS data are taken from the report released on Oct 9, 2020, and ONS report dates are taken as the midpoint for the date range covered by the estimate. For the Symptom Study and REACT-1, the shaded areas represent 95% CIs. For the ONS, the shaded areas and error bars represent 95% credible intervals. ONS=Office for National Statistics. REACT-1=Real-time Assessment of Community Transmission.

Our results show a steep decline in incidence until the middle of July, a trend in agreement with the government data and ONS estimates. All three estimates show an increase in the number of daily cases from mid-August throughout September; on Sept 28, 2020, we estimated that there were 15 841 (95% CI 14 023–17 885) daily cases. Estimates of incidence per NHS region and maps of our most granular incidence estimates, IH, are in the appendix (p 8).

We also compared our England-wide prevalence estimates, PA and PH, with prevalence reported by the ONS and REACT-1 studies (figure 1B). The symptom-based assessments, PA, indicate a continuous drop in the number of cases from April 1, following the lockdown measures instigated on March 23, which then plateaued in mid-June and began to rise again sharply from early September. The trends observed for PA agree with data from the ONS survey and the REACT-1 study, although there is some divergence in September, when both PA and REACT-1 data show a sharper rise in prevalence than the ONS data (figure 1B). It should be noted that PA captures only symptomatic cases, whereas ONS and REACT-1 data also capture asymptomatic cases, which are thought to account for 40–45% of the total cases.18 Taking this factor into account, PA is slightly higher than ONS and REACT-1 data. On Sept 28, 2020, we estimated a prevalence of 0·53% (95% CI 0·45–0·60).

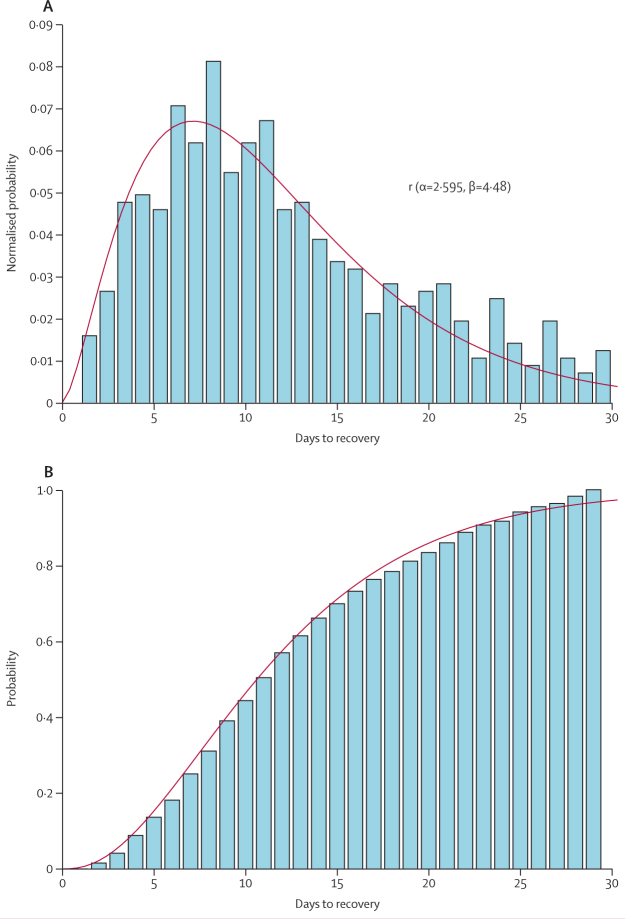

PH agrees with the trends in the other estimates, and predicts a rise in cases in September at a similar rate to PA and REACT-1 data (figure 1B). However, the absolute values are consistently lower than other estimates. The recovery model used to calculate PH is shown in figure 2. Although most users recover in 7–10 days, the curve shows there is a substantial minority who take longer than 3 weeks to recover from COVID-19.

Empirical probability density function of days to recovery with a gamma fit (A) and empirical cumulative distribution function of days to recovery with the same gamma fit (B)

The bars represent empirical findings and the red line is the gamma fit.
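The text does not state how the gamma curve was fitted. As one simple option, the sketch below uses the method of moments (shape = mean²/variance, scale = variance/mean) and also computes the empirical share of users who took longer than a given number of days to recover. The fitting method, the function names, and the synthetic data are assumptions, and a maximum likelihood fit may give somewhat different parameters.

```python
import random
import statistics
from typing import Sequence, Tuple


def fit_gamma_moments(days_to_recovery: Sequence[float]) -> Tuple[float, float]:
    """Method-of-moments gamma fit; returns (shape, scale).

    Uses mean = shape * scale and variance = shape * scale ** 2.
    """
    mean = statistics.fmean(days_to_recovery)
    variance = statistics.pvariance(days_to_recovery, mu=mean)
    return mean * mean / variance, variance / mean


def share_longer_than(days_to_recovery: Sequence[float], threshold: float) -> float:
    """Empirical fraction of users whose recovery took longer than threshold days."""
    return sum(d > threshold for d in days_to_recovery) / len(days_to_recovery)


# Synthetic durations for illustration only (not study data): gamma with shape 3, scale 3.
rng = random.Random(1)
synthetic_days = [rng.gammavariate(3.0, 3.0) for _ in range(20_000)]
print(fit_gamma_moments(synthetic_days), share_longer_than(synthetic_days, 21))
```

The second function makes the tail described in the text explicit: `share_longer_than(days, 21)` gives the fraction of users who took more than 3 weeks to recover.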

The size of our dataset allows us to estimate prevalence for more granular geographical regions than the ONS (appendix p 9). We considered that our estimates of prevalence might be biased by a user's health-seeking behaviour, and we sought to assess the influence of this factor by removing from the analysis all users who reported being sick upon sign-up (appendix p 10).

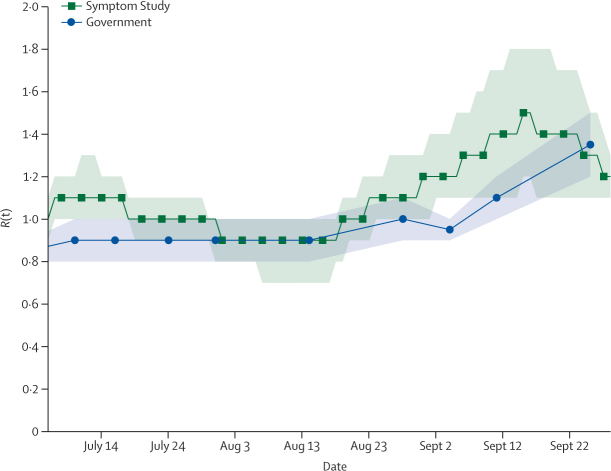

We compared our estimates of R(t) for England between June 24 and Sept 28, 2020, with the consensus estimates provided by the UK Government Scientific Pandemic Influenza Group on Modelling (figure 3).19 Estimates for each of the NHS regions are in the appendix (p 12). Both sets of estimates indicate that R(t) has been above 1 since early to mid-September, and we estimate that R(t) in England was 1·17 (95% CI 1·15–1·19) on Sept 28, 2020. The government estimates are much smoother than ours, probably because they are a consensus of the R(t) estimates from models produced by many groups.

Estimated R(t) for England between June 24 and Sept 28, 2020

The shaded area for Symptom Study data represents 95% credible intervals and for government data represents 95% CIs. UK Government estimates published every 7–12 days from June 12, 2020. R(t)=effective reproduction number.

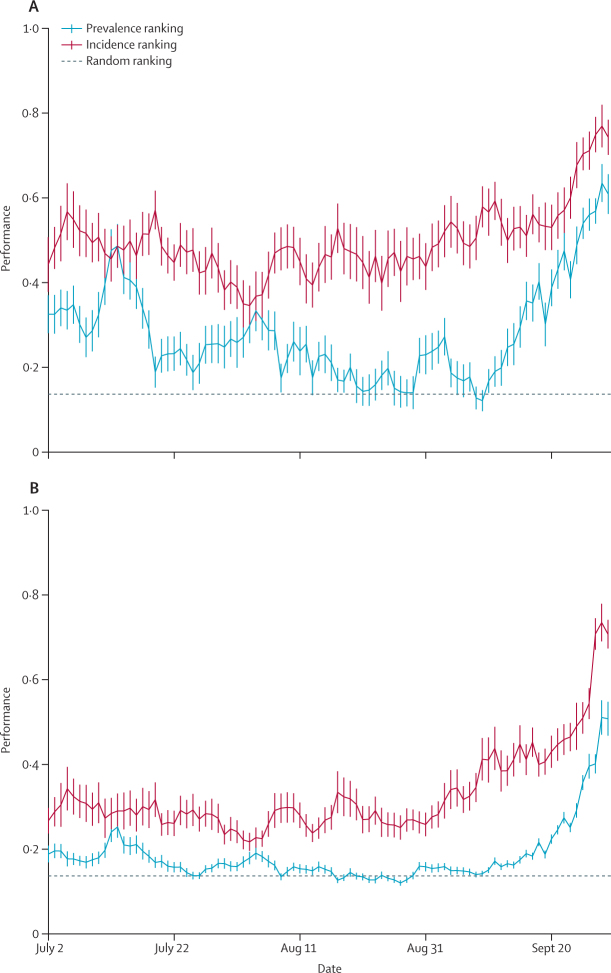

Concerning hotspot detection in England, ranking based on incidence consistently outperformed ranking based on prevalence (figure 4). Performance varied over time; the incidence-based ranking produced recall scores of up to 0·75, with a minimum of 0·35, indicating that we identified between 7 and 15 of the UTLAs in the UK Government's top 20. On a geographically granular level, on Sept 28, 2020, we detected 15 (75%) of the 20 regions with highest incidence according to government test data. The ranking performed best in late September, when cases began to rise, which we attribute to the larger differences between regional case numbers at that time. We also compared the agreement of weekly cases in each UTLA between our data and government data against the number of government pillar 2 tests carried out (figure 5). The correlation indicates that the two estimates agree best where the government carries out more tests. This finding suggests that disagreements between the two rankings could be partly explained by poorer ranking in regions with limited government testing.

Performance of our two ranking methods: ranking by prevalence and incidence on two metrics, recall at 20 (A) and the normalised mean reciprocal rank at 20 (B)

Vertical lines represent 95% CIs.

Discussion

In this study, we have demonstrated the use of population-wide data reported through the COVID Symptom Study app, drawing on more than 120 million daily reports and about 170 000 invited swab tests from more than 2·8 million users to estimate prevalence, incidence, and R(t). Other digital surveys have been used to provide valuable real-time information about the pandemic, including How We Feel,20 Corona Israel,5 the Facebook Survey, and CovidNearYou. However, to our knowledge we are the first to provide national-level disease surveillance, and we have found good agreement with traditional, representative community surveys.

Furthermore, we have used our data to produce geographically granular estimates and a list of potential hotspots. The list consistently flags a relatively high number of the regions highlighted by the UK Government testing data. Although we compared our data with government testing data in our results, these government data cannot be considered ground truth estimates of COVID-19 cases. The government data are an incomplete sampling of new COVID-19 cases; results from our app indicate that only about 40% of those who report classic COVID-19 symptoms go on to receive a test. Furthermore, testing capacity is not uniform across all UTLAs.21 Our results indicate that our case estimates agree best with government estimates in areas with high levels of testing per capita, suggesting that our estimates could prove a valuable resource for forecasting in regions with poor testing provision. There are other reasons why our list might differ from the government list. For example, the two methods might have different uptake in some higher-risk groups, such as students in provided accommodation, and thus show different sensitivity to hotspots depending on the demographic make-up of a region. The two methods might therefore be complementary, and we suggest that our hotspot detection could be most beneficial as an additional indication of regions where increased testing might best be focused. The modest reliance on PCR tests suggests our approach could prove valuable in countries where testing infrastructure is less developed, although further work is required to assess our approach in other locations.

Other efforts to track the national progression of COVID-19 rely on self-swabbing from community cohorts. Two such efforts exist in England: the ONS study17 and the REACT-1 study.8, 9 These studies have the advantage of being more representative of the population, and their design enables the detection of asymptomatic cases. However, they are smaller than the COVID Symptom Study; the ONS and REACT-1 studies currently report 120 000–175 000 participants in England, whereas the app reports over 2 800 000 users in England. The ability to use self-reported symptom data from this large cohort enables us to make predictions for more granular geographical regions than either the ONS or the REACT-1 study, allowing us to predict COVID-19 hotspots at the UTLA level. Our estimates should therefore be viewed as independent of and complementary to those provided by the ONS and REACT-1 studies.

Several limitations to our work must be acknowledged. The app users are not a representative sample of the wider population about which we aim to make inferences. There is a clear shift in age and gender compared with the general population, our users tend to live in less deprived areas,12 and we have few users reporting from key sites such as care homes and hospitals. We accounted for some population differences when producing prevalence estimates by using census-adjusted population strata, but the number of invited tests does not allow us to do this when calculating incidence. Differences in reported symptoms across age groups22 would probably lead to different prediction models of COVID-19 positivity, and the performance of the model will vary with the prevalence of other infections with symptoms that overlap with COVID-19, such as influenza. Furthermore, the app population is less ethnically diverse than the general population.23 Reliance on user self-reporting can also introduce bias into our results; for instance, users who are very sick might be less likely to report than those with mild symptoms. Other sources of error include collider bias24 arising from a user's probability of using the app being dependent on their likelihood of having COVID-19, potentially biasing our estimates of incidence and prevalence. We showed a sensitivity analysis that attempts to understand the effect of health-seeking behaviour, but we acknowledge that there are many other biases that might affect our results (for example, our users might be more risk-averse than the general population) and that our results must be interpreted with this in mind.

We have presented a means of combining app-based symptom reports and targeted testing from over 2·8 million users to estimate incidence, prevalence, and R(t) in England. By integrating symptom reports with PCR test results, we were able to highlight regions which might have concerning increases in COVID-19 cases. This approach could be an effective, complementary way for governments to monitor the spread of COVID-19 and identify potential areas of concern.

Data sharing

Data collected in the COVID Symptom Study smartphone application are being shared with other health researchers through the UK NHS-funded Health Data Research UK (HDRUK) and Secure Anonymised Information Linkage consortium, housed in the UK Secure Research Platform (Swansea, UK). Anonymised data are available to be shared with HDRUK researchers according to their protocols in the public interest. US investigators are encouraged to coordinate data requests through the Coronavirus Pandemic Epidemiology Consortium.

Acknowledgments

Zoe Global provided in-kind support for all aspects of building, running, and supporting the app, and provided service to all users worldwide. Support for this study was provided by the National Institute for Health Research (NIHR)-funded Biomedical Research Centre (BRC) based at Guy's and St Thomas' NHS Foundation Trust. Investigators also received support from the Wellcome Trust, the UK Medical Research Council and British Heart Foundation, Alzheimer's Society, the EU, the UK NIHR, Chronic Disease Research Foundation, and the NIHR-funded BioResource, Clinical Research Facility and BRC based at Guy's and St Thomas' NHS Foundation Trust, in partnership with King's College London, the UK Research and Innovation London Medical Imaging and Artificial Intelligence Centre for Value Based Healthcare, the Wellcome Flagship Programme (WT213038/Z/18/Z), the Chronic Disease Research Foundation, and the DHSC. CMA is supported by the National Institute of Diabetes and Digestive and Kidney Diseases (K23 DK120899), as is DAD (K01 DK120742). DAD and LHN are supported by the American Gastroenterological Association–Takeda COVID-19 Rapid Response Research Award (AGA2021-5102). The Massachusetts Consortium on Pathogen Readiness and Mark and Lisa Schwartz supported DAD, LHN, and ATC. LHN is supported by the American Gastroenterological Association Research Scholars Award and the National Institute of Diabetes and Digestive and Kidney Diseases (K23 DK125838). TF holds a European Research Council Starting Grant. ATC was supported in this work through a Stuart and Suzanne Steele MGH Research Scholar Award. Investigators from the COVID Symptom Study Sweden were funded in part by grants from the Swedish Research Council, Swedish Heart–Lung Foundation, and the Swedish Foundation for Strategic Research (LUDC-IRC 15-0067). We thank Catherine Burrows (Bulb, UK) for assistance with database querying.

Contributors

TV, MSG, TF, MFG, PWF, JW, CJS, TDS, and SO contributed to the study concept and design. SG, JCP, CHS, DAD, LHN, ATC, RD, JW, CJS, TDS, and SO contributed to the acquisition of data. TV, MSG, LSC, SG, JCP, CHS, and BM contributed to data analysis and have verified the underlying data. TV, MSG, and LSC contributed to the initial drafting of the manuscript. ATC, CJS, TDS, and SO contributed to study supervision. All authors contributed to the interpretation of data and critical revision of the manuscript.

Declaration of interests

SG, JCP, RD, and JW are employees of Zoe Global. DAD and ATC previously served as investigators on a clinical trial of diet and lifestyle using a separate smartphone application that was supported by Zoe Global. TF reports grants from the European Research Council, Swedish Research Council, Swedish FORTE Research Council, and the Swedish Heart–Lung Foundation, outside the submitted work. MFG reports financial and in-kind support within the Innovative Medicines Initiative project BEAr-DKD from Bayer, Novo Nordisk, Astellas, Sanofi-Aventis, AbbVie, Eli Lilly, JDRF International, and Boehringer Ingelheim; personal consultancy fees from Lilly; financial and in-kind support within a project funded by the Swedish Foundation for Strategic Research on precision medicine in diabetes from Novo Nordisk, Pfizer, Follicum, and Abcentra; in-kind support on that project from Probi and Johnson and Johnson; and a grant from the EU, outside the submitted work. ATC reports grants from the Massachusetts Consortium on Pathogen Readiness, during the conduct of the study, and personal fees from Pfizer and Boehringer Ingelheim and grants and personal fees from Bayer, outside the submitted work. TDS is a consultant to Zoe Global. All other authors declare no competing interests.

References

Read article at publisher's site: https://doi.org/10.1016/s2468-2667(20)30269-3


Clinical Trials

- ClinicalTrials.gov: NCT04331509


Funding

- Crohn's & Colitis Foundation: grant IDs 563526 and 690389
- Medical Research Council: grant IDs HDR-9004 and MR/M016560/1 (MICA: Ancestry and biological Informative Markers for stratification of HYpertension: The AIM HY study; Professor Phil Chowienczyk, King's College London)
- NIDDK NIH HHS: grant IDs K23 DK120899, K23 DK125838, and K01 DK120742
- Wellcome Trust: grant IDs 212904/Z/18/Z and WT213038/Z/18/Z