Abstract

Objective

Data-driven population segmentation is commonly used in clinical settings to separate a heterogeneous population into multiple relatively homogeneous groups with similar healthcare features. In recent years, machine learning (ML)-based segmentation algorithms have garnered interest for their potential to speed up and improve algorithm development across many phenotypes and healthcare situations. This study evaluates ML-based segmentation with respect to (1) the populations studied, (2) the segmentation details, and (3) the outcome evaluations.

Materials and Methods

MEDLINE, Embase, Web of Science, and Scopus were searched following the PRISMA-ScR guidelines. Peer-reviewed, English-language studies published from January 2000 to October 2022 that applied data-driven population segmentation analysis to structured data were included.

Results

We identified 6077 articles and included 79 in the final analysis. Data-driven population segmentation analysis was employed in various clinical settings. K-means clustering was the most prevalent unsupervised ML method. The most common settings were healthcare institutions, and the most common target population was the general population.

Discussion

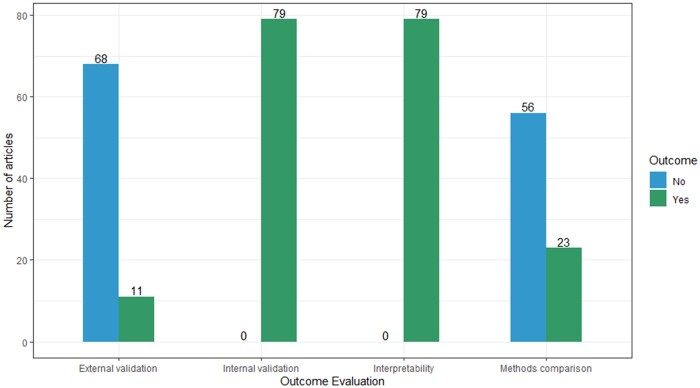

Although all the studies performed internal validation, only 11 papers (13.9%) performed external validation, and 23 papers (29.1%) conducted method comparisons. Existing papers paid little attention to validating the robustness of ML models.

Conclusion

Existing ML applications in population segmentation need further evaluation of whether they provide more tailored, efficient, integrated healthcare solutions than traditional segmentation analysis. Future ML applications in the field should emphasize method comparisons and external validation, and should investigate approaches for evaluating whether individuals are assigned consistently across different methods.

Keywords: population segmentation, machine learning, data analytics, population health, health services research

BACKGROUND AND SIGNIFICANCE

Electronic health records (EHRs) are quickly becoming a primary data source for clinical and translational research due to their accessibility, volume, and granularity.1 In addition, EHR data have the potential to play a pivotal role in the research and development of practical, patient-specific approaches to disease prevention and treatment.2,3 Phenotyping algorithms based on machine learning (ML) models have received much attention in recent years for their potential to enhance the speed and precision of algorithm development across a wide variety of phenotypes and healthcare contexts.4,5 Compared to rule-based methods, which are human-driven, ML approaches consolidate multiple sources of information accessible in patient records to improve phenotypic characterization in an automated and generalizable manner.4

Accordingly, the use of EHRs to provide targeted, effective, and coordinated healthcare services has sparked a renewed focus on population health and integrated health systems around the world.6,7 With this patient-centered approach, healthcare systems can learn more about the health of their populations, determine which health intervention initiatives to fund first, and allocate their resources more wisely.8

Although it is impractical to address the unique care requirements of each member of a diverse community at the health policy level, the population can be divided into smaller groups with similar physical, mental, and social health profiles and needs.9 Population segmentation allows different care plans to be designed for each subgroup.10 Population segmentation analysis also improves healthcare resource planning and evidence-based policymaking.1 Over time, 2 primary methodologies for partitioning populations have emerged. The first comprises data-driven approaches, which apply post hoc statistical analyses, such as cluster analysis and latent class analysis, to empirical data.11 The second comprises expert-driven approaches, which divide a population into subgroups according to a priori, expert-defined criteria based on literature review and consensus.1

Given that data-driven population segmentation is gaining popularity and its potential utility is increasingly recognized,5 the field of population health is likely to produce more such studies in the near future. A previous review5 of ML applications in population segmentation summarized the clinical settings and methods used and proposed a suitable segmentation framework. However, it said little about whether studies validated the robustness of their ML models, for example, by conducting method comparisons.

OBJECTIVE

The purpose of this paper is to retrieve and critically review the published literature on the clinical application of ML in data-driven population segmentation analysis, with respect to the populations studied, segmentation details, and outcome validation.

MATERIALS AND METHODS

Study design

The scoping review was conducted according to the PRISMA extension for Scoping Reviews (PRISMA-ScR) guidelines.12 Scoping studies are a popular review method. They map evidence to summarize the breadth and depth of a field, clarify complex concepts, and inform future research. Unlike systematic reviews, they do not assess study quality. Unlike narrative reviews, they require analytical interpretation of the literature. Because they are suited to emerging evidence, they can include both published and gray literature and can complement clinical trial results.13

Search strategies

We searched literature databases to obtain a comprehensive list of potentially relevant papers for this scoping review (Figure 1). The MEDLINE, Embase, Web of Science, and Scopus databases were searched from January 1, 2000, through October 1, 2022. We chose this range for 2 reasons. First, it captures the use and development of ML in population segmentation since 2000, when unsupervised ML methods became widespread. Second, it updates existing reviews as ML has grown in popularity in recent years. Our search strategy included the key search terms stratif* OR segment* OR categor* OR “cluster analysis” OR cluster* OR pattern* OR profil* OR phenotyp* OR partition* OR subgroups OR subtypes OR subpopulations. The search strategy for each database is presented in the Supplementary Material. We also conducted an ancestral search of the reference lists of relevant articles.

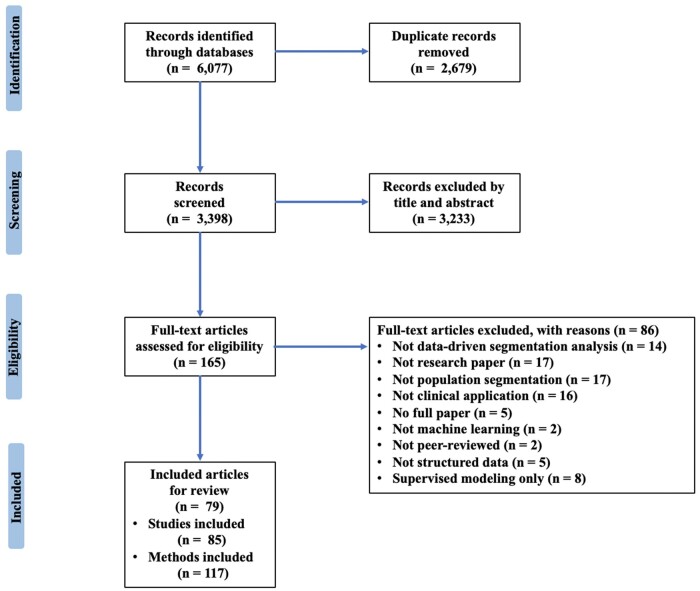

Figure 1.

Flow diagram for the scoping review process adapted from the PRISMA-ScR statement.

Eligibility criteria

Full-text, original, peer-reviewed, English-language papers that applied data-driven population segmentation analysis to structured data were included. The segmentation methods had to be applied to real-world datasets, not theoretical, hypothetical, or simulated ones. Meta-analyses, case series, case reports, conference papers, gray literature, and reviews were excluded.

We also excluded articles that met any of the following conditions:
- did not involve human subjects;
- were not in English;
- did not apply ML methods (statistical methods such as latent class analysis were not included);
- did not use unsupervised segmentation methods, which take unlabeled data as inputs;
- were not applied in clinical settings;
- relied only on experts’ opinions.

Studies that clustered units other than individuals were also excluded, for example, studies that used the country as the unit of analysis.

Selection of studies

EndNote, version X9 was used to remove duplicate publications. Two independent researchers (PL and ZW) reviewed the abstracts of retrieved papers for inclusion and discussed any discrepancies. They then independently examined and assessed the full-text papers for eligibility.

As illustrated in Figure 1, database searching yielded 3398 articles after 2679 duplicates were removed. Abstract screening excluded 3233 papers that did not meet the inclusion criteria. The remaining 165 articles underwent full-text review, and 79 of them were ultimately included. The percentage agreement between PL and ZW was 90%. Disputes in the selection procedure were resolved by discussion and consensus with 2 other researchers (MAP and NL).
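The 90% figure above is raw percentage agreement. The minimal sketch below computes it in plain Python on hypothetical screening decisions, not the review’s data. For comparison, it also computes Cohen’s kappa, a chance-corrected statistic that the review does not report. When most abstracts are excluded, high raw agreement can coexist with a noticeably lower kappa.

```python
from collections import Counter


def percentage_agreement(rater_a, rater_b):
    """Share of items on which two screeners made the same decision."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both screeners must rate the same non-empty set of items")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(rater_a)
    observed = percentage_agreement(rater_a, rater_b)
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability that both screeners pick the same category
    # if each decided independently at their own marginal rates.
    expected = sum(freq_a[c] * freq_b[c] for c in set(freq_a) | set(freq_b)) / n**2
    if expected == 1:
        return 1.0  # both screeners used a single, identical category
    return (observed - expected) / (1 - expected)


# Hypothetical decisions on 10 abstracts: "I" = include, "E" = exclude.
screener_1 = ["I", "I", "E", "E", "E", "I", "E", "E", "E", "E"]
screener_2 = ["I", "E", "E", "E", "E", "I", "E", "E", "E", "E"]
print(percentage_agreement(screener_1, screener_2))  # 0.9
print(round(cohens_kappa(screener_1, screener_2), 3))  # 0.737
```

In this toy example, 90% raw agreement corresponds to a kappa of about 0.74, because exclusion dominates both screeners’ decisions.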

Data extraction

Once an article was determined to be eligible, 2 researchers (PL and ZW) independently extracted data from it. In addition to the title and year of publication, we extracted information from 3 perspectives:
- populations studied: target population, population size, country of the study, health conditions, data sources, data type, and study settings;
- segmentation: segmentation objectives, number of variables, type of variables used, statistical methods, whether the method was newly designed or existing, number of segments, and names of segments;
- validation: comparison with other methods, criteria to determine the number of segments, internal validation, external validation, interpretability, and methods for missingness.

Data synthesis

For the included publications, we summarized target group characteristics, such as inclusion criteria, sample size, and geographic region, to describe the populations subjected to data-driven segmentation analysis. We detailed data sources (primary or secondary) and settings (community-based or healthcare institutions) to inform future research. Moreover, we grouped segmentation objectives into themes and assessed how articles used the derived segments. We also highlighted the limitations of statistical methods to guide method selection. Finally, we examined method comparisons to assess the validity of conclusions and proposed pilot plans for evaluating consistency across different approaches.

RESULTS

Some of the 79 included papers used more than one dataset and conducted multiple studies, so this review covers 85 studies. Likewise, a single study could apply several methods, so the total count of methods is 117. We summarized the information in 3 parts: populations, segmentation details, and outcome validation.

The first part covers the populations subjected to segmentation analysis, including population size, country, health conditions, data sources, and study settings (Supplementary Table S2). The second part covers the purpose of segmentation, the variables used, the statistical methods, whether the method was newly designed or existing, and the number and names of the derived segments (Supplementary Table S3). The third part covers comparisons with other methods, criteria for determining the number of segments, internal validation, external validation, interpretability, and methods for handling missing values (Supplementary Table S4).

Population studied

As shown in Table 1, the target populations can be broadly classified as either the general population or a disease/condition-specific population. All included studies analyzed a sample drawn from the target population. Most articles (59/79; 74.7%) targeted populations with specific diseases or conditions:
- cardiometabolic diseases (7/79; 8.9%);
- diabetes (8/79; 10.1%);
- epilepsy (2/79; 2.5%);
- COVID-19 (4/79; 5.1%);
- ICU admissions (2/79; 2.5%);
- emergency care (3/79; 3.8%);
- oral health (2/79; 2.5%);
- nervous system diseases (3/79; 3.8%);
- mental health (8/79; 10.1%);
- hospitalization (3/79; 3.8%);
- respiratory diseases (2/79; 2.5%);
- infectious diseases (3/79; 3.8%);
- multimorbidity (4/79; 5.1%);
- health-services usage (2/79; 2.5%);
- pregnancy (2/79; 2.5%);
- other conditions (4/79; 5.1%).

The remaining papers (20/79; 25.3%) targeted the general population.14 Some health conditions were not represented in this review, such as unintentional injuries, stroke, kidney disease, and ophthalmic conditions. Applying segmentation analysis to these areas could be a future direction for improving the delivery of health plans.

Table 1.

Characteristics of the target population subjected to data-driven segmentation

| Population selection (out of 79 papers) | Number of papers | List of papers |
|---|---|---|
| Without specific diseases/conditions | 20 | 11, 14, 16–33 |
| With specific diseases/conditions | 59 | |
| Diabetes | 8 | 34–40 |
| Mental health | 8 | 41–48 |
| Cardiometabolic | 7 | 34–40 |
| Multimorbidity | 4 | 49–52 |
| COVID-19 | 4 | 53–56 |
| Infectious diseases | 3 | 57–60 |
| Emergency | 3 | 61–63 |
| Hospitalization | 3 | 64–66 |
| Nervous systems | 3 | 15, 67, 68 |
| Epilepsy | 2 | 69, 70 |
| ICU patients | 2 | 71, 72 |
| Respiratory diseases | 2 | 73, 74 |
| Oral health | 2 | 75, 76 |
| Pregnancy | 2 | 77, 78 |
| Health service usage | 2 | 79, 80 |
| Others | 4 | 81–84 |

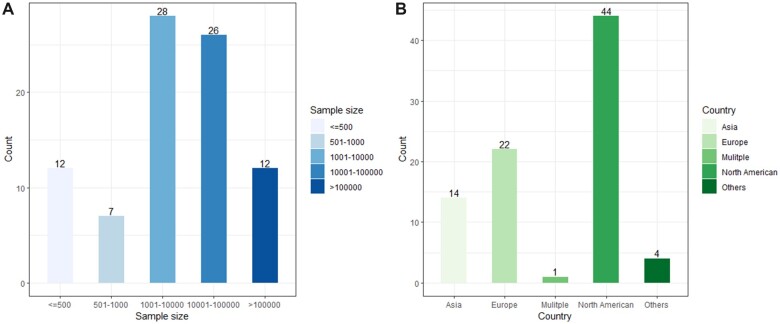

Sample sizes across the 85 studies are shown in Figure 2. The smallest sample was 51, in the study by Galvez-Goicurla et al, who used a Logistic Model Tree algorithm to classify migraine patients into 4 clusters of pain types with distinct morphological features.15 The largest study involved 1 608 741 patients from a diverse hospital cohort.16 As for study locations (see Figure 2), the vast majority of studies were carried out in North America and Europe. The United States contributed the most studies (40/85; 47.1%).

Figure 2.

Population studied. (A) Number of studies that used the various sample sizes (out of 85 studies). (B) Number of studies that used the different countries’ datasets (out of 85 studies).

Segmentation variables, data sources, and settings

The variables used for segmentation varied with the purpose of each study. They included sociodemographic characteristics, vital signs, pharmacy data, care utilization, psychosocial factors, behavioral factors, and laboratory tests. Supplementary Table S3 details the segmentation variables. Depending on the study design (cross-sectional or longitudinal), clustering was either static or longitudinal. For example, Wong et al identified latent subgroups of patients at high risk of hospitalization using trajectories of hospitalization probability.64 These trajectories were derived from demographic, clinical, vital-sign, utilization, pharmacy, and laboratory data. In addition to EHR data, Benis et al analyzed documented access to various communication channels within the health organization.85 Their aim was to determine which channels patients preferred and could be used to deliver patient-specific messages. Nearly all studies that identified clinical subtypes of a disease used sociodemographic data.53,64,71,86 Diagnosis codes and procedural patterns were also used to derive patient representations and subtypes.21,23,87

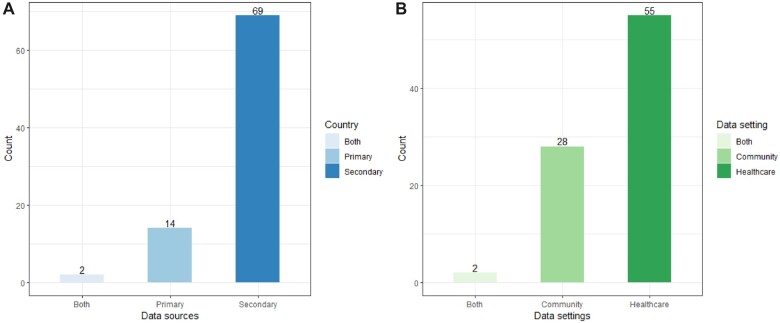

As shown in Figure 3, most studies (69/85; 81.2%) used secondary data from earlier studies or third-party sources. One example is the National Health and Nutrition Examination Survey, which was designed to evaluate the general health and nutritional status of the US population and its demographic groups. It includes medical, dental, and physiological measurements, as well as laboratory tests administered by highly trained medical personnel. Similarly, Bej et al used the National Family Health Survey-4 dataset from India to examine the type 2 diabetes subpopulation.88 Conversely, only 14 studies (14/85; 16.5%) used primary data for population segmentation. For instance, researchers obtained real-time pain evolution data through a mobile application to analyze pain episodes in chronic diseases.15

Figure 3.

(A) Number of studies that used primary or secondary data sources (out of 85 studies). (B) Number of studies that were conducted in different settings (out of 85 studies).

This review classified study settings into 2 categories: healthcare institutions and communities. Most studies (55/85; 64.7%) were set in healthcare institutions (see Figure 3). For example, Rodríguez et al analyzed data from adult patients with confirmed SARS-CoV-2 infection admitted to 63 ICUs across Spain.54 Other studies were conducted in communities. For example, Hu used data from the Chinese Longitudinal Healthy Longevity Survey, which collected data on aging and caregiving from a representative sample of the oldest old in 23 Chinese provinces.18

Objectives of segmentation

Across the included studies, we identified 3 primary goals of population segmentation: (1) clinical prediction, (2) health grouping/profiling, and (3) delivery of healthcare interventions. These themes overlap and are not mutually exclusive. All included articles (n = 79) centered on health grouping/profiling. Most (n = 52) also aimed to deliver healthcare interventions tailored to each segment, and 25 aimed to conduct clinical prediction to identify related risk factors. Overall, segmentation aims to categorize individuals by the type and frequency of care they need. This enables clinical care, health interventions, and policies tailored to their health factors. It differs from predicting risk scores for specific outcomes, such as hospitalization or monthly costs, as in the Johns Hopkins Adjusted Clinical Groups system.89

Segmentation methods

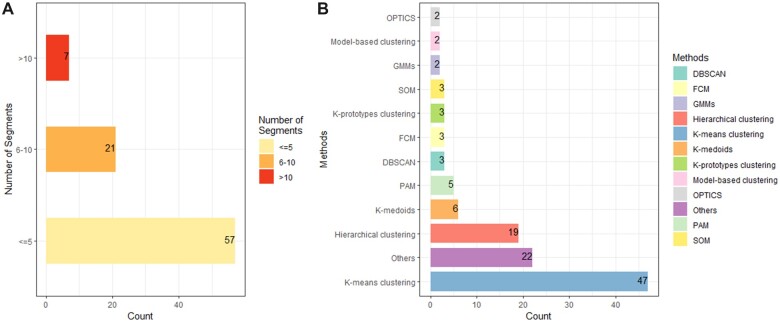

Given the goal of population segmentation, we focused only on unsupervised methods, which analyze and cluster unlabeled data. Figure 4 shows that among the 117 methods, k-means cluster analysis (47/117; 40.2%) was the most widely used. For example, Flores et al applied the k-means clustering algorithm to automatically detect coronary artery disease subgroups.34 Some clustering analyses used k-medoids, a variant of k-means that works well with ordinal-scale variables.23,34,37,57 Some segmentation analyses combined techniques, since the methods are not mutually exclusive. To illustrate, Benis et al first used k-means clustering for discretization and patient profile construction.85 They then used hierarchical clustering to show the various communication profiles with the healthcare organization. To handle more complex data, some studies also applied deep learning. Thomas et al proposed a new real-time tool that uses deep autoencoders to visualize high-dimensional data and expose previously undisclosed relationships and clusters.73
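Because k-means was the most common method, the minimal sketch below shows Lloyd’s algorithm, the standard k-means procedure, in dependency-free Python. It assumes the segmentation variables are numeric and already standardized. The farthest-first seeding is a choice made here to keep the example deterministic; library implementations typically use randomized k-means++ seeding with several restarts. The patient records are hypothetical.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two equal-length tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def mean_point(points):
    """Coordinate-wise mean of a non-empty list of tuples."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))


def farthest_first_init(points, k):
    """Deterministic seeding: take the first point, then repeatedly add the point
    farthest from all centroids chosen so far."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(squared_distance(p, c) for c in centroids))
        )
    return centroids


def k_means(points, k, max_iter=100):
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    centroids = farthest_first_init(points, k)
    labels = None
    for _ in range(max_iter):
        # Assignment step: each individual joins the segment with the nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c])) for p in points
        ]
        if new_labels == labels:
            break  # no individual changed segment: converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        updated = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            updated.append(mean_point(members) if members else centroids[c])
        centroids = updated
    return labels, centroids


# Hypothetical standardized features (e.g., age and yearly visits) for 6 patients.
patients = [(1.0, 1.1), (1.2, 0.9), (0.9, 1.0), (5.0, 5.2), (5.1, 4.9), (4.8, 5.0)]
labels, centroids = k_means(patients, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

The algorithm only ever decreases the within-segment sum of squared distances, so it converges. It may converge to a local optimum, however, which is why seeding and restarts matter in practice.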

Figure 4.

(A) Number of studies by number of derived segments (out of 85 studies). (B) Types of methods used to conduct population segmentation (out of 117 methods). OPTICS: ordering points to identify the clustering structure; GMMs: Gaussian mixture models; SOM: self-organizing map; FCM: fuzzy c-means; DBSCAN: density-based spatial clustering of applications with noise; PAM: partitioning around medoids.

Other clustering algorithms included:
- deep autoencoders;73
- clustering large applications;61
- mixtures of multivariate generalized linear mixed models;82
- uniform manifold approximation and projection (UMAP);67
- logistic model trees;15
- latent trajectory analysis;18
- Gaussian mixture models;63
- 1-layer convolutional neural networks;16
- hypergraph network analysis with eigenvector centralities;75
- the composite mixture model (CMM);65
- collaborative modeling.46

Dimension reduction methods were sometimes used together with clustering. For example, self-organizing maps (SOMs) were used to reduce a high-dimensional dataset to a lower-dimensional one while preserving its topological structure. Dipnall et al adopted SOM after addressing the imbalanced nature of their data, which they cited as the primary rationale for using SOM.41 UMAP was another method found in this review for nonlinear dimensionality reduction. Hurley et al first applied UMAP, which can capture both local and global structure in high-dimensional data, to reduce dimensionality.63 They then used Gaussian mixture models to cluster the data.
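The minimal sketch below fits a 1-dimensional, 2-component Gaussian mixture model by expectation-maximization (EM) in plain Python, to show how this method differs from k-means. It illustrates the technique under stated assumptions and does not reproduce the pipeline of Hurley et al:
- the single feature stands in for a low-dimensional embedding (UMAP itself is not implemented);
- the starting means are supplied by hand;
- the data are hypothetical.

Unlike k-means, a Gaussian mixture gives each patient a probability (responsibility) of belonging to each segment.

```python
import math


def normal_pdf(x, mean, var):
    """Density of a univariate normal distribution."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def fit_gmm_1d(xs, init_means, n_iter=200, min_var=1e-6):
    """Fit a 1-D Gaussian mixture by EM; returns weights, means, variances, responsibilities."""
    k, n = len(init_means), len(xs)
    means = list(init_means)
    grand_mean = sum(xs) / n
    variances = [sum((x - grand_mean) ** 2 for x in xs) / n] * k  # start from pooled variance
    weights = [1.0 / k] * k
    resp = []
    for _ in range(n_iter):
        # E-step: posterior probability that each component generated each point.
        resp = []
        for x in xs:
            dens = [w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(dens)
            resp.append([d / total for d in dens] if total > 0 else [1.0 / k] * k)
        # M-step: re-estimate mixing weights, means, and variances.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            if nj == 0:
                continue  # component captured no points; leave its parameters unchanged
            weights[j] = nj / n
            means[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            variances[j] = max(
                min_var, sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, xs)) / nj
            )
    return weights, means, variances, resp


# Hypothetical 1-D scores for 10 patients, forming two well-separated groups.
scores = [1.0, 1.2, 0.8, 1.1, 0.9, 5.0, 5.2, 4.8, 5.1, 4.9]
weights, means, variances, resp = fit_gmm_1d(scores, init_means=[0.0, 6.0])
print([round(m, 2) for m in means])
print([round(p, 3) for p in resp[0]])  # membership probabilities for the first patient
```

With well-separated groups, the responsibilities are close to 0 or 1, and the result resembles k-means. With overlapping groups, the soft memberships show which patients lie between segments.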

Segmentation outcome

Each study’s segmentation outcome was evaluated and summarized in Supplementary Table S4 against 3 criteria:
- internal validity: the degree to which the clustering solution accurately reflects the underlying structure of the data,90 assessed through measures such as cluster stability;
- external validity: the extent to which the results generalize to other populations or contexts, assessed by comparing the results with existing theory or checking consistency across datasets;
- interpretability: whether segments are easily recognized and understood.3

Figure 5 summarizes the number of articles meeting each evaluation criterion. All papers (n = 79) performed internal validation of the segmentation analysis. For example, Hurley et al used the adjusted Rand index to assess the stability of the clusters identified by Gaussian mixture models.63 However, only a few papers (11/79; 13.9%) incorporated external validation. For instance, Faghri et al used a replication cohort to validate their clusters externally.67 In addition, all papers (n = 79) interpreted the segmentation outcomes using related analyses (eg, regression or Student’s t tests) or clinical experts’ viewpoints.

For the number of segments (see Figure 4), we counted only the best-performing solution in each study. Most studies (79/85; 92.9%) produced fewer than 10 segments.22 Various methods were used to decide the number of segments, including the Calinski-Harabasz criterion, elbow method, C index, silhouette coefficient, clustering cohesion index, dendrogram, Akaike information criterion, Bayesian information criterion, cross-validation analysis, Dunn index, stability index, Jaccard score, jump method, Davies-Bouldin index, Ward’s minimum variance method, and expert viewpoints.
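The silhouette coefficient, one of the criteria listed above, is simple to state. For each individual, s = (b - a) / max(a, b), where:
- a is the mean distance to members of its own segment;
- b is the smallest mean distance to members of any other segment.

The minimal sketch below scores 2 candidate segmentations of the same individuals, using plain Python, hypothetical 1-variable data, and Euclidean distance (an assumption). Under this criterion, the candidate with the higher mean silhouette would be preferred.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points (singleton clusters score 0)."""
    clusters = set(labels)
    if len(clusters) < 2:
        raise ValueError("the silhouette needs at least two clusters")
    scores = []
    for i, (p, own) in enumerate(zip(points, labels)):
        same = [
            math.dist(p, q)
            for j, (q, lab) in enumerate(zip(points, labels))
            if lab == own and j != i
        ]
        if not same:
            scores.append(0.0)  # convention for a point alone in its cluster
            continue
        a = sum(same) / len(same)
        b = min(
            sum(math.dist(p, q) for q, lab in zip(points, labels) if lab == other)
            / labels.count(other)
            for other in clusters
            if other != own
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


# Hypothetical standardized scores for 6 individuals.
points = [(1.0,), (1.2,), (0.8,), (5.0,), (5.2,), (4.8,)]
two_segments = [0, 0, 0, 1, 1, 1]
three_segments = [0, 0, 1, 2, 2, 2]  # splits the first group
print(round(silhouette_score(points, two_segments), 3))
print(round(silhouette_score(points, three_segments), 3))
```

Here the 2-segment solution scores higher because splitting a compact group leaves some individuals almost as close to a neighboring segment as to their own.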

Figure 5.

Number of articles that used each type of outcome validation (out of 79 articles).

Methods for missing values

Forty-eight articles (48/79; 60.8%) did not describe how missing values were handled. Another 14 (14/79; 17.7%) excluded all records with missing values and analyzed complete cases only. The remaining papers mainly used imputation, including:
- filling with subgroup-level mean values;64
- the regularized iterative principal component analysis (PCA) algorithm17 for variables with less than 33% missingness;
- k-nearest neighbor imputation;67
- generalized low-rank models;34
- mean-value imputation;91,92
- a sensitivity analysis replacing missing values with positive values;75
- genotype imputation;42
- CMM;65
- a separate variable counting the number of missing variables in the final variable set;66
- median-value replacement;35
- the MissForest imputation method.23

Given this range of approaches, future studies should choose how to handle missingness carefully according to data type and structure, so as to retain as much information as possible.
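Several of the strategies listed above can be sketched in a few lines. The minimal illustration below assumes a small numeric matrix with None marking missing values and shows 3 strategies:
- column mean or median filling;
- a simple k-nearest-neighbor imputation;
- a per-record count of missing values used as an extra variable.

The kNN distance rule (root-mean-square difference over variables observed in both records) and k = 2 are assumptions made here, not the settings of any cited study. Regularized iterative PCA and MissForest are not reproduced.

```python
from statistics import mean, median


def simple_impute(rows, stat=mean):
    """Replace each missing value (None) with a statistic of that column's observed values.
    Assumes every column has at least one observed value."""
    n_cols = len(rows[0])
    fills = [stat([r[j] for r in rows if r[j] is not None]) for j in range(n_cols)]
    return [[fills[j] if v is None else v for j, v in enumerate(r)] for r in rows]


def knn_impute(rows, k=2):
    """Fill each missing value with the mean of that variable in the k most similar rows.
    Similarity uses root-mean-square difference over variables observed in both rows."""

    def distance(a, b):
        shared = [(x, y) for x, y in zip(a, b) if x is not None and y is not None]
        if not shared:
            return float("inf")  # no overlap: treat as maximally distant
        return (sum((x - y) ** 2 for x, y in shared) / len(shared)) ** 0.5

    completed = []
    for i, row in enumerate(rows):
        filled = list(row)
        for j, value in enumerate(row):
            if value is None:
                donors = sorted(
                    (distance(row, other), other[j])
                    for t, other in enumerate(rows)
                    if t != i and other[j] is not None
                )[:k]
                filled[j] = sum(v for _, v in donors) / len(donors)
        completed.append(filled)
    return completed


def missing_count(rows):
    """Extra variable recording how many values are missing in each row."""
    return [sum(v is None for v in r) for r in rows]


# Hypothetical records: [number of chronic conditions, annual outpatient visits].
records = [[1.0, 10.0], [2.0, None], [3.0, 30.0], [None, 40.0], [10.0, 100.0]]
print(simple_impute(records))          # column means fill the gaps
print(simple_impute(records, median))  # column medians fill the gaps
print(knn_impute(records, k=2))
print(missing_count(records))          # [0, 1, 0, 1, 0]
```

In this example, the mean fill for the first column (4.0) is pulled upward by one extreme record, while the median (2.5) and the kNN estimate (2.0) stay closer to similar patients. This is one reason the choice should depend on the data’s distribution and structure.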

DISCUSSION

This review draws attention to previous and current research on ML-based segmentation. Numerous approaches have been considered, and population segmentation is frequently used in clinical contexts. Compared with the previous review by Yan et al,5 we included many more recent studies, narrowed the scope of methods to ML, and included only unsupervised modeling. Beyond the aspects summarized in that review, we also investigated how many studies conducted method comparisons, which are necessary to verify the validity of a method.

Proof of the validity of methods

Nearly all papers concluded that the ML algorithms they used performed well in segmentation and were valuable for personalized treatment plans. However, fewer than 30% (23/79) compared different methods. To discover underlying patterns in early childhood dental care, Peng et al76 compared k-means clustering with a traditional segmentation analysis that calculated per-member-per-year dental costs by gender. The k-means clusters performed better than random clustering and provided more detail than the traditional segmentation. Other studies compared several unsupervised methods. For example, Bose and Radhakrishnan36 compared hierarchical clustering, k-means clustering, and partitioning around medoids to identify subgroups among home health patients with heart failure.
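When 2 methods are applied to the same individuals, as in the comparisons above, their partitions can be compared with the adjusted Rand index (ARI). This is the same statistic Hurley et al used to assess stability. A minimal sketch in plain Python follows. The cluster labels are hypothetical outputs of 3 methods. The ARI ignores label names, so a relabeled but otherwise identical partition scores 1.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_a, labels_b):
    """Pair-counting agreement between two partitions, adjusted for chance
    (about 0 for random labelings, 1 for identical partitions)."""
    if len(labels_a) != len(labels_b):
        raise ValueError("both partitions must cover the same individuals")
    n = len(labels_a)
    # Pairs placed together by both methods, by method A, and by method B.
    together_both = sum(comb(c, 2) for c in Counter(zip(labels_a, labels_b)).values())
    together_a = sum(comb(c, 2) for c in Counter(labels_a).values())
    together_b = sum(comb(c, 2) for c in Counter(labels_b).values())
    expected = together_a * together_b / comb(n, 2)
    maximum = (together_a + together_b) / 2
    if maximum == expected:
        return 1.0  # degenerate case, e.g. both methods put everyone in one cluster
    return (together_both - expected) / (maximum - expected)


# Hypothetical segment labels for the same 6 patients from three methods.
kmeans_labels = [0, 0, 0, 1, 1, 1]
hierarchical_labels = [1, 1, 1, 0, 0, 0]  # same grouping, different label names
pam_labels = [0, 0, 1, 1, 1, 1]           # one patient moved
print(adjusted_rand_index(kmeans_labels, hierarchical_labels))  # 1.0
print(round(adjusted_rand_index(kmeans_labels, pam_labels), 3))
```

Moving a single patient lowers the ARI sharply in this small example. The ARI summarizes agreement over the whole partition but does not say which individuals changed segment.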

Because unsupervised learning works on unlabeled data, its results cannot be checked against known labels and may be less accurate. Some studies therefore also incorporated supervised or semisupervised learning and compared the results. Faghri et al applied unsupervised UMAP modeling and semisupervised (neural network UMAP) modeling to identify amyotrophic lateral sclerosis clinical subtypes.67 Both models identified the same subgroups, but based on visual inspection, the authors found that semisupervised ML produced the best results. However, not all studies found that supervised methods outperformed unsupervised methods. Goodman et al used both unsupervised and supervised clustering algorithms to identify subgroups of frequent emergency department users.62 The supervised algorithms could not group patients into clusters, whereas unsupervised modeling formed distinct clusters.

Future studies should provide more evidence of the strengths of applying ML to segmentation, especially compared with traditional segmentation analysis or gold standards. We also observed a lack of rigorous evaluation of segmentation methods: most studies merely used standard metrics to assess internal validity. We therefore emphasize the need to examine segmentation models further through both internal and external validation.

Limitations of methods

In the reviewed studies, researchers chose the segmentation methods they considered most suitable for their data characteristics and variables, but no method is perfect. About half of these studies discussed the limitations of their chosen methods. Reported limitations included:
- potentially informative missingness with the k-means clustering algorithm;64
- the growing cost of the SOM algorithm as the number of variables and the grid size increase, and the unsuitability of SOM for diagnostic modeling.22

Furthermore, incomplete data and outliers posed challenges for SOMs,41 whereas fuzzy c-means50 and k-median71 clustering were found to be less susceptible to outliers.
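Medoid-based methods are often described as less sensitive to outliers than k-means, because each cluster center must be an actual member rather than a mean. A closely related idea underlies median-based variants such as k-median.

The sketch below is a minimal illustration of that point, not the implementation used in the cited studies. It makes the following assumptions:
- it uses the simple alternating (Voronoi-iteration) form of k-medoids rather than the full PAM swap search;
- it uses Manhattan distance;
- the starting medoids are hand-picked;
- the data are hypothetical and contain one extreme record.

```python
def manhattan(p, q):
    """City-block distance; k-medoids accepts any dissimilarity, not only Euclidean."""
    return sum(abs(a - b) for a, b in zip(p, q))


def k_medoids(points, initial_medoids, dist=manhattan, max_iter=100):
    """Alternating k-medoids: assign points to the nearest medoid, then replace each
    medoid by the member with the smallest total distance to its cluster."""
    medoids = list(initial_medoids)

    def assign(current):
        return [min(range(len(current)), key=lambda c: dist(p, current[c])) for p in points]

    for _ in range(max_iter):
        labels = assign(medoids)
        new_medoids = []
        for c, old in enumerate(medoids):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if not members:
                new_medoids.append(old)  # keep an empty cluster's medoid
                continue
            new_medoids.append(min(members, key=lambda m: sum(dist(m, q) for q in members)))
        if new_medoids == medoids:
            break
        medoids = new_medoids
    return assign(medoids), medoids


# Hypothetical 2-variable records; (40, 40) is an extreme outlier.
points = [(1, 1), (2, 1), (1, 2), (8, 8), (8, 8), (9, 8), (8, 9), (40, 40)]
labels, medoids = k_medoids(points, initial_medoids=[points[0], points[3]])
outlier_cluster = [p for p, lab in zip(points, labels) if lab == 1]
cluster_mean = tuple(sum(c) / len(outlier_cluster) for c in zip(*outlier_cluster))
print(medoids)       # [(1, 1), (8, 8)]
print(cluster_mean)  # (14.6, 14.6)
```

The medoid of the cluster containing the outlier stays at (8, 8), in the bulk of the data. The cluster mean, which is what k-means would use as the center, is pulled to (14.6, 14.6).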

The temporal stability of identified clusters emerged as another concern, particularly in studies using unsupervised ML approaches like the mixture of multivariate generalized linear mixed models.82 Future research could involve phenotyping investigations that assess chronotype stability associated with each phenotype.

Lastly, some newly designed methods faced practical issues. For example, the CMM proposed by Mayhew et al is computationally intensive.65 It also requires users to manually specify model templates or lists of univariate distributions in high-dimensional feature spaces. Addressing these limitations and refining existing algorithms remain active topics of research.

Postsegmentation analysis

Only one-third of the papers in this review conducted postsegmentation analysis. The rest only described the profiles and characteristics of the segments and made suggestions for the individualized delivery of interventions, without real-life clinical applications. How to make valuable use of these segments is therefore another important question for future research.

Among the articles with postsegmentation analysis, most aimed to compare characteristics between clusters and to characterize subgroups. Dipnall et al41 applied a Multiple Additive Regression Tree boosted ML algorithm to identify the important medical symptoms for each key cluster. They then used binary logistic regression to identify the key clusters with higher levels of depression. Another common approach is to use the clusters as labels for subsequent prediction with supervised ML. Faghri et al67 used supervised ML to build predictive models that classify individual patients, and their segmentation results were also used to develop a web-based tool for clinical use. Landi et al16 used disease subtypes as labels for training supervised models that predict stratified patient risk scores. In addition, segments were used to describe patients’ migration patterns across 3 periods and to classify disease trajectories.35 We advocate for disseminating current methods and methodological advances in “postsegmentation” inferential and predictive modeling research.
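Using segments as labels for later prediction can be sketched with one of the simplest supervised models, a nearest-centroid classifier. The classifier is trained on the segment labels and then assigns new patients to an existing segment. This is only an illustration: the included studies used other supervised models, and the segment names and records here are hypothetical.

```python
import math
from collections import defaultdict


def fit_nearest_centroid(features, segment_labels):
    """Learn one centroid per segment from records already labeled by a segmentation."""
    grouped = defaultdict(list)
    for x, label in zip(features, segment_labels):
        grouped[label].append(x)
    return {
        label: tuple(sum(col) / len(rows) for col in zip(*rows))
        for label, rows in grouped.items()
    }


def predict_segment(centroids, x):
    """Assign a new record to the segment whose centroid is closest (Euclidean)."""
    return min(centroids, key=lambda label: math.dist(x, centroids[label]))


# Hypothetical standardized features (age, yearly admissions) with segment labels
# produced earlier by an unsupervised method.
train_x = [(0.1, 0.2), (0.0, -0.1), (-0.2, 0.1), (1.5, 2.0), (1.8, 2.2), (1.6, 1.9)]
train_y = ["low-need"] * 3 + ["high-need"] * 3
model = fit_nearest_centroid(train_x, train_y)
print(predict_segment(model, (1.4, 1.7)))  # high-need
print(predict_segment(model, (0.2, 0.0)))  # low-need
```

A fitted model of this kind lets new patients be routed to an existing segment without rerunning the clustering. Its accuracy, however, is bounded by how well separated the original segments are.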

Sensitivity analysis for segmentation

Only a few studies in this review conducted sensitivity analyses of the segmentation to increase confidence in their results. For missing values, Larvin et al75 examined the impact of absent survey responses and osteoporosis data by comparing the results with the affected survey cycle excluded. To assess clustering quality, Peng et al76 used the silhouette score and the Calinski-Harabasz score to compare their clustering with a random clustering. Sangkaew et al57 explored the effect of using the Gower distance in place of the Euclidean distance. In addition, Violán et al50 tested different prevalence cut-off points for chronic diseases.
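The sensitivity analysis by Sangkaew et al swapped Euclidean for Gower distance. The sketch below is a minimal, unweighted version of Gower’s coefficient, with these assumptions:
- numeric variables are scaled by their observed range;
- categorical variables use simple matching;
- values missing in either record are skipped.

The records are hypothetical. They show why the choice can matter: Euclidean distance on the numeric columns and Gower distance on all columns disagree about which record is closest to the first one.

```python
import math


def numeric_ranges(records, kinds):
    """Observed range of each numeric variable (None for categorical ones)."""
    ranges = []
    for j, kind in enumerate(kinds):
        if kind == "num":
            values = [r[j] for r in records if r[j] is not None]
            ranges.append(max(values) - min(values))
        else:
            ranges.append(None)
    return ranges


def gower_distance(a, b, kinds, ranges):
    """Unweighted Gower distance for mixed numeric/categorical records.
    Numeric variables contribute |x - y| / range, categorical ones 0 (match) or 1
    (mismatch); variables missing in either record are skipped."""
    total, used = 0.0, 0
    for x, y, kind, rng in zip(a, b, kinds, ranges):
        if x is None or y is None:
            continue
        if kind == "num":
            total += abs(x - y) / rng if rng else 0.0
        else:
            total += 0.0 if x == y else 1.0
        used += 1
    return total / used if used else float("nan")


# Hypothetical records: (age in years, number of chronic conditions, sex).
records = [(70, 3, "F"), (72, 0, "M"), (60, 3, "F"), (30, 4, "M")]
kinds = ["num", "num", "cat"]
ranges = numeric_ranges(records, kinds)  # [42, 4, None]

for i, j in [(0, 1), (0, 2)]:
    gower = gower_distance(records[i], records[j], kinds, ranges)
    # Euclidean distance here uses only the numeric columns, on their raw scales.
    euclid = math.dist(records[i][:2], records[j][:2])
    print(i, j, round(gower, 3), round(euclid, 2))
```

On raw scales, record 1 looks closest to record 0 because age differs by only 2 years. Gower distance instead ranks record 2 closest, because it rescales the condition count and counts the sex mismatch.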

Future direction

As shown in this review, applying different segmentation algorithms to the same population may produce different clusters. Some papers checked the similarity of clusters derived by different methods, but only 4 papers in our review examined whether the same individual was assigned consistently across methods:
- One study checked each individual’s consistency with the original clusters using scatterplots.68
- Landi et al16 compared their type 2 diabetes and Parkinson’s disease subgroups with those of related studies based on ad hoc cohorts.
- Stafford et al49 checked agreement with the literature.
- Vidal et al58 used laboratory testing to check concordance.

Future research could focus more on whether the same person is assigned to consistent clusters by different methods. This is a major concern for clinicians, because different cluster assignments would lead to different intervention plans or treatments. Moreover, different clustering algorithms and threshold values can produce distinct clusters and migration patterns.35 When an individual is assigned to different clusters by different models, how to reconcile the assignments of such ambiguous individuals is another question that deserves attention. Derived segments should therefore be interpreted and named with caution, and well-established criteria for optimal segmentation are also needed.
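One way to measure individual-level consistency is sketched below. This is an illustration, not a procedure from the included studies, and it rests on simplifying assumptions:
- both methods return the same number of clusters;
- method B’s labels are aligned to method A’s by trying every one-to-one mapping and keeping the one with the most agreements. This is feasible only for a few clusters; the Hungarian algorithm scales better;
- individuals whose aligned labels still differ are flagged for review.

The patient IDs and labels are hypothetical.

```python
from itertools import permutations


def align_labels(labels_a, labels_b):
    """Rename method B's clusters to best match method A's (brute force over mappings)."""
    clusters_a = sorted(set(labels_a))
    clusters_b = sorted(set(labels_b))
    if len(clusters_a) != len(clusters_b):
        raise ValueError("this sketch assumes both methods give the same number of clusters")
    best_mapping, best_matches = None, -1
    for perm in permutations(clusters_a):
        mapping = dict(zip(clusters_b, perm))
        matches = sum(mapping[b] == a for a, b in zip(labels_a, labels_b))
        if matches > best_matches:
            best_mapping, best_matches = mapping, matches
    return [best_mapping[b] for b in labels_b]


def inconsistent_individuals(ids, labels_a, labels_b):
    """IDs of individuals assigned to different segments by the two methods."""
    aligned_b = align_labels(labels_a, labels_b)
    return [pid for pid, a, b in zip(ids, labels_a, aligned_b) if a != b]


# Hypothetical assignments of 6 patients by two methods with different label names.
patient_ids = ["p1", "p2", "p3", "p4", "p5", "p6"]
kmeans_segments = [0, 0, 0, 1, 1, 1]
hierarchical_segments = ["B", "B", "A", "A", "A", "A"]
flagged = inconsistent_individuals(patient_ids, kmeans_segments, hierarchical_segments)
print(flagged)  # ['p3']
print(1 - len(flagged) / len(patient_ids))  # share assigned consistently
```

Unlike a partition-level index such as the ARI, this yields a list of specific individuals whose segment, and hence possible intervention plan, depends on the method chosen.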

Strengths and limitations

To the best of the authors’ knowledge, this is the first scoping review of data-driven population segmentation analysis using ML. We summarized the commonly used segmentation methods, the evaluation of outcomes, the methods for handling missing values, and the clinical settings in which segmentation analysis was applied. It is also the first study to summarize the subsequent use of the segments, which can provide insights to guide future research. Nonetheless, our study has limitations:
- we reviewed only literature written in English;
- we excluded clustering not performed at the population level and studies using unstructured data;
- we disregarded gray literature;
- we omitted papers published before January 2000.

CONCLUSION

This review summarizes the ML-based segmentation landscape. The large body of literature assessed here shows that there is an existing foundation for data-driven population segmentation. Population clusters can be derived using various segmentation analysis techniques. However, there is a lack of evidence that ML algorithms outperform other methods, including traditional segmentation analysis. Additionally, the quality of segmentation outcomes should be assessed after segmentation analysis, including external validation on other cohorts. Further studies are needed to evaluate whether the same individual is assigned consistently across methods and to construct an optimal framework for assessing segmentation outcomes.

Contributor Information

Pinyan Liu, Centre for Quantitative Medicine, Duke-NUS Medical School, Singapore, Singapore.

Ziwen Wang, Centre for Quantitative Medicine, Duke-NUS Medical School, Singapore, Singapore.

Nan Liu, Centre for Quantitative Medicine, Duke-NUS Medical School, Singapore, Singapore; Programme in Health Services and Systems Research, Duke-NUS Medical School, Singapore, Singapore; Institute of Data Science, National University of Singapore, Singapore, Singapore.

Marco Aurélio Peres, Programme in Health Services and Systems Research, Duke-NUS Medical School, Singapore, Singapore; National Dental Research Institute Singapore, National Dental Centre Singapore, Singapore, Singapore.

FUNDING

This research was supported by Duke-NUS Medical School, Singapore.

AUTHOR CONTRIBUTIONS

PL conceived and designed the study. PL and ZW performed the full-text review and analysis. All authors interpreted the data and results. PL drafted the manuscript. NL and MAP contributed to the conception and design, data interpretation, and critically reviewed the manuscript. All authors revised and approved the final manuscript.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

CONFLICT OF INTEREST STATEMENT

None declared.

DATA AVAILABILITY

The data underlying this article are available in the article and in its online supplementary material.

REFERENCES

1. Zhou YY, Wong W, Li H. Improving care for older adults: a model to segment the senior population. Perm J 2014; 18 (3): 18–21.

2. Banda JM, Seneviratne M, Hernandez-Boussard T, Shah NH. Advances in electronic phenotyping: from rule-based definitions to machine learning models. Annu Rev Biomed Data Sci 2018; 1: 53–68.

3. Alzoubi H, Alzubi R, Ramzan N, West D, Al-Hadhrami T, Alazab M. A review of automatic phenotyping approaches using electronic health records. Electronics 2019; 8 (11): 1235.

4. Xiao C, Choi E, Sun J. Opportunities and challenges in developing deep learning models using electronic health records data: a systematic review. J Am Med Inform Assoc 2018; 25 (10): 1419–28.

5. Yan S, Kwan YH, Tan CS, Thumboo J, Low LL. A systematic review of the clinical application of data-driven population segmentation analysis. BMC Med Res Methodol 2018; 18 (1): 121.

6. Kodner DL, Spreeuwenberg C. Integrated care: meaning, logic, applications, and implications–a discussion paper. Int J Integr Care 2002; 2: e12.

7. Stine NW, Chokshi DA, Gourevitch MN. Improving population health in US cities. JAMA 2013; 309 (5): 449–50.

8. Lynn J, Straube BM, Bell KM, Jencks SF, Kambic RT. Using population segmentation to provide better health care for all: the “Bridges to Health” model. Milbank Q 2007; 85 (2): 185–208.

9. Vuik SI, Mayer EK, Darzi A. Patient segmentation analysis offers significant benefits for integrated care and support. Health Aff (Millwood) 2016; 35 (5): 769–75.

10. Chong JL, Matchar DB. Benefits of population segmentation analysis for developing health policy to promote patient-centred care. Ann Acad Med Singap 2017; 46 (7): 287–9.

11. Chuinsiri N. Unsupervised machine learning identified distinct population clusters based on symptoms of oral pain, psychological distress, and sleep problems. J Int Soc Prev Community Dent 2021; 11 (5): 531–8.

12. Tricco AC, Lillie E, Zarin W, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med 2018; 169 (7): 467–73.

13. Levac D, Colquhoun H, O'Brien KK. Scoping studies: advancing the methodology. Implement Sci 2010; 5: 69.

14. Yuill W, Kunz H. Using machine learning to improve personalised prediction: A data-driven approach to segment and stratify populations for healthcare. Stud Health Technol Inform 2022; 289: 29–32.

15. Galvez-Goicurla J, Pagan J, Gago-Veiga AB, Moya JM, Ayala JL. Cluster-then-classify methodology for the identification of pain episodes in chronic diseases. IEEE J Biomed Health Inform 2022; 26 (5): 2339–50.

16. Landi I, Glicksberg BS, Lee H-C, et al. Deep representation learning of electronic health records to unlock patient stratification at scale. NPJ Digit Med 2020; 3 (1): 11.

17. Cleret de Langavant L, Bayen E, Bachoud-Lévi A-C, Yaffe K. Approximating dementia prevalence in population-based surveys of aging worldwide: an unsupervised machine learning approach. Alzheimers Dement (N Y) 2020; 6 (1): e12074.

18. Hu B. Trajectories of informal care intensity among the oldest-old Chinese. Soc Sci Med 2020; 266: 113338.

19. Kwon Y-J, Kim HS, Jung D-H, Kim J-K. Cluster analysis of nutritional factors associated with low muscle mass index in middle-aged and older adults. Clin Nutr 2020; 39 (11): 3369–76.

20. McConnell MV, Shcherbina A, Pavlovic A, et al. Feasibility of obtaining measures of lifestyle from a smartphone app: the MyHeart Counts Cardiovascular Health Study. JAMA Cardiol 2017; 2 (1): 67–76.

21. Mullin S, Elkin P. Assessing opioid use patient representations and subtypes. Stud Health Technol Inform 2020; 270: 823–7.

22. Mutter S, Casey AE, Zhen S, Shi Z, Mäkinen V-P. Multivariable analysis of nutritional and socio-economic profiles shows differences in incident anemia for Northern and Southern Jiangsu in China. Nutrients 2017; 9 (10): 1153.

23. Yan J, Linn KA, Powers BW, et al. Applying machine learning algorithms to segment high-cost patient populations. J Gen Intern Med 2019; 34 (2): 211–7.

24. Cutumisu M, Southcott J, Lu C. Discovering clusters of support utilization in the Canadian community health survey – mental health. Int J Ment Health Addict 2022. doi:10.1007/s11469-022-00880-4.

25. Farrahi V, Kangas M, Kiviniemi A, Puukka K, Korpelainen R, Jämsä T. Accumulation patterns of sedentary time and breaks and their association with cardiometabolic health markers in adults. Scand J Med Sci Sports 2021; 31 (7): 1489–507.

26. Ghane M, Sullivan-Toole H, DelGiacco AC, Richey JA. Subjective arousal and perceived control clarify heterogeneity in inflammatory and affective outcomes. Brain Behav Immun Health 2021; 18: 100341.

27. Ghassib IH, Batarseh FA, Wang H-L, Borgnakke WS. Clustering by periodontitis-associated factors: a novel application to NHANES data. J Periodontol 2021; 92 (8): 1136–50.

28. Granda Morales LF, Valdiviezo-Diaz P, Reátegui R, Barba-Guaman L. Drug recommendation system for diabetes using a collaborative filtering and clustering approach: development and performance evaluation. J Med Internet Res 2022; 24 (7): e37233.

29. Nnoaham KE, Cann KF. Can cluster analyses of linked healthcare data identify unique population segments in a general practice-registered population? BMC Public Health 2020; 20 (1): 798.

30. Shahrbanian S, Duquette P, Kuspinar A, Mayo NE. Contribution of symptom clusters to multiple sclerosis consequences. Qual Life Res 2015; 24 (3): 617–29.

31. Sousa P, Oliveira A, Gomes M, Gaio AR, Duarte R. Longitudinal clustering of tuberculosis incidence and predictors for the time profiles: the impact of HIV. Int J Tuberc Lung Dis 2016; 20 (8): 1027–32.

32. Sprague NL, Rundle AG, Ekenga CC. The COVID-19 pandemic as a threat multiplier for childhood health disparities: evidence from St. Louis, MO. J Urban Health 2022; 99 (2): 208–17.

33. Vuik SI, Mayer E, Darzi A. A quantitative evidence base for population health: applying utilization-based cluster analysis to segment a patient population. Popul Health Metr 2016; 14: 44.

34. Flores AM, Schuler A, Eberhard AV, et al. Unsupervised learning for automated detection of coronary artery disease subgroups. J Am Heart Assoc 2021; 10 (23): e021976.

35. Roni R-G, Tsipi H, Ofir B-A, Nir S, Robert K. Disease evolution and risk-based disease trajectories in congestive heart failure patients. J Biomed Inform 2022; 125: 103949.

36. Bose E, Radhakrishnan K. Using unsupervised machine learning to identify subgroups among home health patients with heart failure using telehealth. Comput Inform Nurs 2018; 36 (5): 242–8.

37. Sweatt AJ, Hedlin HK, Balasubramanian V, et al. Discovery of distinct immune phenotypes using machine learning in pulmonary arterial hypertension. Circ Res 2019; 124 (6): 904–19.

38. Tsoi KKF, Chan NB, Yiu KKL, Poon SKS, Lin B, Ho K. Machine learning clustering for blood pressure variability applied to Systolic Blood Pressure Intervention Trial (SPRINT) and the Hong Kong Community Cohort. Hypertension 2020; 76 (2): 569–76.

39. Ghosh D, Cabrera J, Adam TN, Levounis P. Comorbidity patterns and its impact on health outcomes: two-way clustering analysis. IEEE Trans Big Data 2020; 6 (2): 359–68.

40. Sistani S, Norouzi S, Hassibian M, et al. The discovery of major heart risk factors among young patients with ischemic heart disease using K-means techniques. Int Cardiovasc Res J 2019; 13: 85–90.

41. Dipnall JF, Pasco JA, Berk M, et al. Into the bowels of depression: unravelling medical symptoms associated with depression by applying machine-learning techniques to a community based population sample. PLoS One 2016; 11 (12): e0167055.

42. Liu D, Baskett W, Beversdorf D, Shyu C-R. Exploratory data mining for subgroup cohort discoveries and prioritization. IEEE J Biomed Health Inform 2020; 24 (5): 1456–68.

43. Silva DC, Rabelo-da-Ponte FD, Salati LR, Rodrigues Lobato MI. Heterogeneity in gender dysphoria in a Brazilian sample awaiting gender-affirming surgery: a data-driven analysis. BMC Psychiatry 2022; 22 (1): 79.

44. Freese R, Ott MQ, Rood BA, Reisner SL, Pantalone DW. Distinct coping profiles are associated with mental health differences in transgender and gender nonconforming adults. J Clin Psychol 2018; 74 (1): 136–46.

45. Hamilton JB, Agarwal M, Carter JK, Crandell JL. Coping profiles common to older African American cancer survivors: relationships with quality of life. J Pain Symptom Manage 2011; 41 (1): 79–92.

46. Lin Y, Huang S, Simon GE, Liu S. Analysis of depression trajectory patterns using collaborative learning. Math Biosci 2016; 282: 191–203.

47. Malte CA, Dennis PA, Saxon AJ, et al. Tobacco use trajectories among a large cohort of treated smokers with posttraumatic stress disorder. Addict Behav 2015; 41: 238–46.

48. Mohr PE, Cheng CM, Claxton K, et al. The heterogeneity of schizophrenia in disease states. Schizophr Res 2004; 71 (1): 83–95.

49. Stafford G, Villén N, Roso-Llorach A, Troncoso-Mariño A, Monteagudo M, Violán C. Combined multimorbidity and polypharmacy patterns in the elderly: a cross-sectional study in primary health care. IJERPH 2021; 18 (17): 9216.

50. Violán C, Foguet-Boreu Q, Fernández-Bertolín S, et al. Soft clustering using real-world data for the identification of multimorbidity patterns in an elderly population: cross-sectional study in a Mediterranean population. BMJ Open 2019; 9 (8): e029594.

51. Ioakeim-Skoufa I, Clerencia-Sierra M, Moreno-Juste A, et al. Multimorbidity clusters in the oldest old: results from the EpiChron cohort. IJERPH 2022; 19 (16): 10180.

52. Kshatri JS, Palo SK, Bhoi T, Barik SR, Pati S. Prevalence and patterns of multimorbidity among rural elderly: findings of the AHSETS study. Front Public Health 2020; 8: 582663.

53. Molina-Mora JA, González A, Jiménez-Morgan S, et al. Clinical profiles at the time of diagnosis of SARS-CoV-2 infection in Costa Rica during the pre-vaccination period using a machine learning approach. Phenomics 2022; 2 (5): 312–22.

54. Rodríguez A, Ruiz-Botella M, Martín-Loeches I, et al.; COVID-19 SEMICYUC Working Group. Deploying unsupervised clustering analysis to derive clinical phenotypes and risk factors associated with mortality risk in 2022 critically ill patients with COVID-19 in Spain. Crit Care 2021; 25 (1): 63.

55. Gholipour E, Vizvári B, Babaqi T, Takács S. Statistical analysis of the Hungarian COVID-19 victims. J Med Virol 2021; 93 (12): 6660–70.

56. Kenward C, Pratt A, Creavin S, Wood R, Cooper JA. Population health management to identify and characterise ongoing health need for high-risk individuals shielded from COVID-19: a cross-sectional cohort study. BMJ Open 2020; 10 (9): e041370.

57. Sangkaew S, Tan LK, Ng LC, Ferguson NM, Dorigatti I. Using cluster analysis to reconstruct dengue exposure patterns from cross-sectional serological studies in Singapore. Parasit Vectors 2020; 13 (1): 32.

58. Vidal OM, Acosta-Reyes J, Padilla J, et al. Chikungunya outbreak (2015) in the Colombian Caribbean: latent classes and gender differences in virus infection. PLoS Negl Trop Dis 2020; 14 (6): e0008281.

59. Li C, Wu X, Cheng X, et al. Identification and analysis of vulnerable populations for malaria based on K-prototypes clustering. Environ Res 2019; 176: 108568.

60. Sentís A, Montoro-Fernandez M, Lopez-Corbeto E, et al.; Catalan HIV and STI Surveillance Group. STI epidemic re-emergence, socio-epidemiological clusters characterisation and HIV coinfection in Catalonia, Spain, during 2017–2019: a retrospective population-based cohort study. BMJ Open 2021; 11 (12): e052817.

61. Duwalage KI, Burkett E, White G, Wong A, Thompson MH. Retrospective identification of latent subgroups of emergency department patients: a machine learning approach. Emerg Med Australas 2022; 34 (2): 252–62.

62. Goodman JM, Lamson AL, Hylock RH, Jensen JF, Delbridge TR. Emergency department frequent user subgroups: development of an empirical, theory-grounded definition using population health data and machine learning. Fam Syst Health 2021; 39 (1): 55–65.

63. Hurley NC, Haimovich AD, Taylor RA, Mortazavi BJ. Visualization of emergency department clinical data for interpretable patient phenotyping. Smart Health 2022; 25: 100285.

64. Wong ES, Yoon J, Piegari RI, Rosland A-MM, Fihn SD, Chang ET. Identifying latent subgroups of high-risk patients using risk score trajectories. J Gen Intern Med 2018; 33 (12): 2120–6.

65. Mayhew MB, Petersen BK, Sales AP, Greene JD, Liu VX, Wasson TS. Flexible, cluster-based analysis of the electronic medical record of sepsis with composite mixture models. J Biomed Inform 2018; 78: 33–42.

66. Parikh RB, Linn KA, Yan J, et al. A machine learning approach to identify distinct subgroups of veterans at risk for hospitalization or death using administrative and electronic health record data. PLoS One 2021; 16 (2): e0247203.

67. Faghri F, Brunn F, Dadu A, et al.; ERRALS consortium. Identifying and predicting amyotrophic lateral sclerosis clinical subgroups: a population-based machine-learning study. Lancet Digit Health 2022; 4 (5): e359–69.

68. Goudman L, Rigoard P, Billot M, et al.; Discover Consortium. Spinal cord stimulation-naïve patients vs patients with failed previous experiences with standard spinal cord stimulation: two distinct entities or one population? Neuromodulation 2023; 26 (1): 157–63.

69. Josephson CB, Engbers JDT, Sajobi TT, Wiebe S. Adult onset epilepsy is defined by phenotypic clusters with unique comorbidities and risks of death. Epilepsia 2021; 62 (9): 2036–47.

70. Josephson CB, Engbers JDT, Wang M, et al.; Calgary Comprehensive Epilepsy Program collaborators. Psychosocial profiles and their predictors in epilepsy using patient-reported outcomes and machine learning. Epilepsia 2020; 61 (6): 1201–10.

71. Hyun S, Kaewprag P, Cooper C, Hixon B, Moffatt-Bruce S. Exploration of critical care data by using unsupervised machine learning. Comput Methods Programs Biomed 2020; 194: 105507.

72. Vranas KC, Jopling JK, Sweeney TE, et al. Identifying distinct subgroups of ICU patients: a machine learning approach. Crit Care Med 2017; 45 (10): 1607–15.

73. Thomas SA, Smith NA, Livina V, et al. Analysis of primary care computerized medical records (CMR) data with deep autoencoders (DAE). Front Appl Math Stat 2019; 258: 249–50.

74. Rancière F, Nikasinovic L, Momas I. Dry night cough as a marker of allergy in preschool children: the PARIS birth cohort. Pediatr Allergy Immunol 2013; 24 (2): 131–7.

75. Larvin H, Kang J, Aggarwal VR, Pavitt S, Wu J. Systemic multimorbidity clusters in people with periodontitis. J Dent Res 2022; 101 (11): 1335–42.

76. Peng J, Zeng X, Townsend J, Liu G, Huang Y, Lin S. A machine learning approach to uncovering hidden utilization patterns of early childhood dental care among Medicaid-insured children. Front Public Health 2020; 8: 599187.

77. Pacyga DC, Haggerty DK, Nicol M, et al. Identification of profiles and determinants of maternal pregnancy urinary biomarkers of phthalates and replacements in the Illinois Kids Development Study. Environ Int 2022; 162: 107150.

78. Rosenberg D, Handler A, Furner S. A new method for classifying patterns of prenatal care utilization using cluster analysis. Matern Child Health J 2004; 8 (1): 19–30.

79. Hewlett MM, Raven MC, Graham-Squire D, et al. Cluster analysis of the highest users of medical, behavioral health, and social services in San Francisco. J Gen Intern Med 2023; 38 (5): 1143–51.

80. Soo J, Hoay L, MacCormack-Gelles B, et al. Characterizing multisystem high users of the homeless services, jail, and hospital systems in Chicago, Illinois. J Health Care Poor Underserved 2022; 33 (3): 1612–31.

81. Santos J, Dias C, Dias A. Machine learning and national health data to improve evidence: finding segmentation in individuals without private insurance. Health Policy Technol 2021; 10 (1): 79–86.

82. Ensari I, Caceres BA, Jackman KB, et al. Digital phenotyping of sleep patterns among heterogenous samples of Latinx adults using unsupervised learning. Sleep Med 2021; 85: 211–20.

83. Kruse C, Eiken P, Vestergaard P. Clinical fracture risk evaluated by hierarchical agglomerative clustering. Osteoporos Int 2017; 28 (3): 819–32.

84. Carrillo-Larco RM, Guzman-Vilca WC, Castillo-Cara M, Alvizuri-Gómez C, Alqahtani S, Garcia-Larsen V. Phenotypes of non-alcoholic fatty liver disease (NAFLD) and all-cause mortality: unsupervised machine learning analysis of NHANES III. BMJ Open 2022; 12 (11): e067203.

85. Benis A, Barak Barkan R, Sela T, Harel N. Communication behavior changes between patients with diabetes and healthcare providers over 9 years: retrospective cohort study. J Med Internet Res 2020; 22 (8): e17186.

86. Bello-Chavolla OY, Bahena-López JP, Vargas-Vázquez A, et al.; the Metabolic Syndrome Study Group. Clinical characterization of data-driven diabetes subgroups in Mexicans using a reproducible machine learning approach. BMJ Open Diabetes Res Care 2020; 8 (1): e001550.

87. Chushig-Muzo D, Soguero-Ruiz C, Engelbrecht AP, Bohoyo PDM, Mora-Jiménez I. Data-driven visual characterization of patient health-status using electronic health records and self-organizing maps. IEEE Access 2020; 8: 137019–31.

88. Bej S, Sarkar J, Biswas S, Mitra P, Chakrabarti P, Wolkenhauer O. Identification and epidemiological characterization of type-2 diabetes sub-population using an unsupervised machine learning approach. Nutr Diabetes 2022; 12 (1): 11.

89. Hopkins J. The Johns Hopkins ACG® System. 2018.

90. Milligan GW. Clustering validation: results and implications for applied analyses. Clustering Classif 1996; 341–75.

91. Abul-Husn NS, Kenny EE. Personalized medicine and the power of electronic health records. Cell 2019; 177 (1): 58–69.

92. Franklin JM, Liaw K-L, Iyasu S, Critchlow CW, Dreyer NA. Real-world evidence to support regulatory decision making: new or expanded medical product indications. Pharmacoepidemiol Drug Saf 2021; 30 (6): 685–93.
