Abstract

The high rate of false alarms in intensive care units (ICUs) is one of the top challenges of using medical technology in hospitals. These false alarms are often caused by patients’ movements, detachment of monitoring sensors, or various sources of noise and interference that affect the signals collected from monitoring devices. In this paper, we propose a novel set of high-level features based on an unsupervised feature learning technique to effectively capture the characteristics of different arrhythmias in the electrocardiogram (ECG) signal and differentiate them from signal irregularities caused by disturbances. This technique first extracts a set of low-level features from all heart cycles of a patient and then clusters these segments for each individual patient to provide a set of prominent high-level features. The objective of the clustering phase is to enable the classification method to differentiate between the high-level features extracted from normal and abnormal cycles (i.e., due to either arrhythmia or signal distortion), so that more attention is paid to the features extracted from the abnormal portions of the signal that contribute to the alarm.

Keywords: ECG signal analysis, unsupervised feature learning, false alarm reduction, clustering

I. Introduction

A key objective of the monitoring devices in ICUs is to continuously monitor patients’ heart function to detect any life-threatening arrhythmia. An ECG signal measures the electrical activity of the heart and is an important tool in diagnosing different heart conditions. Despite many well-developed methods to detect abnormal rhythms, ICUs still suffer from high false alarm rates for several reasons, including the complex signal patterns of some arrhythmias, motion artifacts, noise, sensor detachment, and loose threshold settings of the monitoring devices. Several research and clinical studies have aimed at reducing the number of false alarms, ranging from expert systems that define rules based on expert experience to machine learning methods [1]–[3]. A typical challenge of machine learning-based methods is the risk of overfitting and inaccurate performance when dealing with a large number of noisy, redundant, and correlated features extracted from time-series signals or their transform domains (e.g., wavelet). Feature selection and reduction methods attempt to reduce the large set of input features to the most salient ones, but they may discard important features and thereby miss some meaningful patterns in the signals [4]–[6].

One distinction between biomedical signals and other time-series datasets is their periodic or semi-periodic behavior, which typical machine learning approaches do not capture. In this paper, we take advantage of this property and develop an unsupervised feature learning method that creates a set of low-dimensional features for each subject. These features capture important characteristics of the underlying patterns in the high-dimensional time-series input by paying extra attention to the abnormal portions of the signal. In other words, the method constructs a set of higher-level features that better capture the underlying patterns related to different alarm types by processing each patient’s signal segment by segment.

Representation learning (feature learning) is one of the most recent trends in machine learning; it can improve the performance of machine learning methods by automatically discovering features from raw data. Feature learning methods fall into two groups: supervised and unsupervised. In supervised feature learning, the labels of the input data are used to learn the feature representation and train a classification method [7]. In unsupervised feature learning, the input data is used without its labels to learn the feature representation [8]–[10]. Unsupervised feature learning has been applied to time-series data [11], [12]; however, current approaches have not yet provided the expected performance in ECG analysis, mostly due to the wide set of diverse patterns related to different cardiac events. On the other hand, the lack of annotated long ECG recordings limits the application of supervised feature learning methods.

To the best of our knowledge, the previously reported unsupervised feature learning methods for ECG analysis are based on deep learning [13]. In [14], clustering is used to find the most representative beats for training recurrent neural networks. In [15], the authors feed aligned heartbeats to a deep neural network for feature learning and classification.

In this paper, we propose an unsupervised feature learning method that takes segmented, unannotated ECG signals as input, clusters the segments to learn the relationships among them, and then uses the resulting clusters to learn high-level features. For false alarm detection, the algorithm first segments each patient’s ECG signal based on its heartbeats. These segments contain important features that characterize the major ECG components, such as the P wave, QRS complex, and T wave. The segments extracted from each patient’s ECG signal are then grouped into several clusters, each representing a group of segments that are most similar to each other. In effect, we build a bidirectional (top-down and bottom-up) feature learning scheme based on multi-resolution features, which helps to focus on patterns from the highest level to the lowest and vice versa. In the bottom-up direction, it provides a framework to capture nonlinear relations, differences, and similarities among the local features at the higher levels of resolution (i.e., segments and clusters). It is worth noting that this method uses only a single-lead ECG signal and achieves results comparable to those of methods that use all signals available in the 2015 PhysioNet challenge dataset (i.e., ECG lead II, ECG lead V, arterial blood pressure (ABP), or photoplethysmogram (PPG)). This suggests that the method could be used for cardiac event detection in wearable heart monitoring systems, since the majority of these remote monitoring systems, such as the Holter monitor, collect only a single-lead ECG signal.

Section II describes the database and presents the proposed method. Section III reports the experimental results, followed by the concluding remarks in Section IV.

II. Database Description

In this paper, we use the publicly available database for ‘Reducing False Arrhythmia Alarms in the ICU’ provided by the PhysioNet/Computing in Cardiology Challenge 2015 [16], [17]. Each recording includes one or two ECG leads and one or more pulsatile waveforms, such as ABP and PLETH. The dataset focuses on five life-threatening arrhythmia alarms: Asystole (ASY), Extreme Bradycardia (EBR), Extreme Tachycardia (ETC), Ventricular Tachycardia (VTA), and Ventricular Flutter/Fibrillation (VFB). The training set contains 750 recordings. Because the test set is not available to the public, we used the training set for both training and testing by means of k-fold cross-validation. This study focuses only on the ECG lead II signal, as this lead is the only recording available for the majority of patients. The ECG signals are 5 minutes long and have been resampled at 250 Hz. Of the 750 subjects, 29 were removed because their recordings did not include an ECG lead II signal. Table 1 shows the numbers of true and false alarms for each arrhythmia type considered in this study.

Table 1: Number of true and false alarms for each arrhythmia type.

| Alarm | # of Patients | False Alarm | True Alarm |
|---|---|---|---|
| ASY | 116 | 94 | 22 |
| EBR | 86 | 41 | 45 |
| VFB | 57 | 51 | 6 |
| ETC | 131 | 8 | 123 |
| VTA | 331 | 245 | 86 |
| Total | 721 | 439 | 282 |
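As a quick arithmetic check of the counts in Table 1, the following sketch recomputes the totals and the share of false alarms per alarm type. The dictionary and function names are illustrative and not part of the original study.

```python
# Alarm counts copied from Table 1: alarm type -> (false alarms, true alarms).
ALARM_COUNTS = {
    "ASY": (94, 22),
    "EBR": (41, 45),
    "VFB": (51, 6),
    "ETC": (8, 123),
    "VTA": (245, 86),
}


def false_alarm_fraction(n_false, n_true):
    """Fraction of the alarms of one type that were false."""
    return n_false / (n_false + n_true)


total_false = sum(n_false for n_false, _ in ALARM_COUNTS.values())
total_true = sum(n_true for _, n_true in ALARM_COUNTS.values())

for alarm, (n_false, n_true) in ALARM_COUNTS.items():
    share = false_alarm_fraction(n_false, n_true)
    print(f"{alarm}: {share:.2f} of alarms are false")
print(f"Overall: {false_alarm_fraction(total_false, total_true):.2f}")
```

The share of false alarms differs strongly between alarm types (for example, ETC alarms are rarely false while VFB alarms mostly are), so the two classes are imbalanced within most alarm types.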

Most signal processing methods based on time-series analysis or transform-based techniques treat the entire collected signal in the same way, although empirically the abnormal portions of a signal often contain more information about the event of interest. A common feature of many biomedical signals is a basic periodic pattern that can help distinguish between normal and abnormal conditions of a physiological system. For instance, in ECG signal analysis, the periodic normal ECG signal reveals basic information about heart function, such as the heartbeat, while the abnormal ECG can help in the diagnosis of several arrhythmias. The multi-resolution feature extraction method presented below leverages this difference between the normal and abnormal portions by extracting additional higher-resolution features from the abnormal sections, thereby enhancing the accuracy of arrhythmia detection.

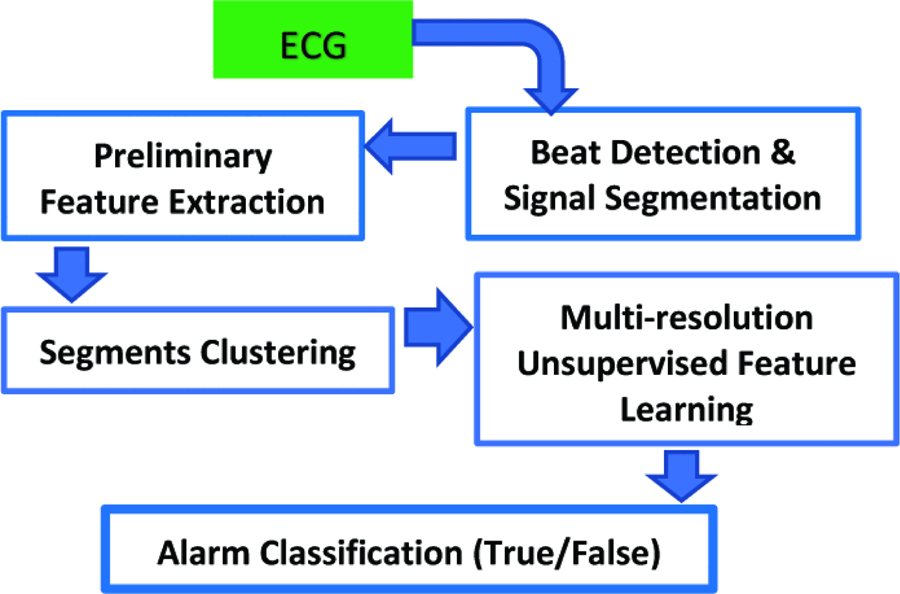

Figure 1 provides a schematic diagram of the overall method. First, the ECG signals are segmented by performing peak detection and decomposing each signal into P-T segments. Then, a set of preliminary features is extracted from each segment, as described in Table 2. Next, the detected segments of each patient are grouped into several clusters using the K-means algorithm, and a set of multi-resolution features is learned from the clusters in an unsupervised way. K-means is used for clustering because it provides fast and robust performance in most applications. Finally, the newly constructed features are normalized and used to classify the alarms as true or false.

Figure 1: A schematic diagram of the proposed method.

Table 2: The preliminary 84 features extracted for each segment.

| No. | Feature | No. | Feature |
|---|---|---|---|
| 1 | Px | 11 | OnQRS_x |
| 2 | Py | 12 | OnQRS_y |
| 3 | Qx | 13 | OFFQRS_x |
| 4 | Qy | 14 | OFFQRS_y |
| 5 | Rx | ⋯ | ⋯ |
| 6 | Ry | ⋯ | ⋯ |
| 7 | Sx | 80 | RR2 interval |
| 8 | Sy | 82 | RR interval |
| 9 | Tx | 83 | R-R2 amplitude |
| 10 | ⋯ | 84 | R-R amplitude |

A. Beat Detection and Signal Segmentation

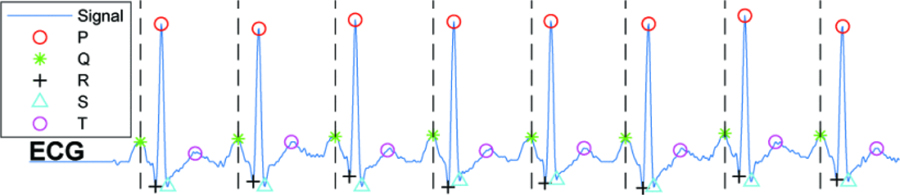

Several techniques exist to segment ECG signals and extract the beat-to-beat intervals. The Pan-Tompkins algorithm is one of the most common low-complexity methods for detecting the QRS complex in ECG signals [18]. Most ECG segmentation methods are highly sensitive to disturbances such as noise, interference, and motion artifacts. The objective of the proposed method is to decrease the number of false alarms caused by these abnormalities in the signal. Consider three general types of segments in the ECG signal: normal cycles, arrhythmia segments, and abnormal segments (i.e., noisy segments, irregular segments due to inaccurate segmentation, or segments affected by sudden changes in QRS amplitude). The proposed feature learning technique can significantly reduce the impact of inaccurate segmentation, noise, and abrupt changes on alarm detection, since these distortions make segments behave differently from the known arrhythmia patterns. During the clustering phase, abnormal segments caused by these distortions are likely to fall into separate clusters. The classification technique can then recognize such segments by learning the relations between the labels and the extracted clusters. Note that the proposed feature learning algorithm is generic and can be applied on top of any segmentation technique, such as the Hamilton-Tompkins or Hilbert transform-based algorithms. After the R-peaks are detected, the other waves in the signal (P, Q, S, and T) are located using adaptive search windows around each peak. Each segment is then defined from the onset of its P wave to the offset of its T wave. Figure 2 illustrates an ECG signal annotated with R-peaks and P, QRS, and T waves.

Figure 2: The detected peaks and computed segments of an ECG signal. The part between two consecutive dashed lines is considered one segment.
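To make the segmentation step concrete, here is a minimal sketch on a synthetic signal. It is not the Pan-Tompkins algorithm and not the authors' implementation: R-peaks are found by a simple amplitude threshold with a refractory period, and each segment is approximated by a fixed window around the R-peak rather than by adaptive search windows. The function names, `threshold_ratio`, `refractory_s`, `before_s`, and `after_s` are all illustrative assumptions.

```python
import math


def detect_r_peaks(signal, fs, threshold_ratio=0.6, refractory_s=0.25):
    """Indices of local maxima above a fraction of the global maximum.

    A deliberately simple stand-in for a real QRS detector.
    """
    threshold = threshold_ratio * max(signal)
    refractory = int(refractory_s * fs)  # minimum spacing between peaks
    peaks = []
    for i in range(1, len(signal) - 1):
        is_local_max = signal[i - 1] < signal[i] >= signal[i + 1]
        far_enough = not peaks or i - peaks[-1] > refractory
        if is_local_max and signal[i] >= threshold and far_enough:
            peaks.append(i)
    return peaks


def segment_bounds(peaks, fs, n_samples, before_s=0.25, after_s=0.40):
    """Fixed windows around each R-peak, roughly P onset to T offset."""
    before, after = int(before_s * fs), int(after_s * fs)
    return [(max(0, p - before), min(n_samples, p + after)) for p in peaks]


# Synthetic signal: one narrow Gaussian "R wave" per second, 5 s at 250 Hz.
FS = 250
beat_times = [0.5, 1.5, 2.5, 3.5, 4.5]
ecg = [
    sum(math.exp(-((i / FS - tb) ** 2) / (2 * 0.01 ** 2)) for tb in beat_times)
    for i in range(5 * FS)
]

r_peaks = detect_r_peaks(ecg, FS)
segments = segment_bounds(r_peaks, FS, len(ecg))
print(r_peaks)
print(segments)
```

On real recordings the fixed window would be replaced by per-beat detection of the P onset and T offset, as described in the text.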

B. Preliminary Feature Extraction

As mentioned earlier, the entire 5-minute recordings of ECG lead II are used for feature extraction. Preliminary features are extracted in the time domain from each segment of the ECG signal. These features fall into four groups: 1) the x- and y-coordinates of each wave present in the segment (i.e., the P, Q, R, S, and T waves), for example Px and Py; 2) the coordinates of the QRS onset and offset points; 3) the intervals between waves, for example RR intervals; and 4) the amplitude differences between waves, for example the R-R amplitude. The full set of extracted ECG features (84 in total) is listed in Table 2. The x-value of a wave is its distance from the Q wave of the segment, which serves as the reference point. The y-value is the amplitude of the ECG signal at that point.

In the first group, the (x, y) coordinates of P, Q, R, S, and T are extracted for each segment. For example, Px and Py are the x- and y-coordinates of the P wave. In the second group, OnQRS_x is the average of the x-values of the P and Q waves, and OFFQRS_x is the average of the x-values of the S and T waves; OnQRS_y and OFFQRS_y are the ECG signal values at OnQRS_x and OFFQRS_x, respectively. In the third group, intervals (differences between the x-values of two waves) are measured. For example, the PP interval is the difference between the x-value of the P wave of the current segment and that of the next segment, and the RR2 interval is the distance between the x-value of the R-peak of the current segment and that of the next-but-one segment. In the fourth group, differences between the y-values of two peaks are measured. For example, the R-R amplitude is the difference between the y-value of the R-peak of the current segment and that of the next segment, and the R-R2 amplitude is the difference between the y-value of the R-peak of the current segment and that of the next-but-one segment.
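The following sketch computes a small subset of the features described above for one segment. It assumes, hypothetically, that each beat is given as a dictionary of absolute sample indices for its P, Q, R, S, and T waves; the input layout and the function name are illustrative, x-values are expressed in samples, and intervals between segments are taken from the absolute positions.

```python
def segment_features(beats, i, signal):
    """Subset of the per-segment features for beat i.

    `beats[i]` maps each wave name to its absolute sample index (assumed
    input format). Beats i+1 and i+2 must exist for the interval features.
    """
    cur, nxt, nxt2 = beats[i], beats[i + 1], beats[i + 2]
    q_ref = cur["Q"]  # the Q wave is the reference point for x-values
    feats = {}
    # Wave coordinates: x relative to Q, y is the signal amplitude.
    for wave in "PQRST":
        feats[wave + "x"] = cur[wave] - q_ref
        feats[wave + "y"] = signal[cur[wave]]
    # QRS onset/offset: averages of the neighbouring wave positions.
    feats["OnQRS_x"] = (feats["Px"] + feats["Qx"]) / 2
    feats["OFFQRS_x"] = (feats["Sx"] + feats["Tx"]) / 2
    feats["OnQRS_y"] = signal[q_ref + round(feats["OnQRS_x"])]
    feats["OFFQRS_y"] = signal[q_ref + round(feats["OFFQRS_x"])]
    # Intervals to the next and next-but-one segments.
    feats["PP interval"] = nxt["P"] - cur["P"]
    feats["RR interval"] = nxt["R"] - cur["R"]
    feats["RR2 interval"] = nxt2["R"] - cur["R"]
    # Amplitude differences between R-peaks.
    feats["R-R amplitude"] = signal[cur["R"]] - signal[nxt["R"]]
    feats["R-R2 amplitude"] = signal[cur["R"]] - signal[nxt2["R"]]
    return feats


# Toy input: three beats one second apart at 250 Hz, with made-up amplitudes.
toy_signal = [0.0] * 1000
toy_signal[125], toy_signal[375], toy_signal[625] = 1.0, 0.8, 1.2
toy_beats = [
    {"P": 100 + 250 * k, "Q": 120 + 250 * k, "R": 125 + 250 * k,
     "S": 130 + 250 * k, "T": 180 + 250 * k}
    for k in range(3)
]
toy_feats = segment_features(toy_beats, 0, toy_signal)
print(toy_feats)
```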

C. Multi-resolution Unsupervised Feature Learning

The proposed method learns the patterns of different heart arrhythmias through several high-level features, which are learned through unsupervised feature learning. The key contribution of the method is to focus attention, through clustering, on the abnormal portions of long ECG signals, whether they stem from arrhythmia or distortion. When clustering is applied to the segments, the center of each cluster is a representative of the segments that belong to that cluster. The method then assigns a weight to each cluster based on the number of segments it contains; these weights represent the probability of occurrence of each cluster. The high-level learned features are built from the positions of the cluster centers and the number of segments in each cluster.
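The per-patient clustering and the cluster weights can be sketched as follows. This is a minimal pure-Python K-means, not the authors' MATLAB code. It assumes that the cityblock variant updates each center with the component-wise median and the squared Euclidean variant with the mean, and it uses a deterministic farthest-point initialization, which is an illustrative choice. The toy data is two-dimensional, whereas real segments would have 84 features.

```python
from statistics import mean, median


def distance(a, b, metric):
    if metric == "cityblock":
        return sum(abs(x - y) for x, y in zip(a, b))
    return sum((x - y) ** 2 for x, y in zip(a, b))  # squared Euclidean


def assign(points, centers, metric):
    """Group points by their nearest center."""
    clusters = [[] for _ in centers]
    for p in points:
        nearest = min(range(len(centers)),
                      key=lambda t: distance(p, centers[t], metric))
        clusters[nearest].append(p)
    return clusters


def kmeans(points, k, metric="cityblock", max_iter=100):
    # Farthest-point initialization (assumption, chosen for determinism).
    centers = [list(points[0])]
    while len(centers) < k:
        farthest = max(points,
                       key=lambda p: min(distance(p, c, metric) for c in centers))
        centers.append(list(farthest))
    center_fn = median if metric == "cityblock" else mean
    for _ in range(max_iter):
        clusters = assign(points, centers, metric)
        updated = [
            [center_fn(col) for col in zip(*members)] if members else centers[t]
            for t, members in enumerate(clusters)
        ]
        if updated == centers:
            break
        centers = updated
    return centers, assign(points, centers, metric)


# Toy data: eight similar "normal" segments and two outlying segments.
points = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1),
          (0.2, 0.0), (0.0, 0.2), (0.2, 0.1), (0.1, 0.2),
          (5.0, 5.0), (5.2, 4.8)]
centers, clusters = kmeans(points, k=2, metric="cityblock")
sizes = [len(c) for c in clusters]
weights = [n / len(points) for n in sizes]  # empirical cluster probabilities
print(sizes, weights)
```

The small cluster receives a low weight, which is the signal the method uses to draw attention to rare, potentially abnormal segments.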

The extracted segments are the units that provide a micro-resolution view of the signal and allow the abnormalities encountered to be separated into different clusters. Let $c_t = \{Seg_1, Seg_2, \ldots, Seg_n\}$ denote cluster $t$ as a set of segments, with $|c_t| = n$. The clusters of segments provide a macro (higher-level) representation of the signal, in which the center of each cluster represents the behavior of a set of segments that are most similar to each other. Therefore, the center of a cluster (its centroid) is used as the representative of its segments. The center of a cluster has the same number of features as each segment (i.e., 84). The center of cluster $t$ is denoted by $cc_t = \{f_{t1}, f_{t2}, \ldots, f_{t84}\}$. The number of elements in each cluster, the cluster centers, and the distances among these centers are defined as high-level features that capture the relations among different signals. For instance, when a cluster has relatively few elements, its center is far from the other clusters of the same patient, and similar clusters are frequently seen in other patients’ signals, the cluster likely represents an arrhythmia. These high-level features are therefore used to construct a low-dimensional set from the high-dimensional low-level features of the segments. The preliminary features of the centers are normalized between 0 and 1 for each cluster. In addition, the clusters of each patient are sorted by their number of members in ascending order. The high-level features fall into four categories:

1. The patient’s heart rate and alarm type (i.e., Tachycardia, Asystole, Ventricular Flutter/Fibrillation, Ventricular Tachycardia, or Bradycardia).

2. The number of elements of each cluster, in ascending order. For example, for two clusters with $|c_1| < |c_2|$, there are two features in the order $|c_1|$ and $|c_2|$.

3. The ratio of the sum of the feature values of each cluster center to the number of elements in that cluster, in the same order. For example, for two clusters there are two values in the order $\sum_{i=1}^{84} f_{1i} / |c_1|$ and $\sum_{i=1}^{84} f_{2i} / |c_2|$, assuming that $|c_1| < |c_2|$.

4. The ratio of the sum of the feature values of each cluster center to the number of all segments of that patient, in the same order. For example, for two clusters there are two values in the order $\sum_{i=1}^{84} f_{1i} / (|c_1| + |c_2|)$ and $\sum_{i=1}^{84} f_{2i} / (|c_1| + |c_2|)$, assuming that $|c_1| < |c_2|$.
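The cluster-based feature categories listed above can be assembled as in the sketch below. It assumes that the cluster centers are already normalized to [0, 1], that every cluster is non-empty, and that the alarm type is supplied as a numeric code; the function name and the alarm encoding are illustrative assumptions, and the toy centers have two features instead of 84.

```python
def high_level_features(centers, sizes, heart_rate, alarm_code):
    """Build a high-level feature vector from per-patient clusters.

    centers: one feature list per cluster (assumed normalized to [0, 1]).
    sizes:   number of segments per cluster (assumed > 0).
    """
    # Sort clusters by size, smallest first.
    order = sorted(range(len(sizes)), key=lambda t: sizes[t])
    total_segments = sum(sizes)
    sizes_sorted = [sizes[t] for t in order]
    center_sums = [sum(centers[t]) for t in order]
    # Sum of center features divided by the cluster's own size.
    per_cluster = [s / n for s, n in zip(center_sums, sizes_sorted)]
    # Sum of center features divided by the patient's segment count.
    per_patient = [s / total_segments for s in center_sums]
    return [heart_rate, alarm_code] + sizes_sorted + per_cluster + per_patient


# Toy example with two clusters.
toy_vector = high_level_features(
    centers=[[0.5, 0.5], [0.25, 0.25]],
    sizes=[8, 2],
    heart_rate=72.0,
    alarm_code=1,
)
print(toy_vector)
```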

D. Model Building

The learned high-level features of each signal are used in two different scenarios as the input to the classification phase. We used two well-known classification methods, Boosted Trees and RUSBoosted Trees, from MATLAB’s Classification Learner app, since they are based on ensemble learning and provide robust results [19]. However, the proposed method is independent of the choice of classification technique, and the extracted high-level features can be fed to any classifier.

In the first scenario, only the unsupervised learned features are used as the input. In the second scenario, the unsupervised learned features are combined with features extracted using the discrete wavelet transform, as described in Section III. We also evaluated the proposed method using two different distance measures. As shown in Section III, the method achieves good alarm classification performance using only the single ECG lead II signal and only a small number of high-level features, which indicates its capability for arrhythmia detection.
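RUSBoost combines boosting with random undersampling of the larger class. The sketch below shows only the undersampling step, under the assumption that each class is reduced to the size of the rarest class. It is not the MATLAB implementation, and the function name and `seed` parameter are illustrative.

```python
import random


def random_undersample(features, labels, seed=0):
    """Keep as many samples per class as the rarest class contains."""
    rng = random.Random(seed)
    by_class = {}
    for x, y in zip(features, labels):
        by_class.setdefault(y, []).append(x)
    n_keep = min(len(rows) for rows in by_class.values())
    balanced = []
    for y, rows in by_class.items():
        for x in rng.sample(rows, n_keep):
            balanced.append((x, y))
    rng.shuffle(balanced)
    return [x for x, _ in balanced], [y for _, y in balanced]


# Toy example: eight samples of class 0 and two of class 1.
toy_x = list(range(10))
toy_y = [0] * 8 + [1] * 2
bal_x, bal_y = random_undersample(toy_x, toy_y)
print(bal_x, bal_y)
```

In a boosting loop, a fresh balanced subset of this kind would be drawn before each weak learner is trained.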

III. Experiment Results

The performance of this method is evaluated using the 2015 PhysioNet/Computing in Cardiology Challenge dataset [16], [17]. Note that in this study we used only ECG lead II to extract the features, as this signal was the most commonly available recording in the database. In contrast, the majority of existing studies on this dataset used all signals available for the patients, including ECG II, ECG V, PPG, and ABP (for many patients only two of these signals are available), and yet the proposed method achieves performance comparable to these works. Note also that the winning entries of the 2015 PhysioNet challenge reported results based on training on the public portion of the dataset and testing on the private portion, whereas in this work the public training set is used for both training and testing with k-fold cross-validation.
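A k-fold split of the 721 retained recordings can be sketched as follows. The number of folds is not stated in the text, so `k=5` below is an assumption, as are the function name and the use of shuffling without stratification.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, test_indices) for each of the k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k nearly equal parts
    for i in range(k):
        test = folds[i]
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, test


splits = list(k_fold_indices(n_samples=721, k=5))
for train_idx, test_idx in splits:
    print(len(train_idx), len(test_idx))
```

Each recording appears in exactly one test fold, so every alarm is classified once by a model that did not see it during training.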

To evaluate the performance of the proposed method, we report its accuracy, sensitivity, specificity, and area under the ROC curve (AUC) for the two aforementioned scenarios. The results are compared against two common approaches, time-domain and wavelet-domain analysis of the ECG signal [20]. In the wavelet-based method, the discrete wavelet transform (DWT) is applied to the entire 5-minute ECG lead II recording, using a 6-level Daubechies 8 (db8) wavelet because its shape matches the shape of the ECG signal well. Because feeding all wavelet coefficients as features into the classification algorithm can result in overfitting, we reduce the number of features by extracting 20 representative statistical and information-theoretic features from each level of the wavelet vectors, as summarized in Table II in [6]. In this case, the overall number of wavelet-based features is 20 × 6 = 120. We also compared the proposed method against a scenario in which the 84 low-level features per segment are extracted from the last 10 seconds of the ECG recording, as the dataset indicates that the cardiac events occurred within the last 10 seconds of the signal. Since these 10-second recordings contain a different number of segments for each patient, we took the last seven segments of the signal, the average number of segments observed in the last 10 seconds, and extracted the 84 features per segment for these segments (a total of 84 × 7 = 588 low-level features). Note that a longer interval of the signal could be considered in the time domain, but this would yield a larger number of low-level features and could result in overfitting.
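The idea of summarizing each wavelet level by a few statistics can be sketched as follows. To stay self-contained, the sketch swaps in the Haar wavelet for the db8 wavelet used in the study and computes only four illustrative statistics per level from the detail coefficients, instead of the 20 features of [6]. The function names and the choice of statistics are assumptions.

```python
import math
from statistics import mean, pstdev


def haar_step(x):
    """One Haar DWT level: returns (approximation, detail) coefficients."""
    approx, detail = [], []
    for a, b in zip(x[0::2], x[1::2]):  # a trailing odd sample is dropped
        approx.append((a + b) / math.sqrt(2))
        detail.append((a - b) / math.sqrt(2))
    return approx, detail


def wavelet_features(signal, levels=6):
    """Mean, standard deviation, energy, and entropy of each detail level."""
    feats = []
    approx = list(signal)
    for _ in range(levels):
        approx, detail = haar_step(approx)
        energy = sum(d * d for d in detail)
        entropy = 0.0
        if energy > 0:
            probs = [d * d / energy for d in detail]
            entropy = -sum(p * math.log(p) for p in probs if p > 0)
        feats.extend([mean(detail), pstdev(detail), energy, entropy])
    return feats


# A 5-minute recording at 250 Hz has 5 * 60 * 250 = 75000 samples;
# a short synthetic sine wave is used here instead.
toy_ecg = [math.sin(2 * math.pi * i / 32) for i in range(256)]
toy_wavelet_feats = wavelet_features(toy_ecg)
print(len(toy_wavelet_feats))  # 4 statistics x 6 levels
```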

Tables 3 and 4 present the accuracy, sensitivity, specificity, and AUC of the Boosted Trees and RUSBoosted Trees classifiers, respectively, for five scenarios: i) using the 588 low-level features extracted from the last 10 seconds of the signal; ii) using the 120 statistical and information-theoretic features extracted from a 6-level DWT over the entire 5-minute ECG lead II signal; iii) using only the proposed high-level features with cityblock as the distance metric of K-means clustering; iv) using only the proposed high-level features with squared Euclidean distance as the distance metric of K-means clustering; and v) using the proposed high-level features (with either distance metric) together with the DWT-based features. The number of clusters in K-means is set to five. As the results show, the Boosted Trees classifier using only the proposed features with the cityblock distance metric performs better in nearly all measures than all other scenarios, including using only the DWT-based features, using the high-level and DWT-based features together (possibly because of redundancy between the two sets), and using the squared Euclidean distance metric.

Table 3: Performance of the Boosted Trees classification method for different scenarios: i) the high-level features learned by the proposed method with the cityblock distance metric in clustering (31 features), referred to as HLFcityblock; ii) the high-level features learned by the proposed method with the squared Euclidean distance metric in clustering (31 features), referred to as HLFEuclidean; iii) 120 statistical and information-theoretic features extracted using a 6-level DWT; and iv) 588 low-level features extracted from the last 10 seconds of the signal, referred to as LLF. The last two columns combine the DWT features with each HLF set.

| Scenarios | LLF | DWT | HLFcityblock | HLFEuclidean | DWT + HLFcityblock | DWT + HLFEuclidean |
|---|---|---|---|---|---|---|
| Accuracy | 0.791 | 0.717 | 0.818 | 0.793 | 0.788 | 0.803 |
| Specificity | 039 | 0.65 | 0.83 | 0.79 | 0.77 | 0.78 |
| Sensitivity | 0.80 | 0.76 | 0.81 | 0.80 | 0.80 | 0.82 |
| AUC | 0.82 | 0.78 | 0.85 | 0.82 | 0.84 | 0.85 |

Table 4: Performance of the RUSBoosted Trees classification method for different scenarios: i) the high-level features learned by the proposed method with the cityblock distance metric in clustering (31 features), referred to as HLFcityblock; ii) the high-level features learned by the proposed method with the squared Euclidean distance metric in clustering (31 features), referred to as HLFEuclidean; iii) 120 statistical and information-theoretic features extracted using a 6-level DWT; and iv) 588 low-level features extracted from the last 10 seconds of the signal, referred to as LLF. The last two columns combine the DWT features with each HLF set.

| Scenarios | LLF | DWT | HLFcityblock | HLFEuclidean | DWT + HLFcityblock | DWT + HLFEuclidean |
|---|---|---|---|---|---|---|
| Accuracy | 0.775 | 0.725 | 0.779 | 0.77 | 0.753 | 0.771 |
| Specificity | 0.73 | 0.63 | 0.75 | 0.72 | 0.67 | 0.70 |
| Sensitivity | 0.80 | 0.80 | 0.80 | 0.80 | 0.81 | 0.82 |
| AUC | 0.83 | 0.79 | 0.82 | 0.81 | 0.83 | 0.85 |
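For reference, the four reported metrics can be computed from labels, predictions, and classifier scores as in the sketch below. Which class is treated as positive is left to the caller, since the text does not state it; the function names are illustrative. The AUC is computed as the fraction of positive/negative pairs that the scores rank correctly, counting ties as one half.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, sensitivity, and specificity from hard predictions."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),  # true positive rate
        "specificity": tn / (tn + fp),  # true negative rate
    }


def auc(y_true, scores, positive=1):
    """Area under the ROC curve via pairwise ranking of scores."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


toy_metrics = classification_metrics(
    y_true=[1, 1, 1, 0, 0, 0, 0, 1],
    y_pred=[1, 1, 0, 0, 0, 1, 0, 1],
)
toy_auc = auc([1, 1, 1, 0, 0], [0.9, 0.8, 0.4, 0.1, 0.5])
print(toy_metrics, toy_auc)
```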

In summary, the proposed method outperforms the common wavelet-based and time-domain methods using only 31 high-level features, since the unsupervised learned features have better discriminative power while requiring much less computation than commonly used methods.

IV. Conclusion

In this paper, an unsupervised feature learning approach is proposed for the analysis of periodic and semi-periodic biomedical signals. The method is based on clustering and learns a small number of high-level features from clusters of time-domain segments of the signals. The learned high-level features can handle the various patterns and variations in ECG signals in an autonomous and scalable way by splitting the signals into their constituent segments and capturing the differences among the segments through clustering. The proposed feature learning technique was applied to a single-lead ECG signal from the 2015 PhysioNet challenge to distinguish the ECG patterns associated with several arrhythmias from distortions caused by noise, interference, motion artifacts, and other sources of disturbance. For this purpose, we applied the method to the entire 5-minute ECG recordings so that the model could learn both the normal and abnormal patterns in each patient’s signal. As the experimental results show, the proposed method achieves better performance than common false alarm detection approaches based on the DWT and on time-domain analysis of the last 10 seconds of the signal (which include the event), while using only a small number of high-level features and little computation.

Acknowledgements:

This material is based upon work supported by the National Science Foundation under Grant Number 1657260. Research reported in this publication was also supported by the National Institute on Minority Health and Health Disparities of the National Institutes of Health under Award Number U54MD012388.

Contributor Information

Behzad Ghazanfari, School of Informatics, Computing and Cyber Security, Northern Arizona University, Flagstaff, AZ.

Fatemeh Afghah, School of Informatics, Computing and Cyber Security, Northern Arizona University, Flagstaff, AZ.

Kayvan Najarian, Department of Computational Medicine and Bioinformatics, Professor, Department of Emergency Medicine, Michigan Center for Integrative Research in Critical Care, University of Michigan, Ann Arbor, MI.

Sajad Mousavi, School of Informatics, Computing and Cyber Security, Northern Arizona University, Flagstaff, AZ.

Jonathan Gryak, Department of Computational Medicine and Bioinformatics, University of Michigan, Ann Arbor, MI.

James Todd, School of Informatics, Computing and Cyber Security, Northern Arizona University, Flagstaff, AZ.

V. References

- [1] Zhang Y and Szolovits P, “Patient-specific learning in real time for adaptive monitoring in critical care,” Journal of biomedical informatics, vol. 41, no. 3, pp. 452–460, 2008.
- [2] Li Q and Clifford GD, “Signal processing: False alarm reduction,” in Secondary Analysis of Electronic Health Records. Springer, 2016, pp. 391–403.
- [3] He R, Zhang H, Wang K, Yuan Y, Li Q, Pan J, Sheng Z, and Zhao N, “Reducing false arrhythmia alarms in the ICU using novel signal quality indices assessment method,” in Computing in Cardiology Conference (CinC), 2015 IEEE, 2015, pp. 1189–1192.
- [4] Hira ZM and Gillies DF, “A review of feature selection and feature extraction methods applied on microarray data,” Advances in bioinformatics, vol. 2015, 2015.
- [5] Afghah F, Razi A, and Najarian K, “A shapley value solution to game theoretic-based feature reduction in false alarm detection,” Neural Information Processing Systems (NIPS), Workshop on Machine Learning in Healthcare, arXiv:1512.01680 [cs.CV], Dec. 2015.
- [6] Afghah F, Razi A, Soroushmehr R, Ghanbari H, and Najarian K, “Game theory for systematic selection of wavelet-based features; application in false alarm detection in intensive care units,” Entropy, Special Issue on Information Theory in Game Theory, vol. 20, no. 3, p. 190, 2018.
- [7] Oglic D and Gärtner T, “Greedy feature construction,” in Advances in Neural Information Processing Systems, 2016, pp. 3945–3953.
- [8] Coates A, Ng A, and Lee H, “An analysis of single-layer networks in unsupervised feature learning,” in Proceedings of the fourteenth international conference on artificial intelligence and statistics, 2011, pp. 215–223.
- [9] Coates A and Ng AY, “Learning feature representations with k-means,” in Neural networks: Tricks of the trade. Springer, 2012, pp. 561–580.
- [10] Goroshin R, Bruna J, Tompson J, Eigen D, and LeCun Y, “Unsupervised feature learning from temporal data,” arXiv preprint arXiv:1504.02518, 2015.
- [11] Längkvist M, Karlsson L, and Loutfi A, “A review of unsupervised feature learning and deep learning for time-series modeling,” Pattern Recognition Letters, vol. 42, pp. 11–24, 2014.
- [12] Zhang Q, Wu J, Yang H, Tian Y, and Zhang C, “Unsupervised feature learning from time series,” in IJCAI, 2016, pp. 2322–2328.
- [13] Eduardo A, Aidos H, and Fred A, “ECG-based biometrics using a deep autoencoder for feature learning,” 2017.
- [14] Zhang C, Wang G, Zhao J, Gao P, Lin J, and Yang H, “Patient-specific ECG classification based on recurrent neural networks and clustering technique,” in 2017 13th IASTED International Conference on Biomedical Engineering (BioMed), IEEE, 2017, pp. 63–67.
- [15] Xu SS, Mak M-W, and Cheung C-C, “Towards end-to-end ECG classification with raw signal extraction and deep neural networks,” IEEE journal of biomedical and health informatics, 2018.
- [16] PhysioNet, Reducing False Arrhythmia Alarms in the ICU, 2015, accessed July 28, 2016 [Online]. Available: http://www.physionet.org/challenge/2015/
- [17] Clifford GD, Silva I, Moody B, Li Q, Kella D, Shahin A, Kooistra T, Perry D, and Mark RG, “The PhysioNet/computing in cardiology challenge 2015: reducing false arrhythmia alarms in the ICU,” in Computing in Cardiology Conference (CinC), 2015 IEEE, 2015, pp. 273–276.
- [18] Pan J and Tompkins WJ, “A real-time QRS detection algorithm,” IEEE transactions on biomedical engineering, no. 3, pp. 230–236, 1985.
- [19] “MATLAB’s Classification Learner app.”
- [20] Salas-Boni R, Bai Y, Harris P, Drew B, and Hu X, “False ventricular tachycardia alarm suppression in the ICU based on the discrete wavelet transform in the ECG signal,” Journal of electrocardiology, vol. 47, 08 2014.