Sensory Processing

Towards a somatosensory theory of speech perception

Abstract

Speech perception is known to be a multimodal process, relying not only on auditory input but also on the visual system and possibly on the motor system as well. To date there has been little work on the potential involvement of the somatosensory system in speech perception. In the present review, we identify the somatosensory system as another contributor to speech perception. First, we argue that evidence in favor of a motor contribution to speech perception can just as easily be interpreted as showing somatosensory involvement. Second, physiological and neuroanatomical evidence for auditory-somatosensory interactions across the auditory hierarchy indicates the availability of a neural infrastructure that supports somatosensory involvement in auditory processing in general. Third, there is accumulating evidence for somatosensory involvement in the context of speech specifically. In particular, tactile stimulation modifies speech perception, and speech auditory input elicits activity in somatosensory cortical areas. Moreover, speech sounds can be decoded from activity in somatosensory cortex; lesions to this region affect perception, and vowels can be identified based on somatic input alone. We suggest that the somatosensory involvement in speech perception derives from the somatosensory-auditory pairing that occurs during speech production and learning. By bringing together findings from a set of studies that have not been previously linked, the present article identifies the somatosensory system as a presently unrecognized contributor to speech perception.

INTRODUCTION

Speech perception is fundamentally auditory: The brain areas involved in hearing are also involved in speech perception. Perception is sensitive to the frequency composition of the acoustical input and is disrupted by acoustical noise and by hearing loss. However, there is likewise extensive evidence that the visual system and possibly the motor system each contribute to speech perception. Visual inputs bias perception (1, 2); motor areas are active during perceptual tasks (3, 4), and perception corresponds to articulatory differences (5). In the present article, we ask whether the somatosensory system, a term we use in reference to primary and second somatosensory cortex and to somatic functions of the inferior parietal lobule, also contributes to speech perception. Somatosensation may seem like an unlikely participant in speech perception; however, there is considerable anatomical, electrophysiological, and behavioral evidence of a somatosensory-auditory link, some specifically in the context of auditory processing in speech.

We begin by reviewing evidence for motor area involvement in speech perception and show that any evidence for the involvement of the motor system might equally be attributed to the somatosensory system. We then review evidence indicating auditory-somatosensory interactions at different levels of the brain. Both functional and neuroanatomical evidence point to a neural infrastructure that could support a role for the somatosensory system in auditory processing. Next, we review evidence for somatosensory involvement in speech perception per se. Studies using simultaneous tactile and auditory stimulation show that tactile stimulation can alter speech perception and associated neural activity. Other studies show that, even in the absence of tactile stimulation, speech perception on its own elicits activation of somatosensory cortical areas. We suggest that the somatosensory system contributes distinctions in somatic units, which guide the mapping of acoustic patterns onto speech categories, and, as such, assist in auditory speech perception. The focus throughout is on auditory, somatosensory, and phonological processes. This review does not deal with lexical, syntactic, or semantic processing and associated brain areas, which have been addressed in other work on speech perception (6, 7).

MOTOR AREA INVOLVEMENT IN SPEECH PERCEPTION

The motor theory of speech perception posits that the motor system provides an articulatory basis for speech perception (5, 8). In support of this idea, several neuroimaging studies have shown neural activation in frontal motor areas during speech perception. For example, Wilson et al. (4) used functional magnetic resonance imaging (fMRI) to show frontal cortical activity during both speech perception and speech production. Speech perception was associated with a peak of activity in the superior part of ventral premotor cortex, and this area overlapped with an area activated during speech production with a peak that sits on the border of Brodmann’s area 6 and anterior area 4. The posterior part of area 4, in the central sulcus, showed speech production-related activity but no perception-related activity. This general pattern of results is confirmed by other fMRI studies. Perception-related activity in left ventral premotor cortex was found to scale with utterance complexity (9) and to show increased activity during perception of nonnative phonemes (10). Activity in the precentral gyrus differed in the perception of bilabial and alveolar stops (3). Audiovisual speech stimuli elicited responses in premotor and motor cortex, but responses in audio-only speech perception were restricted to premotor cortex (11). Electrocorticography recordings also showed that mouth area ventral premotor cortex, as defined by movement elicited through direct cortical stimulation, was active during naturalistic speech perception (12). Other studies have confirmed that premotor cortex shows responses during speech perception (13, 14) but with only sparse responses in primary motor cortex.

Transcranial magnetic stimulation (TMS) shows a somewhat different pattern, consistent with the involvement of both premotor and primary motor cortex in perception. TMS of orofacial motor cortex showed that stimulation during speech perception alters listeners’ performance in a speech identification task (15). Motor-evoked potentials measured from the tongue (16) and the orbicularis oris muscle (17) both increased during perception of speech sounds that are articulated with the target muscle. Repetitive TMS (rTMS) to ventral premotor cortex impaired performance in a speech discrimination task (18). Furthermore, rTMS to the lip area of motor cortex impaired categorical perception on a bilabial-alveolar continuum (19), suggesting that these areas are causally involved in speech perceptual identification and discrimination. In a study that combined TMS and positron emission tomography (PET), increases in motor cortex excitability during speech perception correlated with activity in the posterior inferior frontal gyrus (20). The authors suggested that posterior inferior frontal gyrus (area 44) could be involved by priming the motor system in response to heard speech. Overall, these studies are consistent with the involvement of frontal motor areas in speech perception.

However, the involvement of motor and premotor cortex in speech perception may not be entirely motor. Specifically, there is limited evidence that ventral premotor cortex is directly involved in the control of speech movements, and the activity observed in frontal motor areas during speech perception may be due to the presence of somatosensory inputs that are recruited during perception. The details are as follows: neuroanatomical tracer studies and antidromic stimulation in monkeys show that primary motor cortex on its own has direct corticobulbar projections to brain stem motor nuclei involved in orofacial movements (21–23). These findings are consistent with the results of degeneration studies in humans, which suggest direct corticobulbar fibers to brain stem motor nuclei, including the hypoglossal nucleus, the facial nucleus, the nucleus ambiguus, and the trigeminal nucleus (24, 25). In contrast, projections from ventral premotor cortex are more limited. Specifically, after injection of an anterograde tracer in the laryngeal part of premotor cortex, Simonyan and Jürgens (26) did not find labeled neurons in the nucleus ambiguus, suggesting that in monkeys there is no direct corticobulbar projection to laryngeal motoneurons. However, evidence for a projection to hypoglossal nucleus was obtained with anterograde tracers injected in ventral premotor cortex of rhesus monkeys (22).

The literature on the control of upper limb movement is more extensive. Although sparse labeling of cells in ventral premotor cortex is found after the injection of retrograde tracers in the upper cervical spinal cord segments, there is little evidence of labeled neurons in ventral premotor cortex after tracer injection in segments associated with movement of the fingers and hands (27, 28). In macaque monkeys, outputs from ventral premotor cortex to the lower cervical spinal cord for the control of arm and hand movement are in the range of 1% of those from primary motor cortex. The fact that ventral premotor cortex projects to areas that do have direct outputs does not distinguish it from sensory areas such as somatosensory cortex, which similarly has projections to frontal motor areas proper. In fact, there are twice as many inputs to primary motor cortex from parietal cortex as from ventral premotor cortex. Likewise, there are more inputs to ventral premotor cortex from parietal cortex than from any other motor area (29, 30). Although direct connections to motor nuclei are not necessary either for the control of movement or in speech, to be implicated in the motor theory of speech perception it would seem crucial, given the name, that any candidate area be primarily a motor area and not substantially sensory.

It is well established that neurons in cortical motor areas have sensory receptive fields (Fig. 1). Microelectrode recordings from the forelimb area of primary motor and premotor cortex in nonhuman primates show responses to tactile stimulation of the hand and to proprioceptive stimulation (31–33) and also demonstrate responsiveness to changes in texture (34). Neurons in face area motor cortex and lateral premotor cortex were also shown to respond to tactile stimulation, with receptive fields for the upper lip, lower lip, tongue, teeth, and intraoral somatosensation (Ref. 35; see Fig. 1). Murray and Sessle (36) used intracortical microstimulation to evoke tongue movements from macaque motor cortex and recorded responses from the same area during tactile stimulation. Results showed a close spatial match between motor output of the tongue area in primary motor cortex and somatic input as defined by responses to tactile stimulation. Electrode recordings from the premotor cortex of anesthetized macaques showed responses to tactile stimulation of mouth and face, among other body parts such as leg, arm, and trunk (37). This is in line with an earlier study that also showed responses to tactile stimulation of the face, hand, and mouth in ventral premotor cortex of macaques (38).

Figure 1. Areas of primary motor cortex that elicit orofacial movement also respond to tactile stimulation. Symbols show locations in primary motor cortex that responded to tactile stimulation of different orofacial structures in macaques. Colored outlines show regions where intracortical microstimulation (ICMS) elicited movements of the hand, face, jaw, or tongue. The vertical dashed line, CS, represents the edge of the central sulcus. Gyrus indicates the crest of the precentral gyrus; sulcus is the anterior bank of the central sulcus. The thin dashed line on the right indicates the fundus of the central sulcus. Numbers show the location of Brodmann areas 4 and 3a. Adapted from Ref. 35.

Patterns of neuroanatomical connectivity indicate direct input to both primary motor cortex and ventral premotor cortex from somatosensory cortex and parietal cortex more generally, whereas there is limited input from auditory cortex. Injection of retrograde tracers in orofacial primary motor cortex in monkeys showed projections from primary and second somatosensory cortices, as well as from a variety of motor and prefrontal areas (39–41). Injection of retrograde tracers in ventral premotor cortex similarly showed labeled cells in frontal and prefrontal regions as well as parietal cortex (29, 30, 42, 43). In contrast, there is little evidence of input to ventral premotor cortex from auditory areas (44–46). Injection of anterograde and retrograde tracers in lateral auditory belt cortex (see anatomical overview of cortical auditory areas in Fig. 2) revealed connections to prefrontal areas, including area 45, but no cells were labeled in premotor cortex (47). Similarly, tracer injections in the parabelt auditory cortex and in rostral superior temporal gyrus did not label any cells in motor or premotor cortex (48). Petrides and Pandya (49) did find a small number of labeled cells in dorsal premotor cortex after injection of an anterograde tracer in auditory belt cortex, but most auditory projections were found to target prefrontal cortex. Similarly, Luppino et al. (50) found retrogradely labeled cells in the superior temporal sulcus after injections in dorsal, but not ventral, premotor areas. Overall, tracer studies show at best only sparse auditory input to frontal motor areas, although some studies using diffusion tensor imaging (DTI) in humans have suggested connectivity between posterior temporal areas and premotor cortex (51–53).

Figure 2. Auditory cortical areas in the macaque brain. The upper bank of the lateral sulcus is partially opened (cut) to show auditory cortical areas. Areas in blue represent the auditory core (AI, auditory area 1; R, rostral; RT, rostrotemporal), areas in yellow show the auditory belt (AL, anterolateral; CL, caudolateral; CM, caudomedial; ML, middle lateral; RM, rostromedial; RTL, rostrotemporal lateral; RTM, rostrotemporal medial), and areas in green indicate the auditory parabelt (CPB, caudal parabelt; RPB, rostral parabelt). AS, arcuate sulcus; CiS, circular sulcus; CS, central sulcus; IPS, intraparietal sulcus; LS, lateral sulcus; LuS, lunate sulcus; PS, principal sulcus; STGs, superior temporal gyrus; STS, superior temporal sulcus. Adapted from Ref. 73.

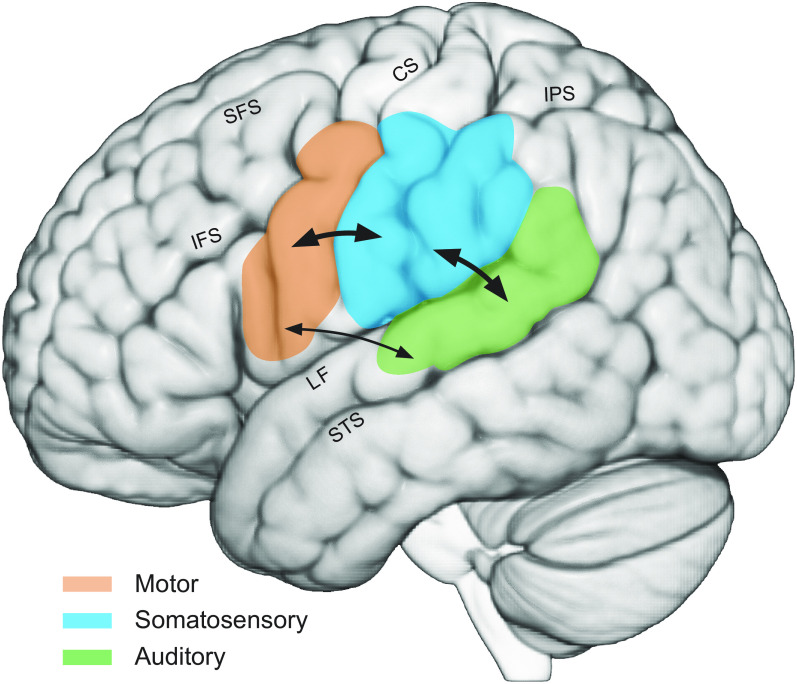

In summary, although there is speech perception-related activity in frontal motor areas, the activity could be somatic rather than motor. The primary perceptual activity is in ventral premotor cortex, which has only limited direct motor output to brain stem motor nuclei. In contrast, there are extensive somatosensory receptive fields and direct inputs from somatosensory and parietal cortex to both motor and premotor cortex (Fig. 3). It is unlikely that motor or premotor activity is auditory in any usual sense, given the absence of direct auditory projections. Thus, although there appears to be no direct pathway providing auditory input to cortical motor areas, somatosensory inputs, which are extensively tied to the auditory system, do provide direct motor area input. In the sections that follow, we review evidence for a broader somatosensory involvement in the context of the auditory system and in the perception of speech.

Figure 3. Overview of major areas implicated in speech perception. Auditory areas in the superior temporal lobe are indicated in green, frontal motor areas are in orange, and somatosensory areas, including primary somatosensory cortex and the inferior parietal lobe, are in blue. Arrows show reciprocal projections between auditory and somatosensory areas and between somatosensory and frontal motor areas. Tracer injections in monkeys also show projections between auditory cortex and area 44. However, little or no anatomical connectivity between premotor areas and auditory cortex has been reported; diffusion tensor imaging in humans suggests connectivity between auditory areas and ventral premotor cortex. CS, central sulcus; IFS, inferior frontal sulcus; IPS, intraparietal sulcus; LF, lateral fissure; SFS, superior frontal sulcus; STS, superior temporal sulcus.

AUDITORY-SOMATOSENSORY INTERACTIONS IN THE CEREBRAL CORTEX

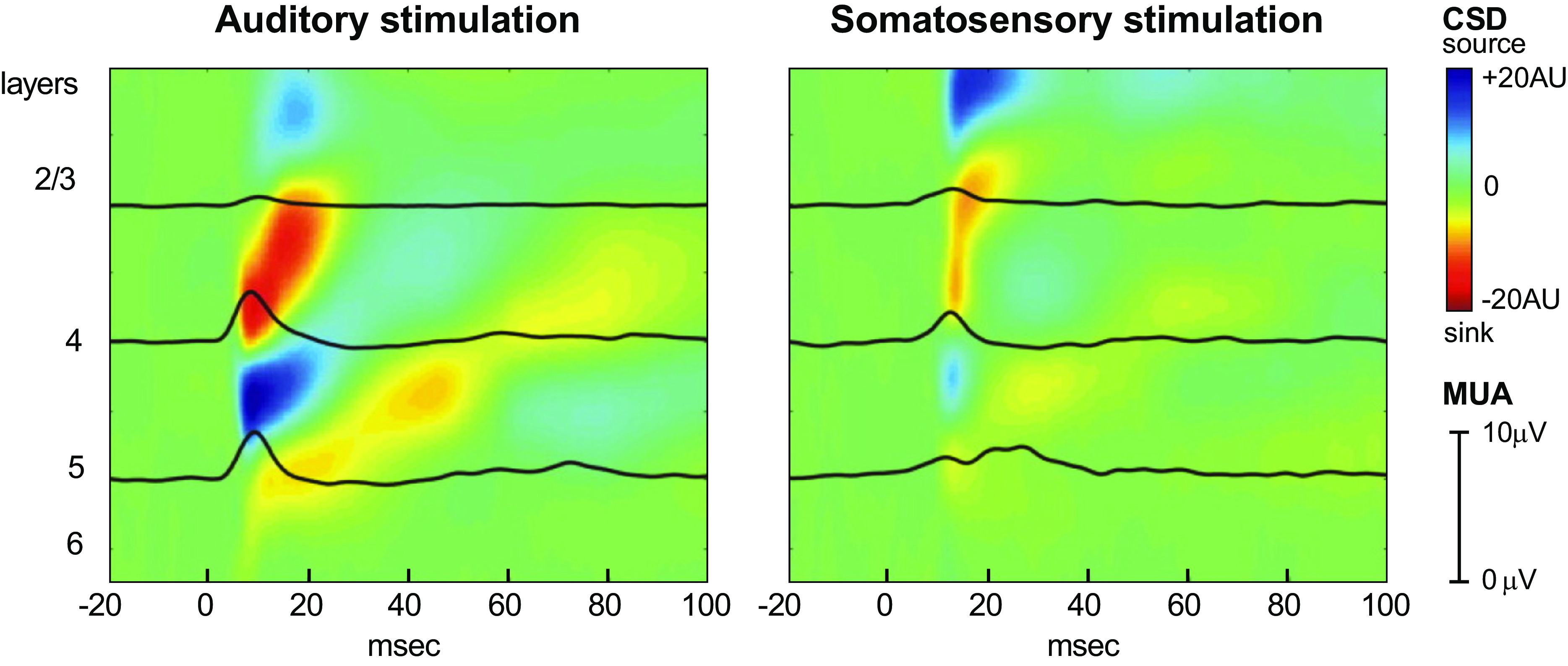

Neuronal interactions between the auditory and somatosensory systems occur at multiple levels of the brain, suggesting that somatosensory contributions to speech perception are situated within an extensive auditory-somatosensory network. We first present evidence for this broad multisensory interaction at the level of cerebral cortex, followed by a brief summary of other work showing that the interaction occurs from the cochlear nucleus on up. In nonhuman primates, there is electrophysiological evidence of this interaction in primary auditory cortex, where paired auditory-somatosensory stimulation (auditory clicks and electrical stimulation of the median nerve) resulted in greater activation of the primary auditory cortex than either modality alone (54). Responses to somatosensory stimuli have also been recorded in the caudomedial belt region (CM; see Fig. 2) of auditory cortex (Refs. 55–57; see Fig. 4). As in primary auditory cortex, combined auditory and somatosensory stimulation resulted in stronger auditory responses (58). Auditory cortex activity in response to somatosensory stimulation has been observed in other species. In core auditory cortex in the ferret, auditory neurons responded to tactile stimulation of air puffs delivered to the face, and some also responded to visual signals. Most of the neurons that showed a multisensory response were affected by more than one modality (59).

Figure 4. Multielectrode recordings in area CM of the auditory belt show responses to both auditory and somatosensory stimulation. Colors indicate current source density (CSD, indexing local synaptic activation) time-locked to onset of auditory and somatosensory stimulation across cortical layers in area CM of the auditory belt in a macaque. Black superimposed lines are multiunit activity profiles (MUA, indexing local action potential activity). Auditory stimulation was a pure-tone stimulus; somatosensory stimulation consisted of electrical stimulation of the contralateral median nerve. AU, arbitrary units. Adapted from Ref. 57.

There is also electrophysiological evidence, although considerably less, of this relationship in the other direction, with auditory stimuli resulting in activity in somatosensory areas of the brain. Cells in the primary somatosensory cortex of monkeys responded to auditory clicks that signaled movements of the arm and hand (60). In both cats and mice, there is evidence of the response of cells in somatosensory cortex to auditory stimulation (61, 62). Rats with lesions to somatosensory cortex have been shown to have deficits in auditory processing in a learned go/no-go task with auditory cues (63). In mice, a complete reversal of roles is observed in which a subset of cells in somatosensory cortex respond to sound but not to touch (64).

In humans, there is evidence that auditory stimulation activates somatosensory areas and vice versa. In neuroimaging studies, vibrotactile stimulation of the palms and fingers has been found to activate the posterior auditory belt (65). Responses in this region to combined stimulation are greater than the response to each individual modality (66). This result mirrors the data from nonhuman primates, suggesting that this region of the human auditory cortex may be analogous to the multisensory CM region seen in macaque and marmoset monkeys (Fig. 4). An auditory-somatosensory interaction is also seen in auditory association cortex contralateral to the side of somatosensory stimulation (67). In this study, subjects were also able to detect combined auditory and somatosensory stimulation faster than they were able to detect either unisensory event. In an example closer to speech, stimulation of the lower lip resulted in an improvement in an auditory stimulus detection task. Increased activation was observed in the primary auditory cortex in response to synchronous auditory and somatosensory stimuli (68).

Studies with humans also show that auditory stimuli elicit or modulate responses in traditionally defined somatosensory areas. In an auditory frequency discrimination task, there were robust frequency-dependent responses in somatosensory areas, specifically in the parietal operculum (69). In another study, auditory stimulation, when paired with electrical stimulation of the median nerve, significantly shortened the latency of the N20 evoked response that originates in primary somatosensory cortex (70). A different study of somatosensory evoked potentials found that responses to combined square-wave sounds and median nerve stimulation differed from the sum of responses to auditory and somatosensory stimulation alone. This effect was localized to the posterior parietal cortex and the parietal operculum (71). A similar magnetoencephalography (MEG) study revealed an interaction in second somatosensory cortex between auditory tone bursts and tactile stimulation of the thumb (72).
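The comparison between a combined response and the sum of the unisensory responses can be made concrete with a small sketch. The Python below is illustrative only and is not the analysis used in the cited studies: the function names and the amplitude values are hypothetical, and real evoked-potential analyses would add trial averaging, baseline correction, and statistical testing.

```python
def interaction_waveform(combined, auditory, somatosensory):
    """Return combined - (auditory + somatosensory), sample by sample.

    A value of zero means the combined response is purely additive;
    nonzero values indicate a departure from additivity.
    """
    if not (len(combined) == len(auditory) == len(somatosensory)):
        raise ValueError("all waveforms must have the same number of samples")
    return [c - (a + s) for c, a, s in zip(combined, auditory, somatosensory)]


def peak_interaction(combined, auditory, somatosensory):
    """Return (sample index, value) of the largest absolute departure."""
    diff = interaction_waveform(combined, auditory, somatosensory)
    idx = max(range(len(diff)), key=lambda i: abs(diff[i]))
    return idx, diff[idx]


# Hypothetical trial-averaged amplitudes (arbitrary units), not real data.
auditory = [0.0, 1.0, 2.0, 1.0, 0.0]
somatosensory = [0.0, 0.5, 1.0, 0.5, 0.0]
combined = [0.0, 1.5, 4.0, 1.5, 0.0]

print(peak_interaction(combined, auditory, somatosensory))
```

In this toy example the combined response exceeds the additive prediction only at the middle sample, which is the kind of deviation that would be read as an auditory-somatosensory interaction.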

ANATOMICAL CONNECTIVITY BETWEEN CORTICAL SOMATOSENSORY AND AUDITORY AREAS

There is extensive neuroanatomical connectivity at cortical and subcortical levels between auditory and somatosensory areas of the brain. Accordingly, auditory system activity may facilitate activation of the somatosensory system as well. In nonhuman primates, most somatosensory areas project to the CM region of the auditory belt. Injections of retrograde tracers into this region in marmoset and macaque monkeys revealed labeled cells in retroinsular and granular insula areas of the second somatosensory cortex (57, 73, 74). In marmoset monkeys, the CM region also received projections from the posterior parietal cortex (73) as well as from second somatosensory cortex and from the anterior bank of the lateral sulcus (75).

Studies using ferrets and gerbils as animal models have found similar anatomical connectivity between auditory and somatosensory areas. Injections of retrograde tracers into the core auditory cortex of ferrets revealed labeled cells in parts of primary and second somatosensory cortex (59). In gerbils, there is connectivity between the auditory cortex and the somatosensory and posterior parietal cortices, as revealed by injections into primary auditory cortex of a bidirectional tracer (76). In humans, similar evidence of connectivity has been found with diffusion tensor imaging (DTI), which indicates white matter connections between primary auditory cortex and primary and second somatosensory cortices (77).

AUDITORY-SOMATOSENSORY INTERSECTION AT THE COCHLEAR NUCLEUS, INFERIOR COLLICULUS, AND THALAMUS

There is overlap between somatosensory and auditory inputs all along the auditory pathway, as early as the cochlear nucleus. Auditory inputs converge with somatosensory inputs that arrive from the trigeminal system. Injections of retrograde tracers into the cochlear nucleus in guinea pigs reveal labeled cells in the spinal trigeminal nucleus, which transmits somatosensory information from the face. Likewise, anterograde tracer injections into the spinal trigeminal nucleus demonstrated anatomical connections to the marginal area of the ventral cochlear nucleus and the fusiform cell layer of the dorsal cochlear nucleus (78). Stimulation of the trigeminal ganglion, which receives inputs from sensory receptors in the face, also led to altered responses in the ventral cochlear nucleus (79). Therefore, even early on in the auditory pathway, at the level of the cochlear nucleus, there are auditory and somatosensory interactions. Moreover, many of the somatic inputs to the cochlear nucleus are orofacial and thus potentially contribute to a link between the somatosensory system and speech acoustics.

There is likewise auditory-somatosensory overlap at the level of the inferior colliculus. There are descending auditory cortex projections to the inferior colliculus, with a large percentage of terminations located in the external nucleus. There are also descending projections from the somatosensory cortex that terminate in the external nucleus, and these connections may influence the auditory pathway (80). A majority of the neurons in the external nucleus are influenced by both somatosensory and auditory peripheral inputs. Most of these cells are excited by a pure-tone auditory stimulus and inhibited by dorsal column stimulation, suggesting that these cells contain auditory and somatosensory information in their patterns of activity (81). Retrograde tracers injected into the external nucleus of the inferior colliculus reveal labeled cells in the cuneate, gracile, and spinal trigeminal nuclei, indicating that peripheral somatosensory information from the limbs and face passes through the inferior colliculus (82). For facial somatic inputs, more recent work has shown that electrical stimulation of the spinal trigeminal nucleus can modulate the responses to sounds in the external nucleus (83). Thus, there is extensive somatosensory and auditory interactivity in the inferior colliculus, at least some of which is orofacial in nature.

The medial geniculate nucleus of the thalamus is the final stop for auditory inputs before auditory cortex. Within the medial geniculate, cells within the magnocellular division (MGm) show responses to somatosensory stimulation (84). Lesion studies in rats point to the trigeminal pathway (85) and dorsal column pathway as MGm peripheral inputs (86). Injections of retrograde tracer into the CM region of the auditory belt in macaque monkeys revealed projections from the thalamus with labeled cells in MGm among other divisions of the medial geniculate nucleus (87). These data provide further evidence that the CM region of the auditory belt cortex plays a role in the auditory and somatosensory interaction, and since some of the interaction originates in the trigeminal system, this may contribute to somatic influences in speech processing in the brain.

To summarize, there is extensive anatomical connectivity between the auditory and somatosensory systems at all levels of the auditory pathway. At the level of the cerebral cortex, somatosensory stimuli are associated with activity in auditory cortex, and auditory stimuli elicit responses in somatosensory cortex. As highlighted below, some of these cross-modal interactions are learned (60) as may be needed in the context of somatosensory participation in speech perception.

TACTILE CONTRIBUTION TO SPEECH PERCEPTION

There is also evidence of interaction between the auditory and the somatosensory systems in the specific context of speech. Studies suggest that listeners can use haptic information to aid in speech perception. An early example is the so-called Tadoma method for speech perception, in which deaf-blind individuals can be trained to perceive speech haptically by placing their hand over the mouth and jaw of the speaker (88, 89). Tactile information can likewise facilitate speech perception in untrained listeners with normal hearing. Fowler and Dekle (90) had participants perform a speech identification task and simultaneously perform a haptic task where they placed their hand over the mouth of a speaker as in the Tadoma method. Judgments in the auditory task were biased by the syllable identity of the haptic syllable. For example, when the haptic syllable corresponded to the articulation of “ba,” listeners were more likely to identify the auditory stimulus as “ba.” A more recent study shows that haptic information, provided by placing the listener’s hand on the speaker’s face, improved perception of speech in noise for both blind and sighted adults (91). In the same vein, Gick et al. (92) showed that tactile information that matched auditory stimuli improved listeners’ speech perception by ~10% in a speech identification task. The participants in this study were not trained in using or interpreting haptic speech information.

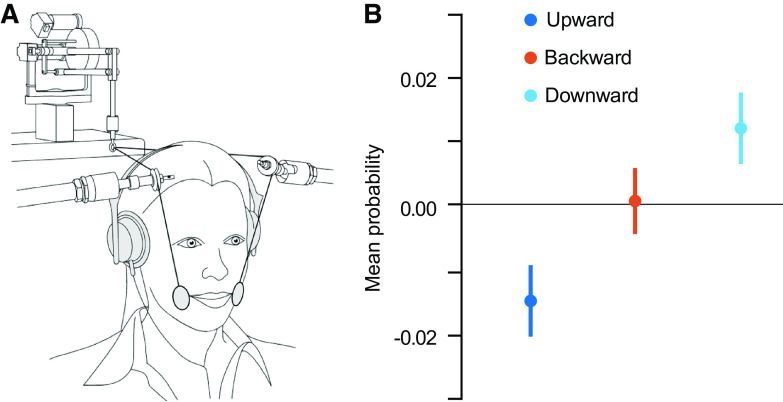

More recent studies have used other types of tactile stimulation and showed that they also affect speech perception. Air puffs applied to the right hand or to the neck were shown to increase the probability that listeners identified an auditory stimulus as a syllable starting with an aspirated plosive sound (93). Light taps did not produce the same effect, suggesting that the modulation of speech perception is specific to the type of somatosensory stimulation. The biased perception also depended on the timing of the tactile and auditory stimuli and was only seen if there was <200 ms of asynchrony when the puff followed the speech stimulus and up to 50 ms of asynchrony when the air puff preceded the speech stimulus (94). A subsequent study has shown that the perceptual bias induced by air puffs was largest when the speech stimuli were ambiguous (95). Somatosensory inputs, in the form of facial skin deformation, likewise altered perception (96), and the effects were specific to the direction of skin stretch (Fig. 5). Speechlike deformations of the facial skin altered the auditory perception of speech sounds, whereas deformations of the facial skin that do not occur in speech had no effects on speech perception. Similarly, facial skin deformation altered perceptual judgments of audiovisual stimuli (97). The contribution of somatosensory inputs to speech perception has also been demonstrated in the complete absence of auditory stimulation. In a task in which visual feedback guided participants to a target tongue posture, it was shown that speakers could categorize vowels based on tongue somatosensation alone (98).
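The asymmetric timing constraint on the air-puff effect can be written as a simple check. The sketch below takes the two limits (<200 ms when the puff follows the speech stimulus, up to 50 ms when it precedes it) from the text above; the function name, the sign convention, and the exact treatment of the boundary values are assumptions made for illustration.

```python
# Window limits taken from the text; how the boundary values themselves are
# treated (strict vs. inclusive) is an assumption made for illustration.
MAX_PUFF_LAG_MS = 200   # puff follows speech: asynchrony must be < 200 ms
MAX_PUFF_LEAD_MS = 50   # puff precedes speech: asynchrony of up to 50 ms


def within_integration_window(speech_onset_ms, puff_onset_ms):
    """True if the puff/speech asynchrony falls inside the asymmetric window."""
    asynchrony = puff_onset_ms - speech_onset_ms  # > 0: puff follows speech
    if asynchrony >= 0:
        return asynchrony < MAX_PUFF_LAG_MS
    return -asynchrony <= MAX_PUFF_LEAD_MS


# Speech onset at 0 ms; puff onsets before (negative) and after (positive).
for puff in (-100, -50, 0, 150, 200):
    print(puff, within_integration_window(0, puff))
```

With speech onset at 0 ms, a puff at 150 ms falls inside the window, whereas a puff 100 ms before speech onset does not.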

Figure 5. Tactile stimulation alters speech perception. A: participants’ facial skin was stretched in different directions during a speech categorization task, akin to facial skin deformation associated with speech production. B: differences in mean probability of vowel identification with and without skin stretch. The responses in the speech categorization task depended on the direction of the skin stretch, suggesting that the somatosensory information associated with skin stretch can modulate speech perception. Adapted from Ref. 96.

Auditory and Somatosensory Modulation of Evoked Potentials

The integration of auditory and somatosensory information has been demonstrated by showing a mutual modulation of their neural responses. In a comparison of audio-only speech perception and audio-haptic perception, in which the listener places their hand on the speaker’s lips and jaw, there were shortened latencies for auditory evoked potentials during the audio-haptic task (99). There was also a modulation of both the latency and the amplitude of the N1/P2 auditory potential during the audio-haptic task that was not present during the audio-only task (100). Moreover, the neural response to combined auditory and somatosensory stimulation showed some specificity to the place of stimulation and type of auditory stimulus. In particular, the N1 potential was significantly enhanced when participants heard the /pa/ speech sound with concurrent tactile lip stimulation. Similarly, but in a nonspeech example, the N1 potential was also enhanced when a finger-snapping sound was paired with stimulation of the middle finger (101).

Auditory effects on somatosensory evoked potentials have also been reported. Speech sounds were able to modify somatosensory evoked potentials (102). Specifically, speechlike patterns of facial skin deformation were applied while participants were exposed to either speech or nonspeech sounds. There was an increase in the magnitude of the somatosensory evoked potential only in combination with speech sounds, suggestive of speech-specific somatosensory processing. In other work, tactile stimulation of the lips during an auditory speech identification task also altered somatosensory neural processing (103). Participants listened to recordings of bilabial or dental syllables. On a subset of trials, listeners’ inferior lip was electrically stimulated simultaneously with the auditory stimulus. EEG recordings revealed that the beta-band power decreased after lip stimulation when the stimulation matched place of articulation of the auditory stimulus (i.e., bilabial syllables). Given that beta rhythms evoked by electrical stimulation are thought to originate in somatosensory cortex (104), these results are suggestive of somatosensory cortex involvement in the speech perception task. In other work, cortical oscillations showed evidence of integrating the spectral properties of auditory stimulation combined with the envelope of concurrent tactile stimulation (105).

Together, studies using simultaneous somatosensory and auditory stimulation show that auditory speech perception can be accessed and modified through tactile stimulation. Although tactile stimulation does not normally occur during speech perception, these studies show that auditory speech perception is not isolated or segregated from activity in the somatosensory system. Importantly, the specific timing and type of tactile input are crucial to the auditory perceptual effect, suggesting there is a specific or selective nature to the interaction.

SOMATOSENSORY CORTICAL INVOLVEMENT IN SPEECH PERCEPTION

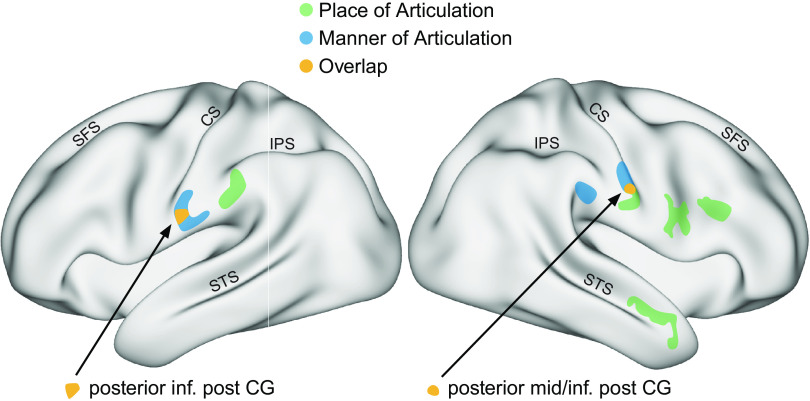

Even without direct tactile stimulation, some recent studies have shown somatosensory involvement in speech perception. Using functional magnetic resonance imaging (fMRI), Skipper and colleagues (11) showed that several somatosensory areas, including left supramarginal gyrus and Brodmann areas 2 and 3, showed more activity in response to auditory compared with audiovisual speech stimuli. There is also evidence that somatosensory cortex activity encodes features of the speech signal (Fig. 6). Correia et al. (106) showed that, in response to auditory stimuli, articulatory features could be decoded from somatosensory cortex with fMRI scans. Specifically, inferior somatosensory cortex showed sensitivity to both place and manner of articulation, suggesting that speech perception activates articulatory feature representations in somatosensory cortex. Similarly, Arsenault and Buchsbaum (107) used multivariate pattern analysis and were able to decode place of articulation during passive listening from the subcentral gyrus. Other work involving recordings from the cortical surface in patients who were undergoing clinical evaluation for epilepsy surgery shows responses in somatosensory areas during listening. Both a listening task and a speaking task led to responses in electrodes placed on precentral and postcentral gyrus (13), showing that, in addition to motor cortex, somatosensory cortex is also activated when listening to speech. Other electrocorticography studies have yielded similar results. High gamma-band responses during speech listening were observed in the postcentral gyrus and parietal somatosensory areas, as well as in temporal auditory areas and frontal motor areas (14). Yi et al. (108) had participants learn to discriminate between Mandarin tones (Fig. 7). Electrophysiological recordings from the cortical surface showed responses to speech stimuli in motor, premotor, and superior temporal areas as well as in somatosensory cortex. In addition, activity in the postcentral gyrus as well as in frontal and temporal areas correlated with trial-to-trial speech perceptual learning, suggesting auditory-related plasticity in somatosensory cortex.

Articulatory features can be decoded from functional magnetic resonance imaging (fMRI) activity in somatosensory cortex. Participants listened to auditory speech stimuli in a scanning session. Colors indicate cortical areas from which articulatory features of the presented syllables such as manner of articulation or place of articulation could be decoded. Place and manner of articulation can both be decoded in the inferior (lateral) postcentral and supramarginal gyri. An area in both left and right inferior postcentral gyrus showed overlap between manner and place of articulation. These data suggest that somatic brain areas code articulatory features during speech perception. CS, central sulcus; IPS, intraparietal sulcus; post CG, postcentral gyrus; SFS, superior frontal sulcus; STS, superior temporal sulcus. Adapted from Ref. 106.

Cortical surface recordings show sensitivity to speech sounds and speech perceptual learning in somatosensory cortical areas, among other regions. Locations are depicted on the cortical surface where direct cortical recordings showed a high-gamma response to speech sounds (in black) or where responses were associated with perceptual learning over the course of the experiment (in yellow). English-speaking listeners were trained to discriminate between Mandarin tones. CS, central sulcus. Adapted from Ref. 108.

In other work, it has been found that damage following stroke to somatosensory areas in the inferior parietal cortex is associated with deficits in speech perception. Deficits in a phoneme discrimination task were found after damage to the left intraparietal sulcus (109). In another study, both phoneme discrimination and identification were associated with damage to the left supramarginal gyrus and parietal operculum (110). A recent study showed that damage to the left supramarginal gyrus, among other areas, was associated with deficits in an auditory nonword discrimination task (111). These lesion studies thus suggest that areas in the inferior parietal lobe contribute to auditory speech perception, in line with suggestions that this area is involved in multimodal transformations during speech perception (6).

REPEATED AUDIO-TACTILE PAIRING LEADS TO SOMATOSENSORY INVOLVEMENT IN SPEECH PERCEPTION

There is evidence that repeated pairing of somatosensory and auditory stimuli, which occurs during both speech production and speech motor learning, may lead to somatosensory responses to auditory inputs. In nonhuman primates, this interaction can be seen through a learned activation of somatosensory neurons in response to an auditory cue. Monkeys trained to perform arm movements in response to a sound showed a time-locked response to the auditory signal in area 5 of parietal cortex (112, 113). In a similar experiment, monkeys were trained to lift a lever in response to an auditory cue. Cortical field potentials showed responses to the auditory cue in primary auditory, auditory association, premotor, motor, and somatosensory cortices (114). Although these studies trained monkeys to perform a movement in response to an auditory cue, a similar phenomenon was observed when monkeys were trained in a haptic memory task, namely, there was a learned response in somatosensory cortex to the auditory input (115).

Evidence that auditory-somatosensory interactions in speech are learned is also found in work on human development that shows that, starting at an early age, somatosensory information may affect speech perception. Infants as young as 4 mo were less likely to look at a face matching an auditory stimulus when at the same time they were making a similar lip movement (116). Moreover, whereas adults show changes to speech perception in response to simultaneous air puff stimulation (93), 6- to 8-mo-old infants do not (117), suggesting that the integration of tactile and auditory modalities develops with age. Similarly, facial skin stretches bias young adults’ performance in a concurrent speech perception task, but this bias was weaker with children of ages 5–6 yr (118).

In adults, speech perception was altered after a training period that combined auditory perceptual judgments with facial skin stretch, where the latter served as a model of the sensory pairing that occurs during speech motor learning (119). When facial skin stretches were applied during speech motor learning, it was shown that although the skin deformation did not affect the amount of motor learning, the associated recalibration of speech perception was dependent on the direction of skin stretch (120). In addition, the effect of facial skin stretch on speech perception is correlated with individual variability in speech production, which is in line with the idea that speech production drives a learned somatosensory-auditory mapping that is involved in speech perception (121).

The evidence laid out here suggests that somatosensory contributions to speech perception may develop over time and may be related to the paired auditory-somatosensory stimulation that occurs during speech production and learning. The basic idea is that speech production is accompanied by systematically paired auditory-somatosensory stimulation, such that production yields, in effect, an auditory-somatosensory map. In speech perception, this mapping is accessed through auditory stimulation. When a unit in the network is activated, other related units, auditory and somatosensory, are activated as well.

DISCUSSION

The evidence reviewed here shows in various ways that the somatosensory system contributes to speech perception. The present proposal is not intended as a variant of the motor theory of speech perception, nor is it intended to replace the idea that speech perception is primarily auditory. Rather, it is further evidence that speech perception sits within an extensive multisensory network, some of which is learned. Somatic inputs, when present and appropriately timed, can alter perception, and this indicates specificity to the intersensory relation. The most significant aspects of this relationship are that speech elicits activity in somatosensory cortex (Ref. 108; Fig. 7) and articulatory features can be decoded from somatosensory cortex in functional MRI recorded during speech perception (Ref. 106; Fig. 6). Tongue somatosensation in the absence of auditory input allows for vowel categorization (98), and lesions to the inferior parietal cortex disrupt speech perception (109–111). The addition of somatosensory cortex to the roster of players supporting speech perception adds to our understanding of the neurobiology of speech perception.

Much of the evidence presented here has been indirect, based on patterns of neuroanatomical connectivity and data from a variety of species, with examples that are not directly related to speech perception. Much of the data comes from work with nonhuman primates and other mammals and in some cases limb movement because of the extensive available literature; there is nothing comparable in work with humans. These studies nevertheless inform our understanding of what we might expect in humans and in speech.

In addition to the evidence for somatosensory involvement in speech perception, this review has touched on three further issues. The first was whether somatosensory involvement in auditory perception is a speech-specific adaptation. The evidence reviewed here suggests that speech is not special in this regard. We have shown that there is broader somatosensory-auditory interaction and interconnectivity between auditory and somatosensory systems, which is found in nonspeech contexts and in nonhuman species. Much of the evidence for somatosensory-auditory interaction is not specific to orofacial somatosensation. Indeed, even tactile stimulation that does not naturally occur during speech, such as air puffs applied to the hand or neck (93, 95), modulates speech perception. This suggests that the auditory-somatosensory interaction that is observed in speech may have some speech-specific attributes but builds upon a preexisting neural infrastructure that supports somatosensory-auditory integration more generally.

A second issue, in the specific case of speech perception, is whether and how an auditory-somatosensory mapping is acquired. We suggest that it is built upon existing neuroanatomical connectivity in combination with the systematic pairing of auditory with somatosensory stimulation that occurs during speech learning and production. A recent study indeed suggests that systematic pairing of facial skin stretches with a concurrent auditory stimulus affects subsequent speech perception (119). Work in nonhuman primates shows a similar effect, namely, learning-related activity in somatosensory cortex in response to auditory inputs (60, 112–115). Developmental work in humans is also consistent with the idea that an auditory-somatosensory relationship is learned (117, 118) and that there is specificity with respect to timing and type of successful stimulation (93, 94, 96).

A third issue is whether the activity observed in somatosensory areas during speech perception plays a causal role in perception. In other words, is activity in these areas merely a by-product of auditory stimulation that, during speech production, is systematically matched with somatosensory stimulation, or does it reflect a substantial contribution to perception? The evidence from fMRI and electrocorticography is, strictly speaking, correlational: these studies show that somatosensory area activity is elicited during speech perception but not whether these areas contribute causally. However, both lesion studies and research that involves tactile stimulation during speech perception suggest a causal relationship. Deficits in speech perception are observed following lesions to the inferior parietal lobe (109–111). Stretches of the facial skin similar to movements that occur during speech production are shown to alter speech perception (96), and their effect on perception is dependent on the direction of the stretch, in other words, on the type of somatosensory input.

Together with prior evidence that both the visual and the motor system contribute to speech perception, the present article shows that speech perception is not just an auditory process but rather a fundamentally multimodal sensorimotor one. The involvement of the motor, auditory, and somatosensory systems in both speech production and perception is also in line with research in recent decades, which has suggested strong links between speech production and perception, as shown by correlations between perception and production performance and overlapping neural activity during speaking and listening. Speech production-perception links may reflect common auditory-somatosensory mappings that both drive speech production and serve as targets in speech perception. Common multimodal sensory mappings may serve as a source of production-perception links and drive concurrent development and learning.

Future studies are necessary to address some of the issues raised in the present article. For example, the causal contribution of somatosensory cortical areas to speech perception could be tested with noninvasive brain stimulation. If somatosensory cortex is causally involved in speech perception, disruption of activity in this area should affect performance in a subsequent speech perception task. As reviewed above, there is evidence that somatosensory involvement in speech perception is parasitic upon a broader network for auditory-somatosensory interactions that is already in place in nonhuman primates. On the other hand, there is also evidence for specificity, suggesting that systematic pairing of auditory and somatosensory stimulation leads to a learned mapping that is specific to the pairing that occurs during speech production. Future studies are necessary to further characterize both the unique and nonspecific aspects of the auditory-somatosensory interaction. For example, in the case of specific learned sensory mappings, temporary suppression of neural activity in lip area somatosensory cortex might only affect speech perception of lip-related speech sounds. Additional studies are necessary to further characterize the precise role of the somatosensory system and the extent to which it can be disentangled from the role that classically defined motor areas in the frontal cortex play during speech perception.

GRANTS

This work was supported by National Institute on Deafness and Other Communication Disorders Grant R01DC017439 to D. J. Ostry.

AUTHOR CONTRIBUTIONS

M.K.F. and D.J.O. conceived and designed research; M.K.F. and D.J.O. prepared figures; M.K.F., B.C.L., and D.J.O. drafted manuscript; M.K.F., B.C.L., and D.J.O. edited and revised manuscript; M.K.F., B.C.L., and D.J.O. approved final version of manuscript.

REFERENCES

Articles from Journal of Neurophysiology are provided here courtesy of American Physiological Society
