
ECG Classification Using an Optimal Temporal Convolutional Network for Remote Health Monitoring

Abstract

Increased life expectancy in most countries results from continuous improvements at all levels, from medicine and public health services and environmental and personal hygiene to the use of the most advanced technologies by healthcare providers. Despite these significant improvements, especially at the technological level in the last few decades, access to healthcare services and medical facilities is not equally distributed worldwide. Indeed, the main daily beneficiaries of the most advanced healthcare services and technologies are mostly residents of big cities, whereas residents of rural areas, even in developed countries, have major difficulties accessing even basic medical services. This may lead to serious gaps in timely medical advice and assistance and may even cause death in some cases. Remote healthcare is considered a serious candidate for facilitating access to health services for all, as it can use the most advanced technologies while providing high-quality diagnosis and ease of implementation and use. ECG analysis and related cardiac diagnosis techniques are basic healthcare methods that provide rapid insights into potential health issues, either through simple visualization and interpretation by clinicians or through automatic detection of potential cardiac anomalies. In this paper, we propose a novel machine learning (ML) architecture based on temporal convolutional networks (TCN) for the classification of ECG signals into five heart diseases. The proposed design, which implements a dilated causal one-dimensional convolution on the input heartbeat signals, appears to outperform all existing ML methods with an accuracy of

1. Introduction

Electrocardiogram (ECG) is a rapid bedside test that measures the electrical activity generated by the heart as it contracts. It is commonly used to recognize diverse heart conditions such as arrhythmia, cardiomyopathy, coronary heart disease, and many other cardiovascular diseases. The inspection has traditionally been carried out by physicians and clinicians, a time-consuming procedure that requires significant medical and human resources to process the large amount of ECG data [1]. Moreover, the diversity of ECG signals raises several issues that make ECG inspection even more challenging. For example, the ECGs of two healthy people may not be completely similar, two patients suffering from the same heart disease may show different signs in their ECGs, and two different diseases may produce very similar ECG signals. There appear to be no definitive standards for the diagnosis process [2]. For that reason, artificial intelligence (AI) methods are needed: they learn from accumulated experience and can therefore find hidden patterns that humans cannot easily find.

The computerized analysis of ECG signals was mainly intended to improve the diagnosis process, save time, and serve rural and remote regions where medical specialists are not always available [3]. To this end, millions of ECGs are recorded worldwide every year, and most of them are analyzed automatically. However, an erroneous automated analysis is quite likely, and it is especially risky when inexperienced clinicians endorse the automated results without further analysis. Such clinical mismanagement often ends in useless or even dangerous treatment. Thus, ECG results should be read and approved by well-experienced physicians. At the same time, doctors strongly recommend modernizing existing computerized ECG analysis methods and improving their robustness for more reliable care.

Machine learning (ML) has proven highly successful in various classification problems. This opened the door to its use in ECG analysis, and various ML-based methods have been reported in this domain. In [4], ECG signals of healthy people are collected and compared with the ECG signals under test using cross-correlation techniques. This allows for the detection of ECG signals of patients with myocardial infarction with an accuracy of

Convolutional neural networks (CNNs) are mainly intended for visual imagery analysis and computer vision tasks. However, given their great success in classification tasks, they have also been applied to automated ECG analysis. Nevertheless, the performance of convolutional networks can degrade due to impure (noisy) data as well as class imbalance, i.e., an unequal number of examples per class. Effective data augmentation of the raw data is therefore often required before training a convolutional network that is robust to these data issues. In this paper, we present an optimal architecture for sequential data processing based on 1D Temporal Convolutional Networks (TCN). A five-class database named ECG5000 [13], originally derived from the BIDMC Congestive Heart Failure Database [14], is considered in our study. The ECG signals in this database are clean (noise-free), which makes them ready for use without any preprocessing. Each sample contains a single heartbeat. The ECG5000 database is enhanced with three data augmentation techniques to improve the performance of the network. The network involves several dilated causal one-dimensional convolutions with padding, so that the output signal has the same length as the input heartbeat. The convolution layers are followed by a softmax unit that assigns each heartbeat to its class, and the network is then evaluated. Owing to their particular internal design, TCNs are lighter, faster, and more stable than conventional convolutional networks. This enables efficient embedded systems suited to remote health monitoring, so that ECG analysis devices can be built on low-complexity, low-power hardware.

The rest of the paper is organized as follows. Section 2 provides background on the machine learning methods used in ECG analysis. Section 3 presents our proposed temporal convolutional network architecture in detail, describes the data augmentation techniques applied to the ECG data, specifies the values used in the training process, and explains the criteria used to evaluate the network. Section 4 presents the results of the multiple trials we carried out and compares our optimized model with some existing machine learning networks implemented on the same dataset. Section 5 summarizes and concludes the paper.

2. Background and Related Work

A large part of the world's population lives in remote or rural areas. In these areas, besides other basic needs of life, access to medical facilities ranges from difficult to severely limited, and doctors are scarce. The lack of timely medical advice and assistance for patients, due to distance and inadequate infrastructure, leads to critical situations and may cause death in some cases. Remote healthcare is considered a serious candidate for facilitating access to health services for all. Sensing and actuating technologies, together with big data analysis, provide the basic building blocks for remote health monitoring (RHM). The concept of RHM is not new, but newer and more efficient systems are still being designed to overcome the weaknesses of existing ones, especially for rural areas. Indeed, in rural areas, the main challenges relate to communication latency and bandwidth availability, autonomy and energy consumption, and the need for low-cost devices.

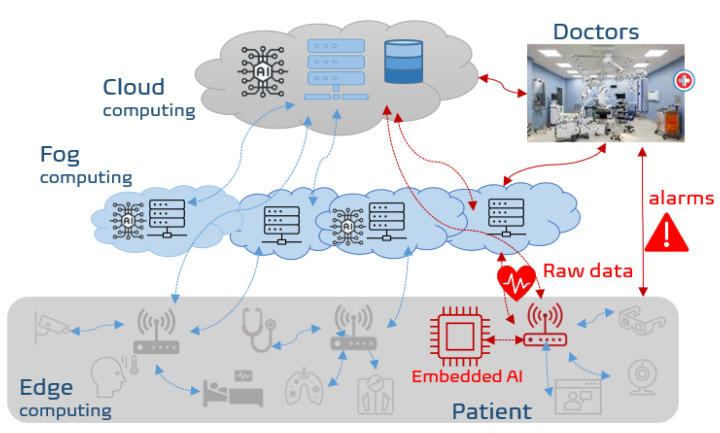

In the context of SAFE-RH (Sensing, ArtiFicial intelligence, and Edge networking towards Rural Health monitoring), a framework is proposed to address the above-mentioned problems by sending (and thus permanently recording) only the relevant data. These relevant data are the alarms generated, mostly by AI- or machine learning (ML)-driven methods, which significantly limits bandwidth cost and communication overhead. Figure 1 shows the overall architecture of the proposed RHM system, where the ECG-related flow is depicted in red.

Overall framework of remote health monitoring in rural areas. In red is the flow related to ECG data. The classification is done by embedding intelligence near sensors and sending only alarms. If necessary, raw data can be sent to fog or cloud for further analysis or storage.

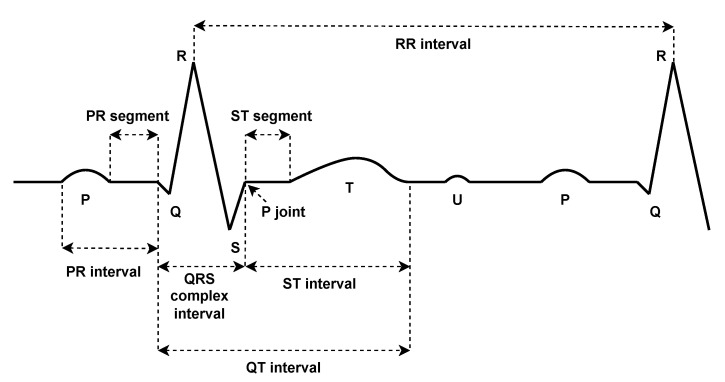

The study of ECG signals has become an essential tool in the clinical diagnosis of various heart diseases. It relies mainly on the detailed characteristics of the ECG signal. An ECG signal is composed of numerous consecutive heartbeats, each consisting of different parts, namely the P wave, the QRS complex, and the T wave (see Figure 2). A normal heartbeat is characterized by typical amplitude values for its peaks (P, Q, R, S, T, and U) and typical durations for its intervals (PR, RR, QRS, ST, and QT) and segments (PR and ST). A deviation in any of these values indicates a certain abnormality at the diagnosis level. More details on an ECG beat can be found in [15]. These peaks, intervals, and segments are called the ECG features, on which ECG classification is mainly based. ECG classification is usually a multi-class problem, with classes including, but not limited to, normal (N), right bundle branch block (RBBB), and left bundle branch block (LBBB). An ECG classification process involves multiple steps: signal preprocessing, feature extraction, normalization, and classification. In the first step, signals are filtered to remove any noise that could affect feature extraction, including powerline interference [16], EMG noise [17], baseline wander [18], and electrode motion artifacts [19]. Various techniques have been proposed for noise removal, such as low- and high-pass linear-phase filters. For baseline adjustment, techniques such as linear-phase high-pass filters, median filters, and mean median filters are usually employed. In the second step, the main features are extracted to be used as inputs to a classification model.
The commonly used techniques for this purpose are: Continuous Wavelet Transform (CWT) [20], Discrete Wavelet Transform (DWT) [21], Discrete Fourier Transform (DFT) [22], Discrete Cosine Transform (DCT) [23], S-Transform (ST) [24], Principal Component Analysis (PCA) [25], Pan–Tompkins Algorithm [26], Daubechies Wavelet (Db4) [27], and Independent Component Analysis (ICA) [28]. For the normalization of the features, two main approaches are commonly used: Z-score [29] and Unity Standard Deviation (SD) [30]. Finally, in the classification stage, different models are utilized such as: Multilayer Perceptron Neural Network (MLPNN) [31], Quantum Neural Network (QNN) [32], Radial Basis Function Neural Network (RBFNN) [33], Fuzzy C-Means Clustering (FCM) [34], ID3 Decision Tree [35], Support Vector Machine (SVM) [36], Type2 Fuzzy Clustering Neural Network (T2FCNN) [37], and Probabilistic Neural Network (PNN) [38].
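Two of the steps listed above, median-filter baseline adjustment and Z-score normalization, can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the pipeline of any cited work: the function names, the 51-sample window, and the synthetic drift-plus-spike signal are all hypothetical choices made for the example.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def remove_baseline_median(signal: np.ndarray, window: int = 51) -> np.ndarray:
    """Subtract a running-median estimate of the baseline from `signal`.

    `window` (in samples) must be odd. The default of 51 is illustrative,
    not a value taken from the paper.
    """
    if window % 2 == 0:
        raise ValueError("window must be odd")
    half = window // 2
    # Repeat the edge values so the baseline has the same length as the input.
    padded = np.pad(signal, half, mode="edge")
    # Each row of the view is one window centred on an input sample.
    baseline = np.median(sliding_window_view(padded, window), axis=1)
    return signal - baseline


def zscore(features: np.ndarray) -> np.ndarray:
    """Z-score normalization: subtract the mean, divide by the standard deviation."""
    centred = features - features.mean()
    std = features.std()
    return centred / std if std > 0 else centred


# Usage: a linear drift (baseline wander) with one narrow "beat" every 50 samples.
t = np.arange(500)
x = 0.01 * t
x[t % 50 == 25] += 1.0
clean = remove_baseline_median(x)
normalized = zscore(clean)
```

A median is used rather than a mean because a few large peaks inside the window barely move the median, so the narrow spikes survive while the slow drift is removed.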

Various ECG classification approaches have been reported. In [39], the authors addressed a four-class ECG classification problem using the RR intervals as inputs. The data were collected from the MIT-BIH arrhythmia database. The raw signals were first subjected to baseline adjustment. After that, the RR intervals were extracted using DWT and then normalized using Z-score. The classification was done using FCM with an accuracy of

Convolutional neural networks (CNNs) have also been applied to ECG analysis. In [46], a 2D CNN approach for ECG classification is investigated. The sequential vectors representing the heartbeats are transformed into binary images via one-hot encoding [47] before being fed to the network. Such images capture both the morphology of the heartbeats and the temporal relationship between every two adjacent heartbeats. The learning process is accelerated using ADADELTA [48], a per-dimension learning rate method for gradient descent. The network also uses biased dropout [49] to mitigate overfitting. When tested on the MIT-BIH arrhythmia database, the network was shown to be highly effective in detecting various cardiovascular diseases. Another approach is presented in [50], where a 1D CNN is proposed for arrhythmia detection. The sequential data are extracted from two leads and fed directly into the network without any preprocessing. Although the network achieves high accuracy on the MIT-BIH database, some classes are poorly recognized, possibly because of data impurity and class imbalance.

3. Method

3.1. Proposed Architecture

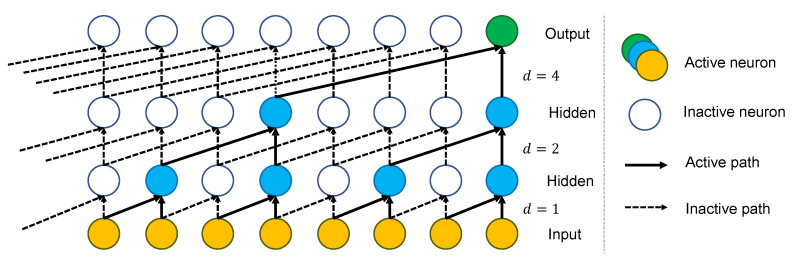

Until recently, sequential data were mostly analyzed and modelled using recurrent architectures such as recurrent neural networks (RNNs) and LSTM networks. However, the most problematic issue in training such networks is vanishing or exploding gradients; as a result, the network often fails to learn dependencies on long-past values. For that reason, convolutional neural networks, widely used for computer vision and visual imagery tasks, are now also used for signal processing under the name 1D Temporal Convolutional Network (1D TCN). The convolution in a TCN is one-dimensional, causal, and dilated. A causal convolution means that the computation at a given unit of the network depends only on present and past values, which suits sequential data where each point of a sequence depends on previous ones. Dilation, in turn, inserts gaps between the kernel taps so that the receptive field of the convolution layer can be enlarged without additional parameters. Note that the receptive field is the region of the input that produces a given feature at the output. For a kernel of size k, the receptive field (R) of a dilated convolution layer with dilation factor d is

$$R = (k - 1)\, d + 1$$

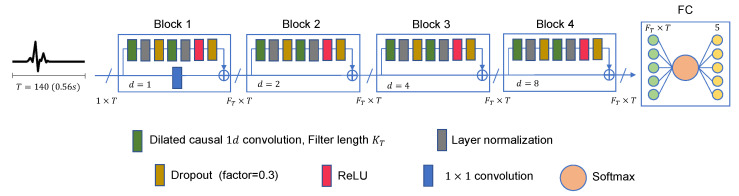

To build a TCN, multiple convolution layers are stacked on top of each other, as shown in Figure 3. The dilation factor of layer

The convolution in a TCN layer is defined as follows:

$$F(t) = (x *_d f)(t) = \sum_{i=0}^{k-1} f(i)\, x_{t - d \cdot i}$$

where x is the input sequence, d is the dilation factor, and f is a convolution filter of size k applied at time t. It should be noted that an input sequence of length n fed into the vanilla 1D convolution layer above generates an output sequence of length
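The dilated causal convolution described above, which combines k filter taps spaced d samples apart and looks only at present and past inputs, can be sketched directly in NumPy. This is a minimal single-channel illustration; the function name is hypothetical, and zero-padding on the left by (k − 1)·d samples is an assumption that keeps the output the same length as the input.

```python
import numpy as np


def dilated_causal_conv1d(x: np.ndarray, f: np.ndarray, d: int) -> np.ndarray:
    """Compute F(t) = sum_{i=0}^{k-1} f[i] * x[t - d*i] for every t.

    Positions t - d*i < 0 are treated as zeros (left padding of (k-1)*d),
    so the output has the same length as the input and F(t) never
    depends on samples after t, i.e. the convolution is causal.
    """
    k = len(f)
    pad = (k - 1) * d
    xp = np.concatenate([np.zeros(pad), np.asarray(x, dtype=float)])
    out = np.zeros(len(x))
    for i in range(k):
        # Tap i looks back d*i steps: x[t - d*i] == xp[t + pad - d*i].
        start = pad - d * i
        out += f[i] * xp[start:start + len(x)]
    return out


# Usage: a 2-tap filter with dilation 2 adds each sample to the one two steps earlier.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
y = dilated_causal_conv1d(x, f=np.array([1.0, 1.0]), d=2)
print(y.tolist())  # [1.0, 2.0, 4.0, 6.0, 8.0, 10.0]
```

Changing a future sample (for example `x[4]`) leaves every earlier output unchanged, which is exactly the causality property the TCN relies on.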

The full TCN model that we propose in this work is demonstrated in Figure 4. It is made up of multiple residual blocks, followed by a fully connected layer (FC), a softmax function, and a classifier. Each block contains a group of layers and a skip connection that links its input to its output. A
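Because the block description above is truncated in this copy, the following NumPy sketch shows only a generic TCN residual block of the kind commonly used in the literature, not the exact block of Figure 4: two dilated causal convolutions with ReLU, plus a skip connection that uses a 1×1 convolution when the channel counts differ. The normalization and dropout layers mentioned elsewhere in the paper are deliberately omitted, and all names, shapes, and random weights are hypothetical.

```python
import numpy as np


def causal_conv(x, w, b, d):
    """Multi-channel dilated causal convolution.

    x: (C_in, T) input, w: (C_out, C_in, k) weights, b: (C_out,) biases.
    Returns an array of shape (C_out, T).
    """
    c_out, _, k = w.shape
    T = x.shape[1]
    pad = (k - 1) * d
    xp = np.pad(x, ((0, 0), (pad, 0)))  # zeros on the left only (causal)
    y = np.zeros((c_out, T))
    for i in range(k):
        start = pad - d * i
        # Mix input channels for tap i: (C_out, C_in) @ (C_in, T) -> (C_out, T).
        y += w[:, :, i] @ xp[:, start:start + T]
    return y + b[:, None]


def relu(x):
    return np.maximum(0.0, x)


def residual_block(x, params, d):
    """Generic (assumed) TCN residual block: conv -> ReLU -> conv -> ReLU, plus skip."""
    h = relu(causal_conv(x, params["w1"], params["b1"], d))
    h = relu(causal_conv(h, params["w2"], params["b2"], d))
    if "w_skip" in params:  # 1x1 convolution to match channel counts
        skip = causal_conv(x, params["w_skip"], params["b_skip"], 1)
    else:
        skip = x
    return relu(h + skip)


# Usage: one 140-sample, single-channel beat mapped to 16 feature channels.
rng = np.random.default_rng(0)
c_in, c_out, k = 1, 16, 3
params = {
    "w1": rng.normal(0, 0.1, (c_out, c_in, k)), "b1": np.zeros(c_out),
    "w2": rng.normal(0, 0.1, (c_out, c_out, k)), "b2": np.zeros(c_out),
    "w_skip": rng.normal(0, 0.1, (c_out, c_in, 1)), "b_skip": np.zeros(c_out),
}
x = rng.normal(size=(c_in, 140))
y = residual_block(x, params, d=2)
print(y.shape)  # (16, 140)
```

The skip path lets the block learn a correction on top of its input, which is one reason residual TCNs tend to train with stable gradients.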

TCN architecture for ECG heartbeat classification. The input signal is made of 140 points (0.56 s). Number of filters is

Temporal convolutional networks have been shown to outperform recurrent neural networks [52]. In terms of memory, the sparse kernels in TCNs allow a time series to be predicted from its long-past values using very few parameters. Recurrent networks achieve this through cycles and dense recurrent connections, resulting in a large number of parameters. Moreover, the internal structure of a TCN is independent of the length of the input signal, which allows excessively long sequences to be processed by a small TCN. Additionally, the receptive field size of such networks is easily tuned by modifying the number of layers, the filter size, and the dilation factors, which makes it easy to control the model's memory for various requirements. In terms of speed, temporal convolutional networks are much faster than recurrent networks because they compute their outputs in parallel. Furthermore, the structure of a TCN leads to more stable gradients, since gradients propagate along the layers rather than along the temporal direction (also thanks to the residual connections). Temporal convolutional networks are not without flaws: they do not perform well under domain transfer, especially from a domain that requires a short history to one that requires a long one.
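The claim that the receptive field is tuned through the number of layers, the filter size, and the dilation factors can be made concrete with a small calculation. Each dilated causal convolution with kernel size k and dilation d adds (k − 1)·d past samples, so a stack has R = 1 + (k − 1)·Σd, which reduces to (k − 1)·d + 1 for a single layer. The sketch below assumes dilations that double from layer to layer (1, 2, 4, ...), a common TCN choice; the paper's own dilation values are truncated in this copy, and the function names are hypothetical.

```python
def receptive_field(kernel_size: int, dilations: list[int], convs_per_layer: int = 1) -> int:
    """Receptive field of stacked dilated causal convolutions.

    Each convolution with dilation d adds (kernel_size - 1) * d past samples,
    so R = 1 + (kernel_size - 1) * convs_per_layer * sum(dilations).
    """
    return 1 + (kernel_size - 1) * convs_per_layer * sum(dilations)


def layers_needed(kernel_size: int, target: int, convs_per_layer: int = 1) -> int:
    """Fewest layers with dilations 1, 2, 4, ... whose receptive field covers `target` samples."""
    n = 0
    while receptive_field(kernel_size, [2 ** i for i in range(n)], convs_per_layer) < target:
        n += 1
    return n


print(receptive_field(3, [1, 2, 4, 8]))  # 31
print(layers_needed(3, 140))             # 7
```

With 140-sample beats and k = 3, seven exponentially dilated layers (or six blocks of two convolutions each) are enough for the last output to see the entire heartbeat, while the parameter count grows only linearly with depth.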

3.2. Data Augmentation

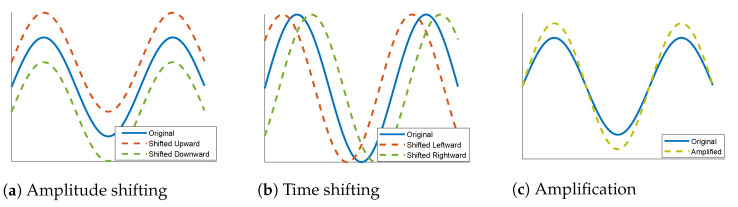

In classification problems, the lack of sufficient training samples for certain classes is often addressed with data augmentation techniques. These add new copies of existing samples of the under-represented classes after applying minor alterations, or use machine learning models to generate new examples in the latent space of the original data. Various techniques for augmenting image data can be found in the literature, such as rotation, flipping, cropping, and color transformation [53]. Such mechanisms preserve the main features of an image while providing a larger training space. Augmentation is less straightforward for one-dimensional data. In this work, we propose three simple types of data augmentation for ECG signals: amplitude shifting, time shifting, and amplification. Amplitude shifting, shown in Figure 5a, moves the signal a certain number of steps upward or downward. Time shifting, shown in Figure 5b, moves the signal a given number of steps to the right or to the left. Amplification, shown in Figure 5c, vertically stretches the signal by a certain ratio. Like conventional 2D data augmentation techniques, these techniques, along with many others, provide additional training data while preserving the main features of a signal.
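The three augmentations described above can be sketched as simple array operations. This is an illustration under assumptions, not the authors' code: the fill rule for time shifting (repeating the edge value), scaling about zero for amplification, and the random parameter ranges in `augment` are all hypothetical choices.

```python
import numpy as np


def amplitude_shift(x: np.ndarray, offset: float) -> np.ndarray:
    """Move the whole beat upward (offset > 0) or downward (offset < 0)."""
    return x + offset


def time_shift(x: np.ndarray, steps: int) -> np.ndarray:
    """Move the beat right (steps > 0) or left (steps < 0), keeping its length.

    Vacated samples are filled with the nearest edge value (an assumption).
    Requires abs(steps) < len(x).
    """
    if steps > 0:
        return np.concatenate([np.full(steps, x[0]), x[:-steps]])
    if steps < 0:
        return np.concatenate([x[-steps:], np.full(-steps, x[-1])])
    return x.copy()


def amplify(x: np.ndarray, ratio: float) -> np.ndarray:
    """Stretch the beat vertically by `ratio` (scaling about zero)."""
    return x * ratio


def augment(x: np.ndarray, n_copies: int, rng: np.random.Generator) -> np.ndarray:
    """Create `n_copies` altered versions of one beat, one random transform each.

    The parameter ranges below are illustrative, not taken from the paper.
    """
    copies = []
    for _ in range(n_copies):
        kind = rng.integers(3)
        if kind == 0:
            copies.append(amplitude_shift(x, rng.uniform(-0.5, 0.5)))
        elif kind == 1:
            copies.append(time_shift(x, int(rng.integers(-5, 6))))
        else:
            copies.append(amplify(x, rng.uniform(0.8, 1.2)))
    return np.stack(copies)


beat = np.sin(np.linspace(0, 2 * np.pi, 140))  # stand-in for one 140-sample beat
extra = augment(beat, n_copies=24, rng=np.random.default_rng(0))
```

Each transform keeps the beat length at 140 samples, so augmented copies can be mixed freely with the original training beats.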

3.3. Training Process

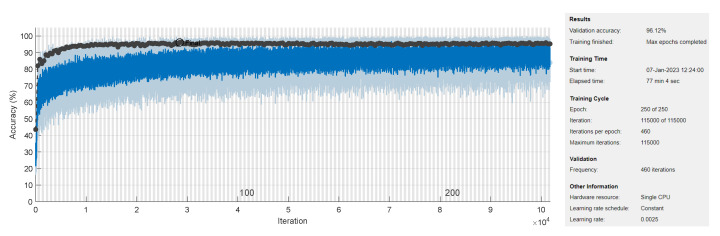

The general TCN model, proposed in Section 3.1 and shown in Figure 4, is trained using the Adam optimizer on mini-batches of size 20 at a learning rate of
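For readers unfamiliar with the optimizer named above, the following is a minimal NumPy sketch of the standard Adam update rule with its usual default moment coefficients, together with a helper that counts mini-batch updates per epoch. The learning rate is left as a required argument because its value is missing in this copy; the helper assumes an incomplete last batch is dropped, which is consistent with 9204 augmented training beats and a batch size of 20 giving 460 updates per epoch. Names are hypothetical.

```python
import numpy as np


def iterations_per_epoch(n_train: int, batch_size: int = 20) -> int:
    """Mini-batch updates per epoch, assuming an incomplete last batch is dropped."""
    return n_train // batch_size


class Adam:
    """Standard Adam update rule for one parameter array."""

    def __init__(self, lr: float, beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = None  # first-moment (mean) estimate of the gradient
        self.v = None  # second-moment (uncentered variance) estimate
        self.t = 0     # number of updates performed so far

    def step(self, param: np.ndarray, grad: np.ndarray) -> np.ndarray:
        if self.m is None:
            self.m = np.zeros_like(param)
            self.v = np.zeros_like(param)
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad ** 2
        # Bias correction compensates for initializing m and v at zero.
        m_hat = self.m / (1 - self.beta1 ** self.t)
        v_hat = self.v / (1 - self.beta2 ** self.t)
        return param - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


print(iterations_per_epoch(9204))  # 460
```

On the very first step the bias-corrected ratio is close to the sign of the gradient, so each parameter moves by roughly the learning rate regardless of the gradient's scale.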

3.4. Network Evaluation

Classification model performance is mostly evaluated based on the "confusion matrix" [54]. This common tool applies to both binary and multiclass classification problems and records the counts of predicted versus actual outcomes. For each class, four quantities can be identified: TP, FP, TN, and FN. "TP" (True Positive) is the number of positive examples correctly classified by the model. Similarly, "TN" (True Negative) is the number of negative examples correctly classified. "FP" (False Positive) is the number of negative examples classified by the model as positive, whereas "FN" (False Negative) is the number of positive examples classified as negative. The most commonly used criterion for evaluating a classification model is accuracy, the fraction of correctly classified examples among all examples:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (3)$$

However, the accuracy defined in Equation (3) can be misleading when the training and test datasets are imbalanced. Therefore, other metrics can be included in the evaluation for a better analysis: precision and sensitivity. Precision is the proportion of predicted positive cases that are actually positive, i.e., the number of true positives over the total number of predicted positives. Sensitivity, on the other hand, is the proportion of actual positive cases that are correctly recognized, i.e., the number of true positives over the total number of actual positives. The formulas are:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Sensitivity} = \frac{TP}{TP + FN}$$

Note that these two quantities are computed for each class separately, and the overall values are then obtained by averaging. For brevity, precision and sensitivity can be combined into a single term, the F1 score, which is their harmonic mean:

$$F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}}$$

The balanced
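The metrics discussed in this subsection can all be computed from a multiclass confusion matrix, as in the NumPy sketch below. It is an illustration, not the authors' evaluation code: per-class TN is not needed for these metrics, and F1 is computed here from the macro-averaged precision and sensitivity, following the averaging described in the text. Averaging per-class F1 scores instead is a common alternative that can give a slightly different number. Function names and the toy labels are hypothetical.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """cm[i, j] counts examples whose actual class is i and predicted class is j."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for actual, predicted in zip(y_true, y_pred):
        cm[actual, predicted] += 1
    return cm


def evaluate(cm):
    """Accuracy plus macro-averaged precision, sensitivity and F1 from a confusion matrix."""
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # predicted as the class but actually another class
    fn = cm.sum(axis=1) - tp  # actually the class but predicted as another class
    # Per-class values; a class with no predictions (or no examples) scores 0.
    precision = np.divide(tp, tp + fp, out=np.zeros_like(tp), where=(tp + fp) > 0)
    sensitivity = np.divide(tp, tp + fn, out=np.zeros_like(tp), where=(tp + fn) > 0)
    p, s = precision.mean(), sensitivity.mean()
    f1 = 2 * p * s / (p + s) if p + s > 0 else 0.0
    return {"accuracy": np.trace(cm) / cm.sum(), "precision": p,
            "sensitivity": s, "f1": f1}


# Usage: three classes, six test beats, two of them misclassified.
cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], n_classes=3)
scores = evaluate(cm)
```

In this toy case accuracy is 4/6, while precision (13/18) and sensitivity (2/3) reveal that class 0 is both over-predicted and under-recognized, which accuracy alone hides.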

It is always preferable to achieve a good performance of the TCN model using the most concise network structure. For that, the number of parameters that comprise the TCN is another criterion to be considered in the evaluation process. A TCN, as shown in Figure 4, is made up of multiple layers, where each distinct layer involves a different number of parameters. The only layers that have no learnable parameters are the input layer, the ReLU layer, and the dropout layer. The input layer only provides the shape of the input signal and has nothing to do with the training process. The ReLU performs a threshold operation on its input x according to the fixed equation

where W and B are the number of weights and the number of biases of the convolution layer, respectively. Note that the dilation, stride, and padding are hyperparameters and add no learnable parameters [55,56]. Similarly, a normalization layer has two kinds of learnable parameters of its own: an offset (also called beta) and a scale (gamma), with one parameter of each kind per channel. Attached to the convolution layer, the normalization layer thus has
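The counting rules above translate into a few small functions: a 1D convolution contributes kernel_size × in_channels × filters weights plus one bias per filter, a normalization layer contributes two parameters per channel, and input, ReLU, and dropout layers contribute none. The `tcn_params` total below is for a hypothetical layout (two convolutions per block, each followed by normalization, a 1×1 skip convolution when channel counts differ, and a dense layer fed with `filters` features); it is not claimed to reproduce the parameter counts in Table 1.

```python
def conv1d_params(kernel_size: int, in_channels: int, filters: int) -> int:
    """Learnable parameters of a 1D convolution: W weights plus B biases.

    Dilation, stride, and padding do not change this count.
    """
    weights = kernel_size * in_channels * filters
    biases = filters
    return weights + biases


def norm_params(channels: int) -> int:
    """One offset (beta) and one scale (gamma) per channel."""
    return 2 * channels


def dense_params(in_features: int, out_features: int) -> int:
    """Fully connected layer: one weight per input-output pair plus one bias per output."""
    return in_features * out_features + out_features


def tcn_params(n_blocks, filters, kernel_sizes, in_channels=1, n_classes=5, convs_per_block=2):
    """Total for a HYPOTHETICAL layout; input, ReLU, and dropout layers add nothing."""
    total = 0
    channels = in_channels
    for b in range(n_blocks):
        block_in = channels
        for _ in range(convs_per_block):
            total += conv1d_params(kernel_sizes[b], channels, filters) + norm_params(filters)
            channels = filters
        if block_in != filters:  # 1x1 convolution on the skip path
            total += conv1d_params(1, block_in, filters)
    return total + dense_params(filters, n_classes)


print(tcn_params(1, 16, [3]))  # 1029
```

Because the count depends on kernel size, channels, and filters but not on dilation, deeper dilated stacks enlarge the receptive field at a fixed per-layer cost.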

4. Results and Discussion

The TCN model proposed in Section 3.1 is tested on the ECG5000 dataset [13], which was derived from the BIDMC Congestive Heart Failure Database [14]. The raw record is composed of 17,998,834 data points, including 92,584 heartbeats. The heartbeats are first extracted and then interpolated so that they all have the same length. After that, the heartbeats are annotated according to five classes with the following labels: Normal (N), R-on-T Premature Ventricular Contraction (Ron-T PVC), Premature Ventricular Contraction (PVC), Supraventricular Premature or Ectopic Beat (SP or EB), and Unclassified Beat (UB). About 128,570 annotated heartbeats are available, from which a dataset of 5000 randomly selected heartbeats is created. This dataset is then divided into two sets: a training set of 4500 samples and a test set of 500 samples.
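One way to load the data and draw a random 4500/500 split is sketched below. It assumes the UCR-style text layout used for ECG5000 downloads (one beat per line, the class label first, then the samples, separated by whitespace); the file names, the fixed seed, and the helper names are assumptions, and the example runs on a tiny in-memory stand-in rather than the real files.

```python
import io

import numpy as np


def load_ucr_txt(source):
    """Parse UCR-style text: one beat per line, class label first, then the samples."""
    data = np.loadtxt(source, ndmin=2)
    labels = data[:, 0].astype(int)
    beats = data[:, 1:]
    return beats, labels


def random_split(beats, labels, n_test, seed=0):
    """Shuffle once with a fixed seed, then hold out `n_test` beats for testing."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(labels))
    test_idx, train_idx = order[:n_test], order[n_test:]
    return (beats[train_idx], labels[train_idx]), (beats[test_idx], labels[test_idx])


# Usage with a tiny in-memory stand-in for the real file (10 beats of 4 samples).
# For the real data one would pass a path such as "ECG5000_TRAIN.txt" (assumed name)
# and use n_test=500 on the pooled 5000 beats.
text = "\n".join(f"{i % 5 + 1} 0.1 0.2 0.3 {i}" for i in range(10))
beats, labels = load_ucr_txt(io.StringIO(text))
(train_x, train_y), (test_x, test_y) = random_split(beats, labels, n_test=2)
```

A purely random split like this does not guarantee that rare classes (such as UB) appear in the test set; a stratified split would be a reasonable alternative if that matters.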

Different configurations of the TCN model were built and tested experimentally. All details are summarized in Table 1, which reports 12 different experiments. The number of blocks varies between 3 and 5. The filter size of the dilated convolution layers is constant within a block but grows from one block to the next, whereas the number of filters is the same in all blocks. The resulting number of parameters of each TCN model is also reported (see Section 3.4 for details). In all experiments, the mini-batch size is 20 and training lasts 250 epochs. The dilation factors (

Table 1

Different forms of the TCN model proposed in Section 3.1 with different data augmentation cases.

| Exp | #Blocks | #Filters | Filter Size | #Params | Data Augmentation Factor | Epoch Size | F1 Score | Accuracy |
|---|---|---|---|---|---|---|---|---|
| 1 | 4 | 16 | | 10.2 K | class3:24, class4:12, class5:24 | 460 | | |
| 2 | 3 | 16 | | 6 K | class3:24, class4:12, class5:24 | 460 | | |
| 3 | 5 | 16 | | 15.4 K | class3:24, class4:12, class5:24 | 460 | | |
| 4 | 4 | 8 | | 2.7 K | class3:24, class4:12, class5:24 | 460 | | |
| 5 | 4 | 32 | | 39.9 K | class3:24, class4:12, class5:24 | 460 | | |
| 6 | 4 | 16 | | 14.9 K | class3:24, class4:12, class5:24 | 460 | | |
| 7 | 4 | 32 | | 58.4 K | class3:24, class4:12, class5:24 | 460 | | |
| 8 | 3 | 16 | | 8.6 K | class3:24, class4:12, class5:24 | 460 | | |
| 9 | 3 | 16 | | 12.2 K | class3:24, class4:12, class5:24 | 460 | | |
| 10 | 3 | 32 | | 23.3 K | class3:24, class4:12, class5:24 | 460 | | |
| 11 | 4 | 16 | | 10.2 K | class3:24, class4:24, class5:24 | 566 | | |
| 12 | 4 | 16 | | 10.2 K | class3:24, class4:06, class5:24 | 407 | | |

The TCN model in Experiment 1 performs best among all models in Table 1. In detail, it has convolution layers that involve 16 filters (

Table 2

Data augmentation applied on ECG5000 dataset in Experiments 1–10 of Table 1.

| Class | Amplitude Shift | Time Shift | Amplification |
|---|---|---|---|
| PVC | | | |
| SP | | | |
| UB | | | |

Table 3

Distribution of samples of ECG5000 dataset in Experiments 1–10 of Table 1, before and after data augmentation.

| Class | Testing Set | Training Set (Before Augmentation) | Total (Before Augmentation) | Training Set (After Augmentation) | Total (After Augmentation) |
|---|---|---|---|---|---|
| N | 292 | 2627 | 2919 | 2627 | 2919 |
| Ron-T PVC | 177 | 1590 | 1767 | 1590 | 1767 |
| PVC | 10 | 86 | 96 | 2150 | 2160 |
| SP | 19 | 175 | 194 | 2287 | 2306 |
| UB | 2 | 22 | 24 | 550 | 552 |
| Total | 500 | 4500 | 5000 | 9204 | 9704 |

The ECG5000 dataset has been adopted in various classification works on heart disease detection. Table 4 compares our optimal model with some state-of-the-art models, reporting the accuracy, the F1 score (which combines sensitivity and precision), and the number of parameters of each model. As shown, our TCN model, with the fewest parameters among all existing models (

Table 4

Evaluation of different methods on the ECG5000 dataset.

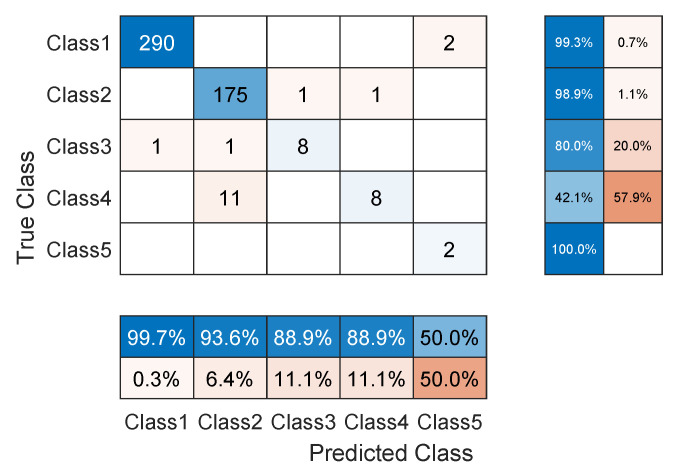

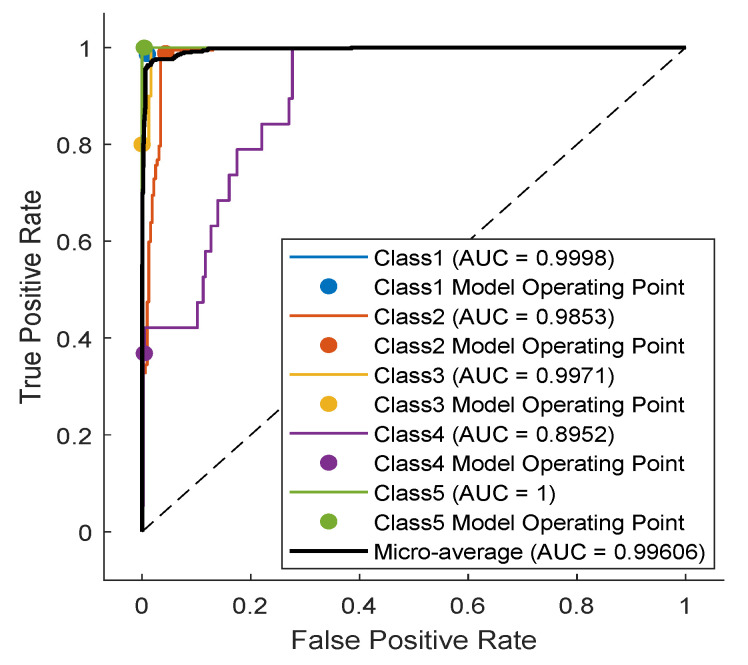

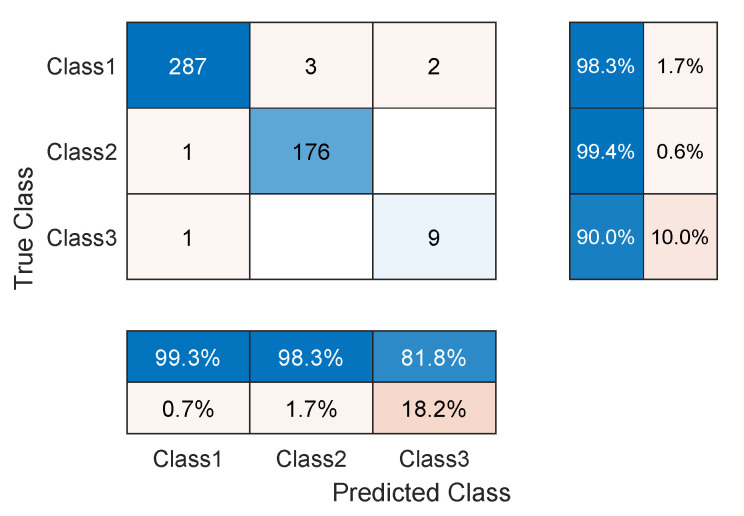

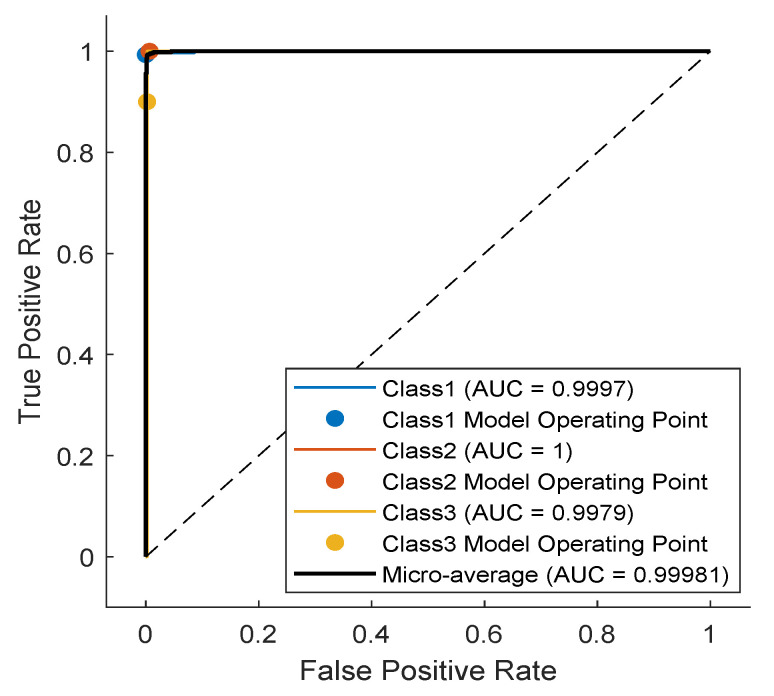

As noticed in the confusion matrix of Figure 7, the slight deficiency in the F1 score is mainly due to classes SP and UB (see orange boxes). For that, we retrain the network while excluding these classes. As shown in Table 4, the accuracy now is up to

5. Conclusions

In this paper, we proposed a 1D Temporal Convolutional Network (TCN)-based architecture for the ECG classification of five heart diseases. The main goal was to provide a low-complexity architecture intended for low-cost embedded devices for remote health monitoring. The proposed architecture is characterized by its simplicity and by the smallest number of parameters compared with state-of-the-art approaches (

Funding Statement

This research was funded by the European Erasmus+ capacity building for higher education program under grant agreement no. 619483.

Author Contributions

Conceptualization and methodology A.R.I., S.J. and H.R.; software, A.R.I.; validation, A.R.I., S.J. and H.R.; investigation, A.R.I., S.J. and H.R.; resources, H.R. and N.R.; writing—original draft preparation, A.R.I., S.J. and H.R.; writing—review and editing, S.J., A.R.I., N.R. and H.R.; visualization, H.R., S.J., N.R. and A.R.I.; supervision, H.R. and S.J.; project administration, H.R.; funding acquisition, H.R. and N.R. All authors have read and agreed to the published version of the manuscript.

Data Availability Statement

http://www.timeseriesclassification.com/description.php?Dataset=ECG5000.

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

Articles from Sensors (Basel, Switzerland) are provided here courtesy of Multidisciplinary Digital Publishing Institute (MDPI)

Full text links

Read article at publisher's site: https://doi.org/10.3390/s23031697
