On 14 Aug, from ~13:40 to 14:50, maps.wikimedia.org had reduced availability, with only ~50% of requests succeeding.

Background

One of the components of our maps infrastructure is the tegola vector tile server (running on Kubernetes under tegola-vector-tiles.svc.$dc.wmnet). Kartotherian (kartotherian.discovery.wmnet) makes a request to tegola, and tegola in turn checks its cache (thanos-swift.discovery.wmnet). If the object is not found, tegola pulls the related data from a maps-postgres host, generates the tile, and stores it back in the cache. Tegola-vector-tiles talks directly to thanos-swift.

Happy Path: edge -> kartotherian -> tegola -> thanos-swift
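The lookup described above is a cache-aside flow. The following minimal Go sketch shows its shape; `TileCache`, `memCache`, `getTile` and the `generate` callback are hypothetical stand-ins for thanos-swift and the maps-postgres render step, not tegola's actual code. Treating any cache read error as a miss is also an assumption made only for this sketch. The tile key is borrowed from the path in the 14 Aug log.

```go
package main

import (
	"errors"
	"fmt"
)

// ErrMiss is returned when a key is absent (hypothetical: stands in for
// an object-not-found response from thanos-swift).
var ErrMiss = errors.New("cache miss")

// TileCache is a hypothetical interface for the tile cache.
type TileCache interface {
	Get(key string) ([]byte, error)
	Set(key string, tile []byte) error
}

// memCache is an in-memory TileCache used only for this example.
type memCache struct{ m map[string][]byte }

func (c *memCache) Get(key string) ([]byte, error) {
	if t, ok := c.m[key]; ok {
		return t, nil
	}
	return nil, ErrMiss
}

func (c *memCache) Set(key string, tile []byte) error {
	c.m[key] = tile
	return nil
}

// getTile tries the cache first; on a miss (or, by assumption here, any
// read error) it generates the tile and stores it back. The bool result
// reports whether the tile came from the cache.
func getTile(c TileCache, key string, generate func(string) ([]byte, error)) ([]byte, bool, error) {
	if tile, err := c.Get(key); err == nil {
		return tile, true, nil
	}
	tile, err := generate(key) // e.g. query maps-postgres and render
	if err != nil {
		return nil, false, err
	}
	_ = c.Set(key, tile) // a failed write-back does not fail the request here
	return tile, false, nil
}

func main() {
	cache := &memCache{m: map[string][]byte{}}
	render := func(key string) ([]byte, error) { return []byte("tile:" + key), nil }

	_, hit, _ := getTile(cache, "osm/8/148/74", render)
	fmt.Println("first request from cache:", hit)
	_, hit, _ = getTile(cache, "osm/8/148/74", render)
	fmt.Println("second request from cache:", hit)
}
```

The point of the sketch is that every request touches the cache before anything else, so a cache that answers slowly (rather than with a clean miss) delays every tile request.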

Partial Outage

From ~13:40 onwards, tegola was overwhelmed with in-flight requests, and kartotherian in turn started returning 500s due to timeouts. The kartotherian unavailability was picked up by our usual LVS checks and, in turn, by ATS.
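A rough way to see why a slow cache turns into a backlog of in-flight requests is Little's law, L = λ × W: the average number of requests in flight equals the arrival rate times the average time each request stays in flight. The Go sketch below plugs in hypothetical numbers (200 requests/s, a 50 ms healthy cache round trip, and a 10 s stall, which happens to be the default `TLSHandshakeTimeout` of Go's `http.DefaultTransport`); none of these are measurements from this incident.

```go
package main

import (
	"fmt"
	"time"
)

// inFlight applies Little's law, L = λ × W, where λ is the arrival rate
// in requests per second and W is the mean time a request stays in flight.
func inFlight(ratePerSec float64, latency time.Duration) float64 {
	return ratePerSec * latency.Seconds()
}

func main() {
	const rate = 200.0 // hypothetical requests/s reaching tegola

	healthy := inFlight(rate, 50*time.Millisecond) // hypothetical cache round trip
	stalled := inFlight(rate, 10*time.Second)      // each request stuck for 10s

	fmt.Printf("healthy: ~%.0f requests in flight\n", healthy)
	fmt.Printf("stalled: ~%.0f requests in flight\n", stalled)
}
```

With these inputs the backlog grows 200-fold (from ~10 to ~2000 concurrent requests) at the same traffic level, which is the kind of growth that would push callers such as kartotherian into their own timeouts.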

Due to the issues listed below, it was not immediately obvious that this outage was related to the cfssl rollout on thanos-swift (change 950072).

Responders: @fgiunchedi @Jgiannelos @jbond @Joe @JMeybohm @herron

Red Herrings:

Two issues delayed us in pinpointing the exact problem:

- maps2009, the maps-postgres primary server, is dead (T344110)

- a scap bug/hiccup on 1 Aug had the side effect of making kartotherian in eqiad use tegola-vector-tiles.svc.codfw.wmnet as its backend instead of the eqiad one. This was discovered during the incident, though it was unrelated to it.
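A hypothetical check like the one below could surface a backend that points at the wrong datacenter. It is a Go sketch, assuming the backend is configured as a URL and that the expected host follows the `tegola-vector-tiles.svc.$dc.wmnet` pattern from this report; `checkBackend` is an illustrative name, not an existing scap or kartotherian check.

```go
package main

import (
	"fmt"
	"net/url"
)

// checkBackend returns an error unless backend points at the
// tegola-vector-tiles service in localDC. Hypothetical helper; the
// expected host pattern is tegola-vector-tiles.svc.<dc>.wmnet.
func checkBackend(localDC, backend string) error {
	u, err := url.Parse(backend)
	if err != nil {
		return fmt.Errorf("unparsable backend %q: %w", backend, err)
	}
	want := "tegola-vector-tiles.svc." + localDC + ".wmnet"
	if host := u.Hostname(); host != want {
		return fmt.Errorf("backend host %q is not the local DC's %q", host, want)
	}
	return nil
}

func main() {
	// The red-herring situation: kartotherian in eqiad pointed at codfw.
	fmt.Println(checkBackend("eqiad", "https://tegola-vector-tiles.svc.codfw.wmnet/"))
	// The intended same-DC configuration passes (prints <nil>).
	fmt.Println(checkBackend("eqiad", "https://tegola-vector-tiles.svc.eqiad.wmnet/"))
}
```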

Actions taken so far

- codfw is depooled (due to T344110)

- cfssl patch has been rolled back

- updated wmf-certificates in tegola-vector-tiles

- enabled debug logging for tegola's S3 client, hoping to get more information

- we rolled out the cfssl patch twice on codfw and pooled codfw back in to get traffic. Relevant time windows:

  - 14 Aug ~13:40 - 14:50 (actual incident - grafana &from=1692018879707&orgId=1&to=1692027162776)

  - 17 Aug 13:36 - 13:40 GMT

  - 17 Aug ~13:50 - 14:00 GMT (grafana: &from=1692279018056&to=1692282274755)

  - 18 Aug 14:24 - 14:29 GMT (grafana: &from=1692367786000&to=1692373532000)

Information

- both CAs are present in the tegola-vector-tiles container

- a curl call from the codfw tegola-vector-tiles container to thanos-swift is successful

- when experimenting with codfw, we restarted all pods, and tegola's cache tests were successful

- in other words, it appears that tegola is able to talk to thanos-swift after the cfssl rollout

- we have not ruled out that TLS is the problem here

- during the codfw tests, we initially saw tegola serving requests and talking to its cache. Within 1-2 minutes of pooling, tegola failed to reach its cache.

- during the 14 Aug incident, we saw the following errors:

{"log":"2023-08-14 14:38:31 [ERROR] middleware_tile_cache.go:42: cache middleware: error reading from cache: RequestError: send request failed\n","stream":"stderr","time":"2023-08-14T14:38:31.737858817Z"}

{"log":"caused by: Get https://thanos-swift.discovery.wmnet/tegola-swift-codfw-v002/shared-cache/osm/8/148/74: net/http: TLS handshake timeout\n","stream":"stderr","time":"2023-08-14T14:38:31.737918346Z"}

- during the 18 Aug test, the S3 debug log didn't show a TLS error; instead, we saw the S3 client making GET requests without getting a response

- notably, Go's net/http package is the HTTP client here

- cipher suites: envoy selects TLS_AES_128_GCM_SHA256 with the puppet cert vs TLS_AES_256_GCM_SHA384 with the cfssl cert
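Since the client is Go's net/http, the two cache symptoms above map onto specific client settings: `Transport.TLSHandshakeTimeout` is what produces the `net/http: TLS handshake timeout` error in the 14 Aug log, and `Client.Timeout` bounds a GET that never receives a response, as seen on 18 Aug. The minimal Go program below illustrates both, assuming illustrative timeout values and using a local `httptest` TLS server and a stalled TCP listener in place of thanos-swift; it is not tegola's code, and whether tegola's S3 client sets these fields is not established here. It also reports the negotiated cipher suite, the property compared in the last bullet.

```go
package main

import (
	"crypto/tls"
	"crypto/x509"
	"fmt"
	"io"
	"net"
	"net/http"
	"net/http/httptest"
	"time"
)

// newClient builds an HTTP client with explicit timeouts. roots is the CA
// pool to trust (nil means the system pool). The timeout values passed in
// below are illustrative assumptions, not tegola's actual settings.
func newClient(roots *x509.CertPool, handshake, total time.Duration) *http.Client {
	tr := http.DefaultTransport.(*http.Transport).Clone()
	tr.TLSClientConfig = &tls.Config{RootCAs: roots}
	// A handshake that does not complete in time fails with
	// "net/http: TLS handshake timeout", as in the 14 Aug log.
	tr.TLSHandshakeTimeout = handshake
	// Client.Timeout bounds the whole request, so a GET that never gets a
	// response (the 18 Aug symptom) errors out instead of hanging.
	return &http.Client{Transport: tr, Timeout: total}
}

// probe issues a GET and reports the status and the negotiated cipher
// suite, roughly what a verbose curl from the container would show.
func probe(c *http.Client, url string) (string, error) {
	resp, err := c.Get(url)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	if resp.TLS == nil {
		return "", fmt.Errorf("%s: not a TLS connection", url)
	}
	return resp.Status + ", " + tls.CipherSuiteName(resp.TLS.CipherSuite), nil
}

// stalledListener accepts TCP connections but never answers the
// ClientHello, so the client's handshake hangs until its timeout fires.
func stalledListener() (net.Listener, error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return nil, err
	}
	go func() {
		for {
			conn, err := ln.Accept()
			if err != nil {
				return // listener closed
			}
			go io.Copy(io.Discard, conn) // read whatever arrives, never reply
		}
	}()
	return ln, nil
}

// demo probes a local TLS test server and a stalled listener with the
// same client; both stand in for thanos-swift.
func demo() (ok string, okErr, stalledErr error) {
	srv := httptest.NewTLSServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, "ok")
	}))
	defer srv.Close()
	roots := x509.NewCertPool()
	roots.AddCert(srv.Certificate())
	c := newClient(roots, 200*time.Millisecond, 2*time.Second)

	ok, okErr = probe(c, srv.URL)

	ln, err := stalledListener()
	if err != nil {
		return ok, okErr, err
	}
	defer ln.Close()
	_, stalledErr = probe(c, "https://"+ln.Addr().String()+"/")
	return ok, okErr, stalledErr
}

func main() {
	ok, okErr, stalledErr := demo()
	fmt.Println("test server:", ok, okErr)
	fmt.Println("stalled listener:", stalledErr)
}
```

Pointing `newClient` and `probe` at the real thanos-swift URL with a pool holding both CAs would give a Go-side counterpart to the curl check; that use is a suggestion and has not been tried against this setup.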

Useful Graphs and data

14 Aug

tegola-vector-tiles-app-metrics

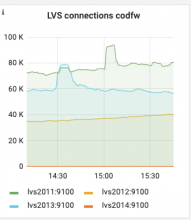

lvs codfw

thanos-swift codfw

thanos-fe2004 host overview

thanos-swift envoy telemetry

18 Aug

cc: @Jgiannelos @Vgutierrez @Joe @JMeybohm @akosiaris (thank you for helping us go through logs and tcpdumps)